It started with bytes, kilobytes, and megabytes. Then gigabytes and terabytes. Now, it’s zettabytes (1 billion terabytes), yottabytes (1,000 zettabytes), and more. The rapid rate of data generation in recent years means new terms need to be created to quantify data.
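The size ladder above uses decimal (SI) prefixes, where each step up is a factor of 1,000. The minimal Python sketch below checks the two conversions quoted in the text. It assumes decimal units (1 kilobyte = 1,000 bytes) rather than binary units (1 KiB = 1,024 bytes).

```python
# Decimal (SI) byte units, ordered so that each is 1,000x the previous one.
# Assumption: decimal prefixes (1 kilobyte = 1,000 bytes), not binary (1,024-based) ones.
UNITS = ["byte", "kilobyte", "megabyte", "gigabyte", "terabyte",
         "petabyte", "exabyte", "zettabyte", "yottabyte"]

def size_in_bytes(unit: str) -> int:
    """Return the number of bytes in one `unit`."""
    return 1000 ** UNITS.index(unit)

def ratio(larger: str, smaller: str) -> int:
    """How many `smaller` units fit into one `larger` unit."""
    return size_in_bytes(larger) // size_in_bytes(smaller)

print(ratio("zettabyte", "terabyte"))   # 1000000000 (one billion terabytes)
print(ratio("yottabyte", "zettabyte"))  # 1000
```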

These days, more than 300 quintillion (300,000,000,000,000,000,000) bytes of data are created globally every day1. Emails, online video, social media, web searches, Internet of Things (IoT) devices, and more all contribute to today’s data explosion. With the arrival of the AI era and the emergence of applications such as ChatGPT, Sora, and Midjourney, the pace of data generation will only continue to accelerate. So, how can the world handle and manage all this data? The answer lies in high-performance semiconductor memory.

1Source: “Volume of data/information created, captured, copied, and consumed worldwide from 2010 to 2020, with forecasts from 2021 to 2025,” Statista (June 2021). The 2023 figure is a forecast and has been converted into a daily total.

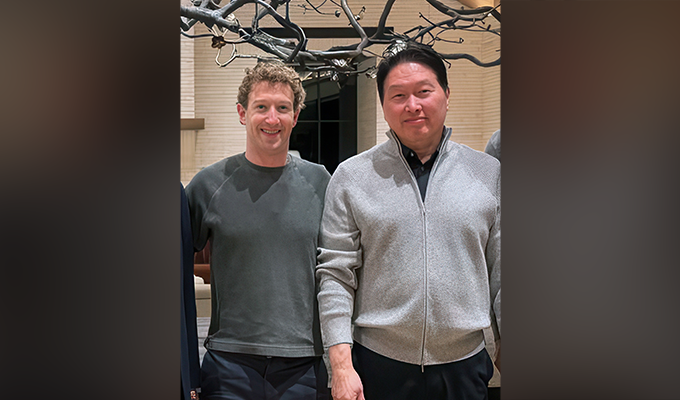

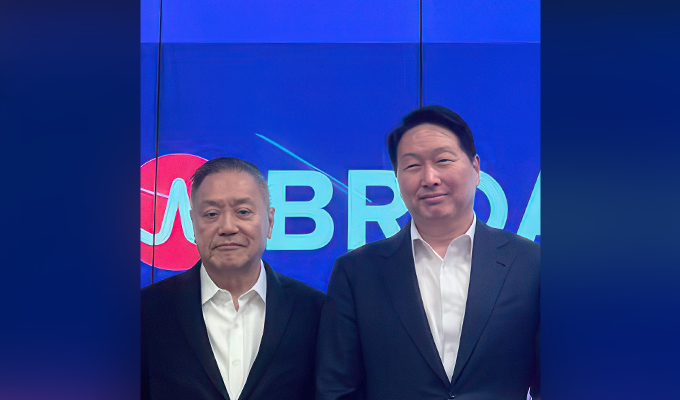

As highlighted in the brand film above, memory is required to store, process, compute, and learn the world’s data. Today, SK hynix is the leading memory solution provider, offering the world’s fastest and most advanced products that are critical for AI systems.

How Memory Unlocks Advanced AI Performance

In the age of AI, semiconductor memory is essential for the smooth operation of AI systems. This is evident in memory’s role in large language models (LLMs)2, which are trained on large datasets to improve their performance. Memory products enable the storage and quick retrieval of these datasets, allowing AI systems to process data efficiently. Furthermore, memory solutions with high bandwidth and capacity accelerate training by rapidly accessing and handling data. Once trained, AI models must make predictions, process new data, or generate outputs in real-time applications. Here, memory solutions provide rapid access to the pre-trained models and associated data, enabling fast inference3 and response times.

2Large language model (LLM): A language model trained on vast amounts of data. LLMs are essential for generative AI tasks such as creating, summarizing, and translating text.

3AI inference: The process of running live data through a trained AI model to make a prediction or solve a task.

As a market leader, SK hynix offers high-performance AI memory products that are crucial for AI training, inference, and more. All of these solutions are optimized to meet the growing needs of AI systems.

SK hynix’s Next-Gen Memory for Tomorrow’s AI Systems

The increasing complexity and growing inference needs of AI applications have led to the emergence of more sophisticated models, larger datasets, and higher data processing requirements. To satisfy these high demands, SK hynix has been developing total AI memory solutions capable of enhancing AI performance through rapid data transfers, increased bandwidth, and maximum energy efficiency.

These products include the company’s next-generation AI server solutions, such as HBM3E, the world’s best-performing High Bandwidth Memory (HBM)4 product. This ultra-high-performance AI memory solution offers industry-leading data processing speeds and high capacity, making it ideal for AI training servers in data centers. Having already gained the biggest share of the HBM market, SK hynix will further its leadership in the field through the mass production of HBM3E.

Another key AI server product from SK hynix is CXL®5, a standardized interface that improves the utilization of CPUs, GPUs, accelerators, and memory. Because it can expand memory while also increasing system bandwidth and processing capacity, CXL is well suited to the high performance demands of AI servers. Furthermore, the QLC6-based enterprise SSDs (eSSDs) developed by SK hynix’s U.S. subsidiary Solidigm offer industry-leading performance and massive capacity, making them an optimal solution for AI servers that require immense storage space.

4High Bandwidth Memory (HBM): A high-value, high-performance product that dramatically increases data processing speeds by vertically stacking multiple DRAM chips and connecting them with through-silicon vias (TSVs).

5Compute Express Link (CXL): A next-generation, PCIe-based interconnect protocol designed for high-performance computing systems.

6Quadruple Level Cell (QLC): A type of NAND flash memory that stores 4 bits of data per memory cell. Because a QLC cell holds four times as much data as a single-level cell (SLC), which stores 1 bit, QLC enables higher capacity and greater cost efficiency.
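The QLC capacity advantage described in footnote 6 comes down to simple arithmetic: a cell that stores n bits must distinguish 2^n threshold-voltage levels. The short Python sketch below is for illustration only. It tabulates this for the common NAND cell types.

```python
# Bits stored per cell for common NAND flash cell types.
CELL_TYPES = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4}

def voltage_states(bits_per_cell: int) -> int:
    """A cell holding n bits must distinguish 2**n threshold-voltage levels."""
    return 2 ** bits_per_cell

def capacity_vs_slc(cell_type: str) -> int:
    """Data stored per cell relative to a single-level cell (same number of cells)."""
    return CELL_TYPES[cell_type] // CELL_TYPES["SLC"]

for name, bits in CELL_TYPES.items():
    print(f"{name}: {bits} bit(s)/cell, {voltage_states(bits)} voltage states, "
          f"{capacity_vs_slc(name)}x SLC capacity")
```

Capacity per cell grows linearly with bits per cell, but the number of levels each cell must tell apart grows exponentially. This is why each step in density is harder to achieve.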

In addition to these server products, SK hynix also offers a range of on-device AI7 solutions. As the world’s fastest mobile DRAM, LPDDR5T8 is optimized for mobile devices. Offering breakneck operating speed and unparalleled low-power and low-voltage characteristics, LPDDR5T meets the demands for high-performing and energy-efficient mobile DRAMs.

SK hynix’s latest Graphics DDR (GDDR)9 solution, GDDR7, is also set to be integral to on-device AI PCs. GDDR7 offers significant improvements in bandwidth and power efficiency over its predecessor, GDDR6, enabling it to handle the fast, large-capacity data processing required for AI inference. Additionally, the company’s PCIe10 Gen5 SSD PCB01 will be a game changer for on-device AI PCs. Boasting the industry’s highest sequential read speed and a fast sequential write speed, PCB01 can load the LLMs required for AI training and inference in less than one second.

7On-device AI: The performance of AI computation and inference directly within devices such as smartphones or PCs, unlike cloud-based AI services that require a remote cloud server.

8Low Power Double Data Rate 5 Turbo (LPDDR5T): Low-power DRAM for mobile devices, including smartphones and tablets, designed to minimize power consumption through low-voltage operation. LPDDR5T is an upgraded version of the 7th-generation LPDDR5X and will be succeeded by the 8th-generation LPDDR6.

9Graphics DDR (GDDR): A standard specification of graphics DRAM defined by the Joint Electron Device Engineering Council (JEDEC) and specialized for processing graphics more quickly. It is now one of the most popular memory chips for AI and big data applications.

10Peripheral Component Interconnect Express (PCIe): A high-speed serial input/output interface used on the motherboards of digital devices.
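A back-of-the-envelope estimate shows why the PCB01 load-time claim depends on sequential read speed. To a first approximation, load time is model size divided by sequential read speed. In the Python sketch below, the parameter count, weight precision, and read speed are hypothetical placeholders, not published PCB01 specifications. The sketch also ignores file-system and software overheads.

```python
def model_size_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate size of a model's weights in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * bytes_per_param / 1e9

def load_time_s(size_gb: float, read_gb_per_s: float) -> float:
    """Idealized load time: size divided by sequential read speed, ignoring overheads."""
    return size_gb / read_gb_per_s

# Hypothetical example: a 3-billion-parameter model stored as 16-bit (2-byte) weights,
# read from a drive assumed to sustain 12 GB/s sequential read.
size = model_size_gb(3e9, 2)  # 6.0 GB
print(f"{size:.1f} GB -> {load_time_s(size, 12.0):.2f} s")  # 6.0 GB -> 0.50 s
```

Doubling the model size or halving the read speed doubles the estimate. This is why sequential read bandwidth is the headline figure for loading models locally.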

The Unseen Power Behind AI

As the leading AI memory solution provider, SK hynix is committed to driving innovation in AI memory technology to meet the needs of companies worldwide. Having already started mass-producing HBM3E, the company plans to begin mass production of the next-generation HBM4 in 2025. In an AI era in which data storage and usage requirements continue to grow, SK hynix’s high-performance solutions are the unseen power enabling AI systems for our data-hungry world.