A daily life of intellectual discussions with a human-like robot, and of emotional connection through small talk with it. A society where humans are no longer exposed to danger because robots take over dangerous tasks at construction or disaster sites. A world where simple, repetitive work such as housework is left to robots and humans do only the work that requires creativity and thinking ability. A world where the range of human activity has expanded dramatically as space is pioneered with the help of robots. These are some of the scenes that semiconductors are expected to bring us in the near future.

What is the Current Status of Humanoid Technology?

“Humanoid” is a compound of “human” and the suffix “-oid”, which means “resembling”, and refers to a robot with intelligence and a body like those of humans. Once something that existed only in the imagination of sci-fi movies, it is expected to appear in daily life as science and technology advance.

How close is the future where humanoids appear in our daily lives? The first humanoid known to have succeeded in bipedal walking is “WABOT-1”, developed under Professor Ichiro Kato of Waseda University in 1973. It managed only a few steps, but it could walk on two feet. Since then, robot technology, which once allowed only a few faltering steps, has continued to develop remarkably. In recent years, it has evolved to the level where robots can avoid obstacles on their own or run.

The functions of humanoids continue to improve in terms of human interaction as well. Some of the latest humanoids, such as SoftBank’s “Pepper” in Japan and Hanson Robotics’ “Sophia” in Hong Kong, use a camera and a speech recognition program to recognize a person’s facial expressions and voice during conversation. AI algorithms then analyze these inputs, allowing the robots to hold natural conversations with humans and even express some emotions.

Korea’s first humanoid robot Hubo appears as a news anchor to present news

(Source: Korea Advanced Institute of Science and Technology (KAIST)).

One of the very first and most representative humanoids made with Korean technology is the “Hubo” series by the Korea Advanced Institute of Science and Technology (KAIST). In 2015, in the DARPA Robotics Challenge (DRC), a robot competition focused on disaster response, Hubo surpassed the world’s leading humanoids and took first place, demonstrating Korea’s high level of robotics technology to the world. Hubo later became a hot topic again as a torch relay runner at the PyeongChang 2018 Olympic Winter Games, and this year, it appeared as a news presenter on Taejon Broadcasting Corporation (TJB).

The market prospects of humanoids are also bright. According to the market research institute “Report & Report”, the humanoid market is expected to grow to USD 3.9 billion in 2023.¹ Another market research institute, “Mordor Intelligence”, also predicted that the related market will grow to around USD 3.3 billion in 2024.²

Of course, it will still take some time until the technology reaches the commercialization stage where humanoids are actively used in our daily lives, because current science and technology still have many challenges to overcome. Current humanoids have limited operating time and range of movement due to battery constraints, and their level of artificial intelligence is still insufficient compared to humans. Most of them look more like a machine than a person. Also, for storing and processing data, most of them connect online to an external cloud rather than using data stored on board. This suggests that they are not yet stable enough to cope with the countless variables of our daily lives.

The Appearance of Future Humanoids – “Electronic Skin” with the Same Touch and Appearance as Human Skin

To make robots look similar to humans, it is essential to have an “electronic skin (e-skin)” that resembles human skin in all aspects, from color and shape to touch. In the past, research and development in the field of e-skin focused on medical purposes rather than applications to robots.

Research on e-skin began in earnest in the early 2000s. In the early days, e-skin made by mounting sensors in silicon was mainly studied, but this was insufficient to reproduce the flexibility of human skin. Later, with the development of carbon-based materials such as graphene, the research began to gain momentum. In particular, graphene is regarded as the most suitable material for e-skin because it has very high thermal conductivity and excellent electron mobility, and is transparent and flexible. Since then, researchers all over the world have pursued the topic, and related research results have poured out over the past three to four years.

In 2017, a research team at the University of Glasgow’s School of Engineering unveiled an e-skin that uses solar energy and graphene and has a soft outer surface, with a capability of sensing external stimuli through an embedded electronic chip. The research team made a sensor similar to the skin tactile receptor, implanted it into the e-skin, and realized a method of absorbing solar energy and converting it into electrical energy.

In February 2018, a University of Colorado research team led by Professor Jianliang Xiao and Professor Wei Zhang unveiled an e-skin that can heal itself when damaged. The research team built the electronic skin mainly from “polyimine”, a polymer compound made of repeating carbon-nitrogen double bonds that recovers easily even when scratched. Silver nanoparticles were also added for stability and strength.

A schematic diagram of an artificial sensor that mimics the generation of tactile pain signals and signal processing-based artificial pain signal generation

(Source: Daegu Gyeongbuk Institute of Science and Technology (DGIST))

In the e-skin field, Korean scientists are showing excellent competitiveness. For example, in July 2019, a research team led by Professor Jae-eun Jang of the Department of Information and Communication Engineering at DGIST developed a tactile sensor that can feel pain and temperature like humans. The work was a joint effort with research teams led by Professor Cheil Moon (Department of Brain and Cognitive Science), Professor Ji-woong Choi (Department of Information and Communication Engineering), and Professor Hongsoo Choi (Department of Robotics Engineering). In particular, the development of a sensor that can feel pain is significant because it provides a means to control the aggressiveness of robots in humanoid development.

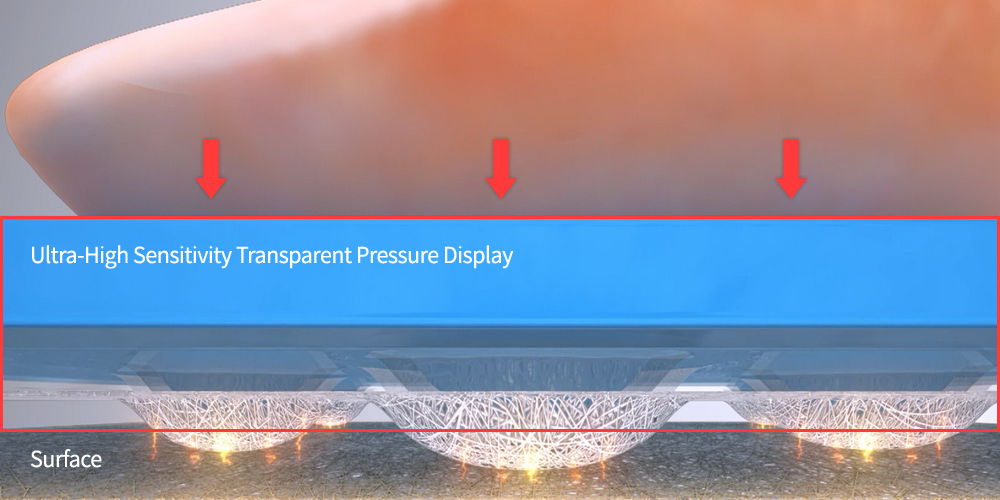

An ultra-high sensitivity transparent display (20 times higher sensitivity than previous displays) that reads the surface information of an object (Source: Flexible Electronic Device Laboratory, Realistic Device Source Research Division, Electronics and Telecommunications Research Institute (ETRI))

This year, the related research results of Korean researchers were remarkable as well. In February, a joint research team of the Realistic Device Source Research Division of Electronics and Telecommunications Research Institute (ETRI), Department of Computer Science and Engineering and Soft Robotics Research Center (SRRC) of Seoul National University developed an ultra-high sensitivity transparent pressure sensor that can detect minute changes in pressure and identify three-dimensional surface information of the object to which pressure is applied.

The field of electronic skin has only recently begun to show meaningful research results, mostly from academia, so commercialization by companies will take time. Market experts, however, point out that the electronic skin market has great growth potential. The global market research institute “Market Report” predicted that the related market will record a compound annual growth rate (CAGR) of 17.2% from 2019 to 2024.³
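To put the 17.2% figure in perspective, a compound annual growth rate can be converted into a total growth multiple. The short sketch below does that arithmetic. It assumes five compounding periods between 2019 and 2024, and the function name is illustrative rather than taken from the cited report.

```python
def growth_multiple(cagr: float, years: int) -> float:
    """Return how many times larger a market becomes after compounding."""
    return (1.0 + cagr) ** years


# Assumption: 2019 -> 2024 is treated as five yearly compounding periods.
multiple = growth_multiple(0.172, 5)
print(f"{multiple:.2f}x")  # about 2.21x
```

Under those assumptions, the market would slightly more than double over the period.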

The Brain of Future Humanoids – “Neuromorphic Computing” That Challenges Human Brains

What are the key technologies in the development of humanoids? There may be different answers, but artificial intelligence (AI) technology cannot be left out. This is because humanoids should be able to recognize, think, and judge just like humans, in order to play a role similar to that of humans in terms of behavior, as well as appearance.

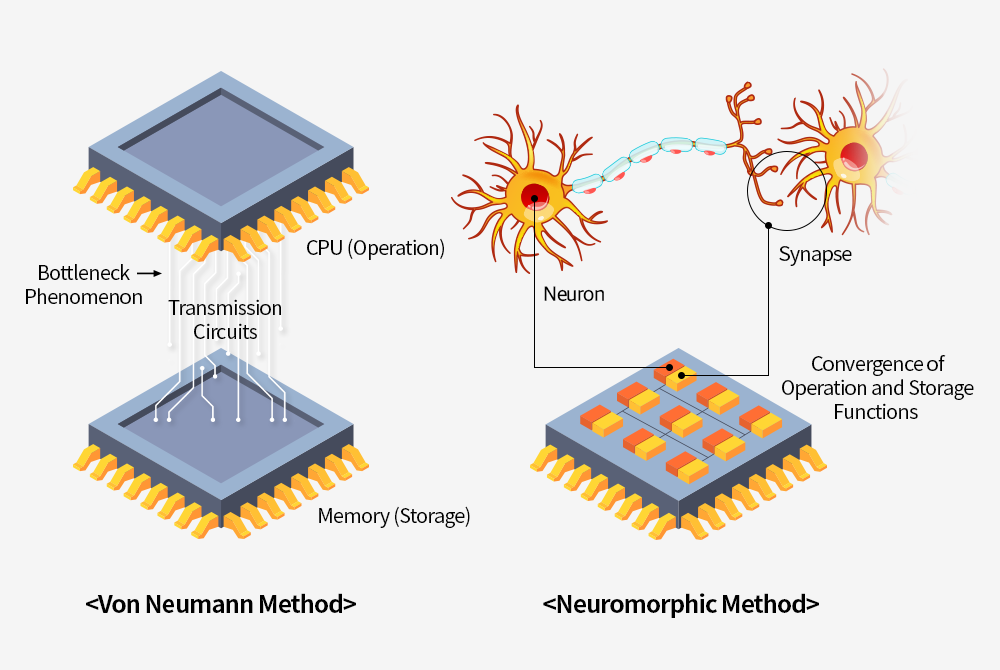

With the current von Neumann architecture⁴, it may be possible to implement a large supercomputer; however, there is a limit to implementing an artificial intelligence system that fits inside a small humanoid with a human-like appearance. This is because, while artificial intelligence algorithms based on today’s “deep neural networks (DNNs)⁵” have proven performance similar to or beyond that of humans in many complicated cognitive tasks, the energy efficiency of the computing systems that run these algorithms still falls far short of the human brain.⁶

In addition, because the von Neumann architecture consists of a central processing unit (CPU) that performs calculations and a memory that stores data, a bottleneck arises as data moves between the CPU and memory to be processed and stored. Although various attempts are being made to improve the efficiency of machine learning⁷, the era of semiconductor technology innovation under Moore’s Law⁸ is now considered to be approaching its end. As a result, the improvement in performance and power efficiency that can be expected from the existing method is not significant.

For this reason, the “neuromorphic⁹ computing method”, which can overcome this limitation by developing a semiconductor that resembles the way the human brain processes information, is now being studied. In the human brain, neurons and synapses are arranged in a parallel structure, so there is no need to worry about bottlenecks. Based on this, attempts are being made to develop a neuromorphic semiconductor composed of multiple “cores”, in each of which several electronic devices including transistors and memories are mounted on silicon.

Some devices in the core play the role of neurons, the nerve cells of the brain, and the memory chips act like the synapses that connect neurons. If they are organized in a parallel structure as in a human brain, a larger amount of data can be processed with much less power. Also, because such a chip can learn and operate like a human brain, complex calculations and reasoning can be processed efficiently. This is a step closer to realizing artificial intelligence in the true sense: one with the ability to learn and think on its own.
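The division of roles between neurons and synapses can be illustrated with a toy software model. The sketch below simulates a single leaky integrate-and-fire neuron, a common textbook abstraction. The article does not name a specific neuron model, so the model choice, the function name, and all parameter values here are assumptions for illustration only, not a description of any particular chip.

```python
def simulate_lif(input_spikes, weights, threshold=1.0, leak=0.9):
    """Simulate one leaky integrate-and-fire neuron.

    input_spikes: list of time steps, each a list of 0/1 values (one per synapse).
    weights: one stored weight per synapse (the "memory" in the synapse role).
    Returns a list with one 0/1 output spike per time step.
    """
    potential = 0.0
    output = []
    for spikes in input_spikes:
        # Leak: the accumulated potential decays a little every step.
        potential *= leak
        # Integrate: add the weight of every synapse that received a spike.
        potential += sum(w * s for w, s in zip(weights, spikes))
        if potential >= threshold:
            output.append(1)   # fire a spike
            potential = 0.0    # reset after firing
        else:
            output.append(0)
    return output


# Two synapses; the neuron only fires when enough input arrives close together.
print(simulate_lif([[1, 0], [1, 0], [0, 0], [1, 1]], [0.6, 0.6], leak=0.5))
```

Because the neuron does work only when spikes arrive, many such units can run side by side with little idle activity, which is the intuition behind the power savings described above.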

Intel is considered to be the leader in the neuromorphic semiconductor development field. Having introduced its neuromorphic semiconductor “LOIHI” last year, Intel has now unveiled its latest neuromorphic computing system, “Pohoiki Springs”, which is built on LOIHI. With 130,000 neurons, LOIHI is a neuromorphic semiconductor with up to 1,000 times faster processing speed and up to 10,000 times higher efficiency compared to traditional methods. Pohoiki Springs combines 768 LOIHI chips for around 100 million neurons, which is comparable to the brain of a small mammal.
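The “around 100 million” figure can be checked directly from the two numbers quoted above. A quick sketch, with variable names chosen only for illustration:

```python
NEURONS_PER_CHIP = 130_000   # neurons per chip, as quoted in the text
CHIPS_IN_SYSTEM = 768        # chips combined in Pohoiki Springs, as quoted

total_neurons = NEURONS_PER_CHIP * CHIPS_IN_SYSTEM
print(f"{total_neurons:,}")  # 99,840,000
```

The product is just under 100 million, consistent with the rounded figure in the text.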

In addition, Intel built its own computing system by developing a mathematical algorithm that implemented an animal’s olfactory system, together with a research team from Cornell University in the US. In July this year, it also succeeded in realizing visual and tactile functions with the neuromorphic computing method with a research team at the National University of Singapore. These accomplishments suggest that the five senses of humans are being implemented one by one, going a step further from equipping an artificial intelligence system with simple computational functions.

With the progress of such research, it is expected that an artificial intelligence system will be able to become the brains of humanoids in the near future.

Storage of Future Humanoids – “DNA Memory” Capable of Storing 1 Billion GB in 1㎟

Just as it is difficult to estimate the amount of information humans need in order to live as humans, it is not easy to accurately estimate the amount of data humanoids need in order to live like humans. Nonetheless, because humanoid technology integrates advanced future technologies such as AI, big data, and robotics, a storage device capable of holding an enormous amount of data is required to make it a reality.

Considering the amount of data required to implement artificial intelligence, it is expected to be difficult to hold all the data needed to run humanoids using only today’s hard disk drives (HDDs) or high-capacity NAND flash-based solid-state drives (SSDs). For this reason, storing data in a separate data center or cloud and accessing it over a network is a promising approach. In situations where the network is hard to maintain, such as a disaster, however, malfunctions are possible, which raises concerns about stability. Therefore, humanoids are more likely to be equipped with next-generation storage devices built on innovative future semiconductor technology than with today’s storage devices.

The most promising option is “DNA memory”. The reason DNA memory is attracting attention among next-generation memory technologies is simple: DNA has a higher data storage density than any other material on Earth. Theoretically, DNA can store about 1 billion GB of data per ㎟. Also, as the medium that stores genetic information, DNA has a semi-permanent storage period.

The key to realizing DNA memory is a technology for converting data from the existing binary representation of 0s and 1s into a DNA sequence made up of four types of bases: adenine (A), guanine (G), cytosine (C), and thymine (T).

Among the four bases, A binds complementarily with T, and G with C. The A-T combination is therefore set to 0 and the C-G combination to 1, so that the digital information of 0 and 1 can be encoded as a DNA base sequence. A base sequence encoded in this way can be created artificially through DNA synthesis in a machine and then encapsulated for storage. Reading the data works the other way round. First, the capsule is removed (DNA release) and the DNA sequence is read with a DNA decoding device (sequencing). When the sequence read in this way is translated back into 0s and 1s, the data is restored identically to the original.¹⁰

The first to simulate the biological DNA structure with artificial DNA was the research team led by Professor George Church at Harvard University. In 2012, the team created artificial DNA from chemical polymers and devised a method of representing A and C as 0, and G and T as 1, for the storage of binary data. The team then converted the data to be stored into artificial DNA and arranged it on a microchip, developing the first DNA memory.
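The mapping described above, with A or C standing for 0 and G or T standing for 1, can be sketched in a few lines. This is a minimal illustration, not the team’s actual pipeline: the function names are hypothetical, and the rule of picking whichever base differs from the previous one (to avoid repeating the same base twice in a row) is an assumption added here to show how the two choices per bit could be used.

```python
BIT_TO_BASES = {"0": "AC", "1": "GT"}            # two candidate bases per bit
BASE_TO_BIT = {"A": "0", "C": "0", "G": "1", "T": "1"}


def encode(data: bytes) -> str:
    """Translate bytes into a base sequence, one base per bit."""
    bits = "".join(f"{byte:08b}" for byte in data)
    strand = []
    for bit in bits:
        first, second = BIT_TO_BASES[bit]
        # Assumption: prefer the candidate that differs from the previous base.
        base = second if strand and strand[-1] == first else first
        strand.append(base)
    return "".join(strand)


def decode(strand: str) -> bytes:
    """Translate a base sequence back into the original bytes."""
    bits = "".join(BASE_TO_BIT[base] for base in strand)
    return bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))


sequence = encode(b"Hi")
print(sequence)          # AGACGACACGTAGACG
print(decode(sequence))  # b'Hi'
```

In a real system, the encoded sequence would be synthesized, stored, and later sequenced before decoding; the sketch covers only the translation between bits and bases.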

Among companies, Microsoft, Intel, and Micron Technology form the leading group, with Microsoft at the front. Last year, Microsoft introduced a system that can automatically translate digital information into genetic code and retrieve it again. Although it takes 21 hours just to store and re-read 5 bytes of data, it is regarded as a step toward commercialization in that it is an automated system for reading and writing with DNA.

As mentioned above, leading global researchers are continuing their work, and government-led projects have recently been launched as well. Accordingly, “DNA memory” is expected to be commercialized in the near future. In Korea, “DNA memory development” was selected in July as a national project under the “Disruptive Innovation Project” of the Ministry of Science and ICT (MSIT). In the US, a consortium for DNA memory development was organized by the Intelligence Advanced Research Projects Activity (IARPA), and USD 48 million was invested this year alone. If the efforts to develop future memory technologies achieve the desired results quickly, humanoids may also be commercialized earlier than expected.

1 Blog of Ministry of Economy and Finance of Korea (http://blog.naver.com/mosfnet/221971807614)

2 SAMJONG KPMG Newsletter (December 2019) (https://home.kpmg/kr/ko/home/newsletter-channel/201912/emerging-trends.html)

3 Webzine (Vol. 158) of the Electronics and Telecommunications Research Institute (ETRI) (https://www.etri.re.kr/webzine/20200814/sub01.html)

4 A general-purpose, stored-program computer architecture consisting of a CPU, memory, and programs

5 An artificial neural network (ANN) composed of several hidden layers between an input layer and an output layer; the latest AI systems are currently implemented based on DNNs.

6 Trend of AI Neuromorphic Semiconductor Technology, Kwang-il Oh, Sung-eun Kim, Younghwan Bae, Kyunghwan Park, Youngsu Kwon, Electronics and Telecommunications Research Institute (ETRI) (2020)

7 Technology or system (program) that allows artificial intelligence to learn data and make predictions on its own

8 The rule of thumb that the number of transistors that can be integrated on a semiconductor chip doubles about every 24 months

9 Neuromorphic: a compound of “neuro” (neuron) and “morphic” (having a specific shape or form)

10 DNA Application Technology Trends, Jae-ho Lee, Do-young Kim, Moon-ho Park, Youn-ho Choi, Youn-ok Park, Electronics and Telecommunications Trends V.32 No.2 (2017)

References

Overseas Research Trends of Humanoid Robots, Yisu Li, Jiseob Kim, Sanghyun Kim, Jieun Lee, Mingon Kim, Jaehong Park, Korea Robotics Society (2019)

Official website of Hanson Robotics (https://www.hansonrobotics.com)

Official website of Boston Dynamics (https://www.bostondynamics.com)

Official website of SoftBank Robotics Korea (https://softbankrobotics.com)

Official website of Korea Advanced Institute of Science and Technology (KAIST) (https://www.kaist.ac.kr)

Electronic Skin Technology Trends, Yong-taek Hong, Jeong-hwan Byeon, Eun-ho O, Byeong-mun Lee, The Korean Information Display Society (2016)

Official website of the University of Colorado Boulder (https://www.colorado.edu)

Official website of the University of Connecticut (https://today.uconn.edu)

Official website of the Daegu Gyeongbuk Institute of Science and Technology (DGIST) (https://www.dgist.ac.kr)

Official website of Hanyang University

Official website of the Electronics and Telecommunications Research Institute (ETRI) (https://www.etri.re.kr)

Official website of the National Research Foundation of Korea (NRF) (https://www.nrf.re.kr)

Official website of Intel (https://newsroom.intel.com/press-kits/intel-labs/)

Trend of AI Neuromorphic Semiconductor Technology, Kwang-il Oh, Sung-eun Kim, Younghwan Bae, Kyunghwan Park, Youngsu Kwon, Electronics and Telecommunications Research Institute (ETRI) (2020)

The Present and Future of Neuromorphic Devices, Jong Gil Park, The Korea Institute of Science and Technology Information (2017)

Neuromorphic Hardware Technology Trends, Doo Seok Jeong, The Korean Physical Society (2019)

Official website of Columbia University in the City of New York (https://magazine.columbia.edu)

DNA Application Technology Trends, Jae-ho Lee, Do-young Kim, Moon-ho Park, Youn-ho Choi, Youn-ok Park, Electronics and Telecommunications Trends V.32 No.2 (2017)

Official website of the Ministry of Science and ICT of Korea (https://www.msit.go.kr)