SK hynix showcased its enhanced AiM¹-based AI memory solution at the AI Infra Summit 2025, held September 9–11 in Santa Clara, California, underscoring its technological leadership in future AI services.

¹ Accelerator-in-Memory (AiM): SK hynix’s product name for its Processing-in-Memory (PIM) semiconductors, including GDDR6-AiM.

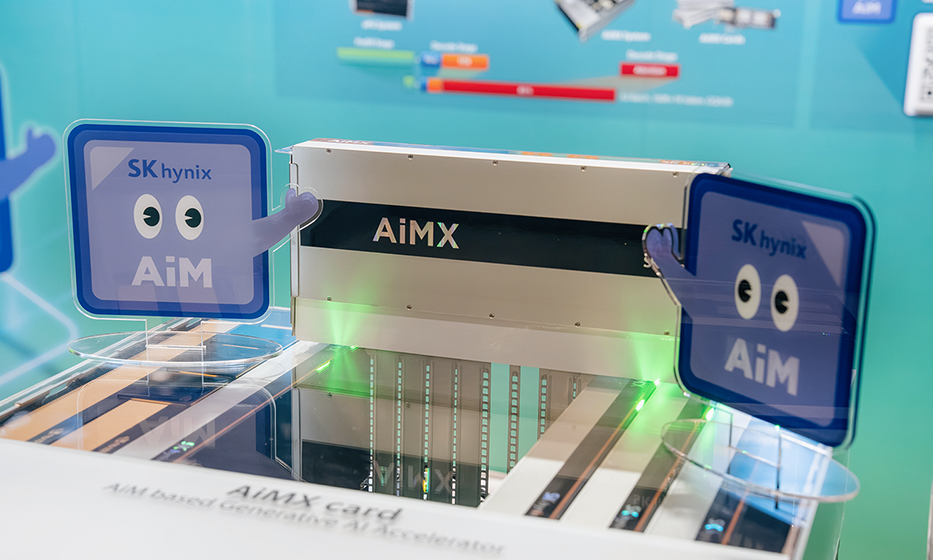

SK hynix’s booth at the AI Infra Summit 2025

Global AI industry leaders in enterprise and research gathered at the summit to demonstrate the latest hardware and software infrastructure technologies. Formerly known as the AI Hardware & Edge AI Summit, the event attracted more than 3,500 attendees and over 100 partners under the slogan “Powering Fast, Efficient & Affordable AI”.

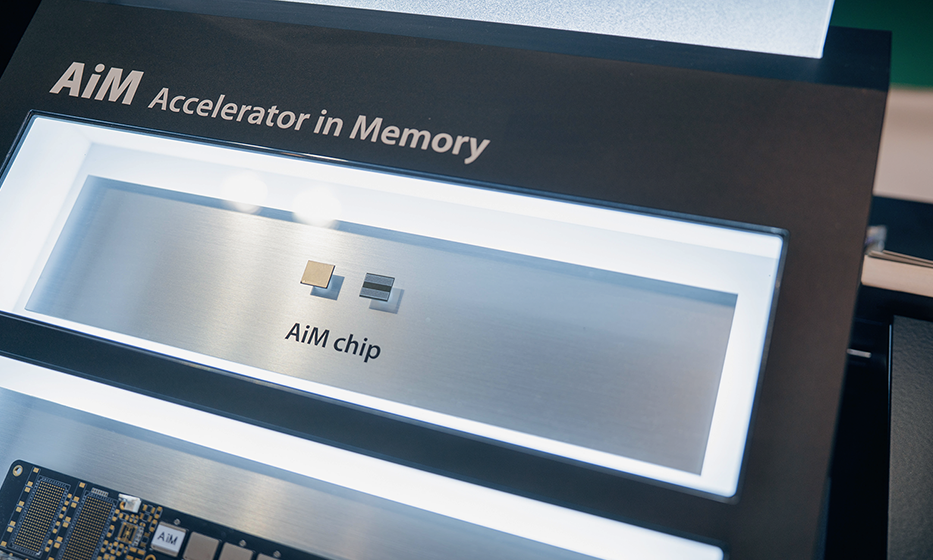

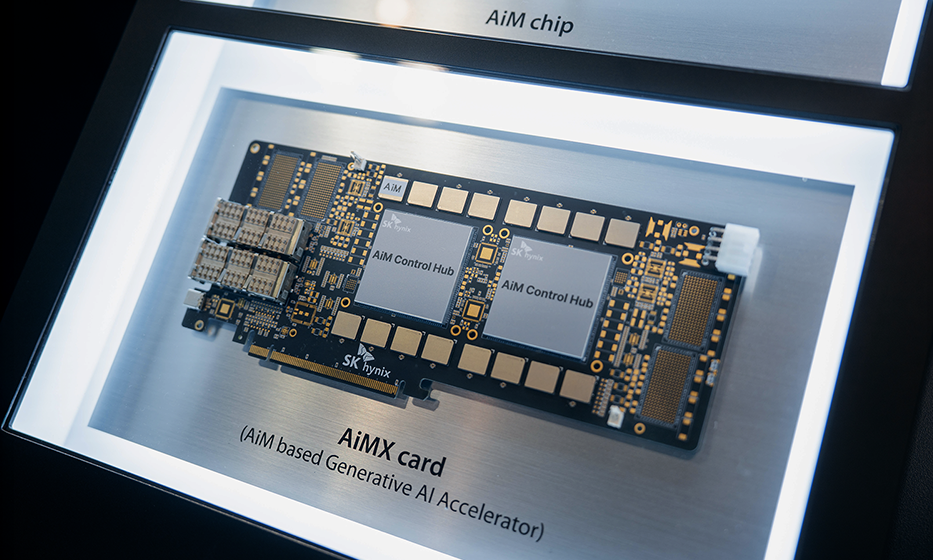

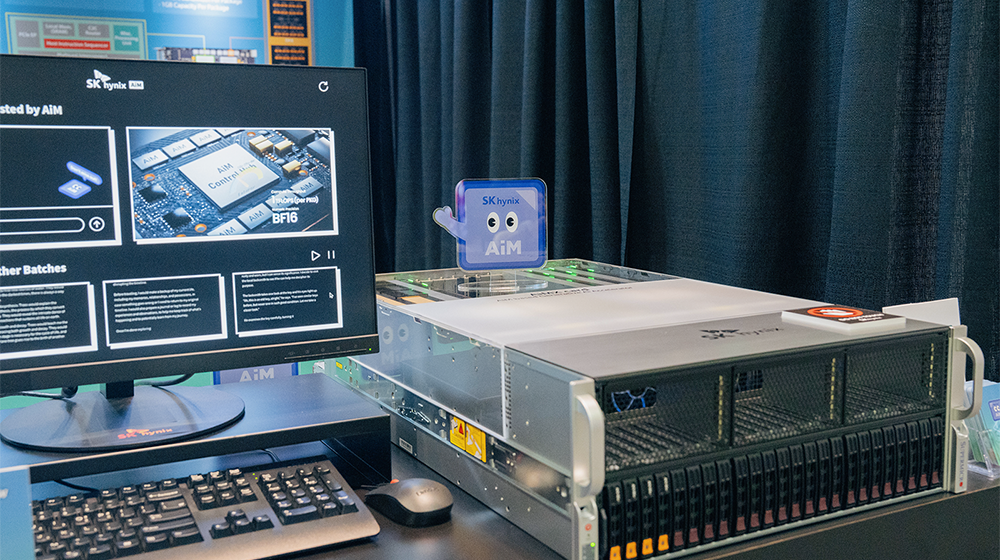

At the summit, SK hynix revealed the latest developments in its PIM²-based AiM solution, AiMX³. At its booth, themed “Boost Your AI: AiM is What You All Need,” the company ran live demonstrations on a Supermicro server (GPU SuperServer SYS-421GE-TNRT3) equipped with two NVIDIA H100 GPUs and four AiMX cards. Visitors could watch the system run under real-world conditions and see AiM’s capabilities and competitiveness firsthand.

² Processing-in-Memory (PIM): A next-generation memory technology that integrates computational capabilities into memory, addressing data movement bottlenecks in AI and big data processing.

³ AiM-based Accelerator (AiMX): SK hynix’s accelerator card built on GDDR6-AiM and specialized for large language models (LLMs), AI systems such as ChatGPT that are trained on massive text datasets.

The demonstration also showed how to overcome the memory wall⁴ in LLM⁵ inference. In GPU-only systems, the GPU handles both compute-bound⁶ and memory-bound⁷ operations, which becomes inefficient as the number of user requests or the context length grows. In contrast, an AiMX-based disaggregated inference system⁸ splits the workload into two phases: the memory-bound phase runs on the AiMX accelerator, while the compute-bound phase runs on the GPU. This division lets the system process more user requests at once and handle longer input prompts.

⁴ Memory wall: A bottleneck that occurs when memory access speed lags behind processor speed.

⁵ Large language model (LLM): An AI model trained on massive datasets to understand and generate natural language.

⁶ Compute-bound: A workload in which the overall processing speed is limited by the processor’s computational capabilities.

⁷ Memory-bound: A workload in which the overall processing speed is limited by memory access speed.

⁸ Disaggregated inference system: An architecture that divides computational tasks among different types of processing units, splitting processing operations into two separate parts.
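The compute-bound versus memory-bound distinction can be made concrete with a roofline-style estimate: an operation is memory-bound when its arithmetic intensity (FLOPs per byte moved) falls below the hardware’s balance point (peak FLOPs divided by memory bandwidth). The Python sketch below is an illustration under simplifying assumptions, not SK hynix’s method: it assumes FP16 data (2 bytes per element), counts only KV-cache reads for decode-phase attention and only weight reads for a feed-forward (FFN) layer, and uses a hypothetical balance point of 300 FLOPs/byte. The names `decode_attention`, `decode_ffn`, and `classify` are hypothetical.

```python
from dataclasses import dataclass

# Assumption: FP16 tensors, 2 bytes per element.
BYTES_PER_ELEM = 2


@dataclass
class Op:
    name: str
    flops: float        # floating-point operations performed
    bytes_moved: float  # bytes read from memory (dominant traffic only)

    @property
    def intensity(self) -> float:
        """Arithmetic intensity in FLOPs per byte."""
        return self.flops / self.bytes_moved


def decode_attention(batch: int, context_len: int, d_model: int) -> Op:
    """One decode step of attention: each request has one new query token."""
    # q·K^T and softmax(scores)·V each cost about 2 * L * d FLOPs per request.
    flops = batch * 2 * (2 * context_len * d_model)
    # Each request streams its own K and V cache: 2 * L * d elements.
    bytes_moved = batch * 2 * context_len * d_model * BYTES_PER_ELEM
    return Op("attention", flops, bytes_moved)


def decode_ffn(batch: int, d_model: int, d_ff: int) -> Op:
    """One decode step of a two-matrix feed-forward layer."""
    # Up- and down-projection: 2 matmuls, 2 FLOPs per multiply-accumulate.
    flops = batch * 2 * (2 * d_model * d_ff)
    # The weights are shared by the whole batch, so they are read once.
    bytes_moved = 2 * d_model * d_ff * BYTES_PER_ELEM
    return Op("ffn", flops, bytes_moved)


def classify(op: Op, machine_balance: float) -> str:
    """Roofline rule: below the balance point, memory bandwidth is the limit."""
    return "compute-bound" if op.intensity >= machine_balance else "memory-bound"


if __name__ == "__main__":
    BALANCE = 300.0  # hypothetical FLOPs/byte for an illustrative GPU
    for batch in (1, 64, 512):
        for op in (decode_attention(batch, 4096, 4096), decode_ffn(batch, 4096, 16384)):
            print(f"batch={batch:4d} {op.name:9s} "
                  f"{op.intensity:7.1f} FLOP/B -> {classify(op, BALANCE)}")
```

Under these assumptions, decode attention stays at about 1 FLOP/byte no matter how large the batch or context grows, because every request reads its own KV cache, while the FFN’s intensity rises with batch size as the same weights are reused. That gap is one way to see why moving the memory-bound part to memory-side hardware can help as requests and context lengths increase.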

The new AiMX card features updated architecture and software

SK hynix also introduced software enhancements for its AiMX card. Adopting vLLM⁹, a framework widely used in AI service development, not only expanded the card’s functionality but also enabled stable long token generation, even for reasoning models¹⁰ that involve complex inference processes.

⁹ vLLM: An open-source AI framework designed for efficient, optimized LLM inference and serving.

¹⁰ Reasoning model: An advanced AI model that can perform complex reasoning tasks beyond simple prompt responses.

With its updated architecture and enhanced software, the new AiM-based solution addresses the cost, performance, and power consumption challenges of operating LLM services, lowering operational costs compared with GPU-only systems.

On the second day of the event, Vice President Euicheol Lim, head of Solution Advanced Technology, took the stage. Lim’s session, titled “Memory/Storage: Crushing the Token Cost Wall of LLM Service: Attention Offloading¹¹ With PIM – GPU Heterogeneous System,” outlined methods for cutting token processing costs in LLM services and highlighted the impact of AI memory technology on the digital transformation of industries.

¹¹ Attention offloading: A technique in transformer-based LLMs that moves or caches portions of the attention computation on external memory devices, reducing the load on GPUs or main memory.
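The attention offloading idea can be illustrated with a minimal Python sketch. It is an assumption-laden toy, not SK hynix’s implementation or the AiMX software interface: a hypothetical `MemorySideKVStore` class stands in for a memory-side device that keeps the KV cache and computes single-query attention next to the data, while a hypothetical `decode_step` function plays the GPU’s role and performs the projections. Each step, only the query, the new key and value, and the attention output cross the device boundary; the growing cache stays put.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[Vector]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Dense matrix-vector product (stands in for GPU-side projections)."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def attention(q: Vector, keys: List[Vector], values: List[Vector]) -> Vector:
    """Scaled dot-product attention for a single query vector."""
    scale = 1.0 / math.sqrt(len(q))
    scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]
    peak = max(scores)  # subtract the max for a numerically stable softmax
    weights = [math.exp(s - peak) for s in scores]
    total = sum(weights)
    out = [0.0] * len(values[0])
    for w, v in zip(weights, values):
        for i, vi in enumerate(v):
            out[i] += (w / total) * vi
    return out


class MemorySideKVStore:
    """Hypothetical memory-side device: owns the KV cache and runs attention
    where the data lives, so the cache itself never moves to the GPU."""

    def __init__(self) -> None:
        self.keys: List[Vector] = []
        self.values: List[Vector] = []
        self.elements_transferred = 0  # vector elements crossing the link

    def append_and_attend(self, q: Vector, k: Vector, v: Vector) -> Vector:
        self.keys.append(k)
        self.values.append(v)
        out = attention(q, self.keys, self.values)
        # Inbound: q, k, v. Outbound: the attention output.
        self.elements_transferred += len(q) + len(k) + len(v) + len(out)
        return out


def decode_step(x: Vector, wq: Matrix, wk: Matrix, wv: Matrix,
                store: MemorySideKVStore) -> Vector:
    """GPU side computes the projections; the store handles attention."""
    q, k, v = matvec(wq, x), matvec(wk, x), matvec(wv, x)
    return store.append_and_attend(q, k, v)
```

In this sketch, traffic across the boundary grows by a fixed 4·d elements per step (for d×d projections), whereas computing attention on the GPU at step L would require reading the full cache of 2·L·d key and value elements into the GPU.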

The AI Infra Summit 2025 showcased the current state of AI infrastructure, spanning hardware, data centers, and edge AI. Looking ahead, SK hynix is committed to strengthening its technological leadership by delivering new AI memory solutions that tackle the challenges of future AI services.