SK hynix presented its cutting-edge DRAM, NAND, and AI memory solutions at FMS 2025: the Future of Memory and Storage, held in Santa Clara, California, from August 5 to 7, reinforcing its global memory leadership.

Now in its 19th year, FMS was known as the Flash Memory Summit until 2024, when it adopted the name the Future of Memory and Storage to reflect the transformative influence of the AI era. The event's scope also expanded from flash memory alone to the full spectrum of memory and storage technologies, including DRAM.

SK hynix’s booth at FMS 2025 attracted numerous visitors

As part of its journey to becoming a full stack AI memory provider, SK hynix showcased a variety of memory products and advanced technologies designed to power next-generation AI innovation. The company highlighted these memory and storage solutions through keynote speeches, in-depth presentations, and engaging booth exhibits. This gave global partners a firsthand look at SK hynix's technological leadership and strengthened the company's collaboration with them.

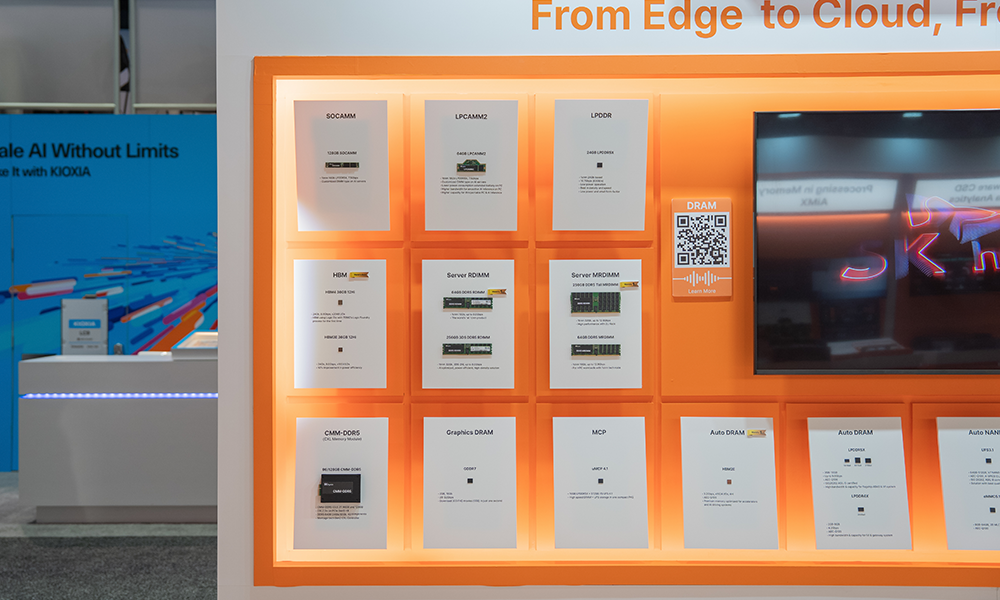

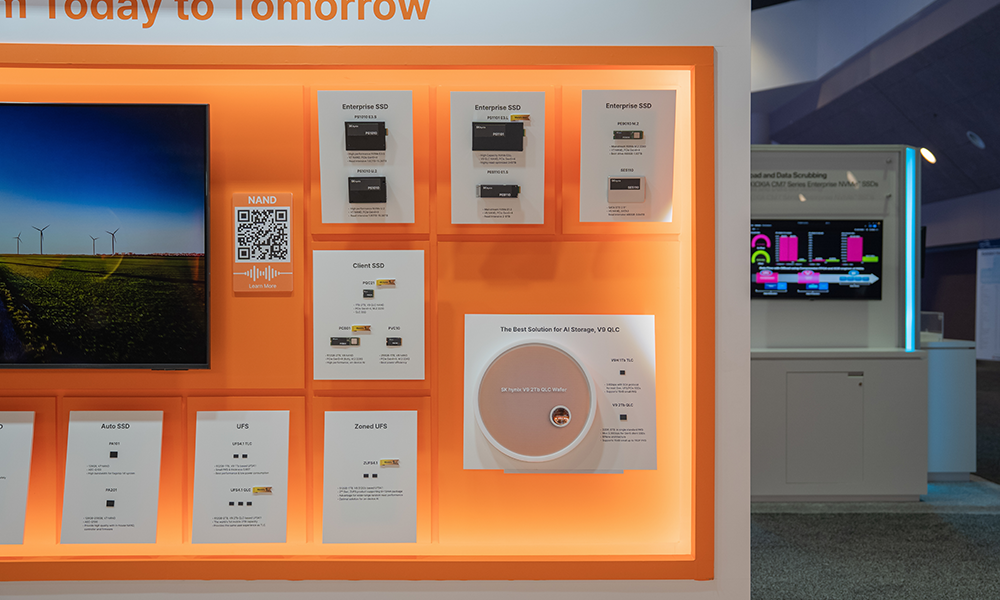

SK hynix’s groundbreaking DRAM and NAND products on display

At the Booth: A Broad Portfolio of Memory Optimized for the AI Era

SK hynix showcased products anticipated to lead the global AI memory market across the full spectrum of DRAM and NAND technologies. There were 14 DRAM-based products on display, including samples of the company's 12-layer HBM4¹, which SK hynix delivered to major customers in March 2025, an industry first. Other highlights included LPDDR5X², CXL³ Memory Module-DDR5 (CMM-DDR5), 3DS⁴ RDIMM, and Tall MRDIMM⁵.

¹High Bandwidth Memory (HBM): A high-value, high-performance product that significantly enhances data processing speeds by vertically stacking multiple DRAM chips, surpassing the capabilities of traditional DRAM.

²Low Power Double Data Rate 5X (LPDDR5X): A low-power DRAM standard designed for mobile devices such as smartphones and tablets, minimizing power consumption through low-voltage operation.

³Compute Express Link (CXL): A next-generation solution designed to integrate the CPU, GPU, memory, and other key computing system components, enabling unparalleled efficiency for large-scale and ultra-high-speed computations.

⁴3D Stacked Memory (3DS): A high-bandwidth memory product in which two or more DRAM chips are packaged together and interconnected using through-silicon via (TSV) technology.

⁵Multiplexed Rank Dual In-line Memory Module (MRDIMM): A server memory module product in which multiple DRAM chips are mounted on a substrate. Speed is enhanced by operating two ranks, the basic operating units of the module, simultaneously.

The company also presented a total of 19 NAND solution products. These included the PS1010 E3.S, a data center eSSD based on 176-layer 4D NAND; the PEB110 E1.S, built on 238-layer 4D NAND; the PQC21, SK hynix's first QLC⁶ SSD for PCs; and the PS1101 E3.L, a newly introduced data center eSSD with a capacity of 245 TB, the highest in the industry.

⁶Quad-level cell (QLC): NAND flash is categorized as single-level cell (SLC), multi-level cell (MLC), triple-level cell (TLC), QLC, and penta-level cell (PLC), storing one, two, three, four, and five bits per cell, respectively. The more bits each cell stores, the more data can be stored in the same physical space.
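To illustrate why more bits per cell means more data in the same space, here is a minimal sketch under a simplified model: a cell storing n bits must distinguish 2^n charge states, and a fixed number of cells holds capacity proportional to bits per cell. The names `CELL_TYPES`, `states_per_cell`, and `relative_capacity` are hypothetical, and the model ignores error correction, overprovisioning, and other real-device factors.

```python
# Simplified model (assumption): a NAND cell storing n bits must
# distinguish 2**n charge states. Names here are illustrative only.
CELL_TYPES = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4, "PLC": 5}


def states_per_cell(bits: int) -> int:
    """Number of distinct charge states needed to store `bits` bits in one cell."""
    return 2 ** bits


def relative_capacity(cell_type: str, baseline: str = "SLC") -> float:
    """Capacity of the same number of cells, relative to a baseline cell type."""
    return CELL_TYPES[cell_type] / CELL_TYPES[baseline]


if __name__ == "__main__":
    for name, bits in CELL_TYPES.items():
        print(f"{name}: {bits} bit(s)/cell, {states_per_cell(bits)} states, "
              f"{relative_capacity(name):.0f}x SLC capacity")
```

Under this model, a QLC cell distinguishes 16 states and the same number of cells holds four times as much data as SLC.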

At FMS 2025, SK hynix emphasized its technological capabilities and competitive edge with live product demonstrations and showcases. One demonstration featured an Intel Xeon 6 processor equipped with SK hynix's CMM-DDR5, which delivers up to 50% more capacity and up to 30% more memory bandwidth than a basic server system. The demo highlighted not only CMM-DDR5's practical applicability in real-world operations but also its system scalability, underscoring its superior performance and cost efficiency compared with traditional standalone memory configurations.

OASIS, based on data-aware CSD, is capable of independent analysis and detection

SK hynix is currently engaged in a joint research project with Los Alamos National Laboratory⁷ (LANL) to develop next-generation storage technologies that improve data analysis performance in high-performance computing (HPC) environments. During the demonstration, SK hynix showcased its OASIS computational storage, based on data-aware CSD⁸, which can perform independent data analysis and detection. The demonstration used real HPC workloads to show improved data analysis performance in real time and drew numerous attendees.

⁷Los Alamos National Laboratory (LANL): A national research and development center under the U.S. Department of Energy, conducting a wide range of research in areas such as national security, nuclear fusion, and space exploration. It is best known for its scientific research during World War II for the Manhattan Project, the Allied effort that developed the world's first atomic weapons.

⁸Computational storage drive (CSD): A storage device that performs computations directly on the data it stores.

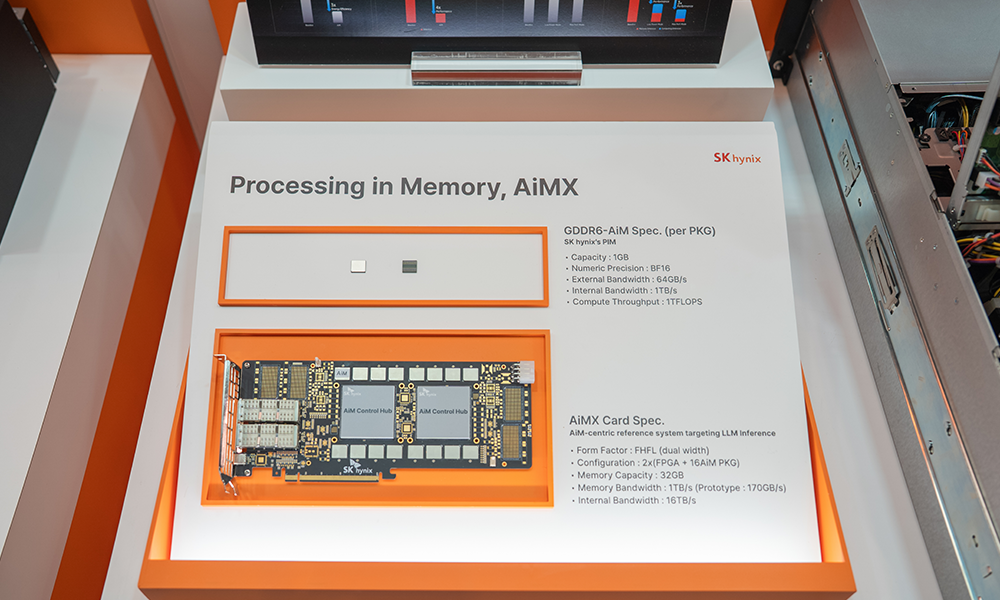

The PIM-based GDDR6-AiM and AiMX products deliver accelerated computational speeds

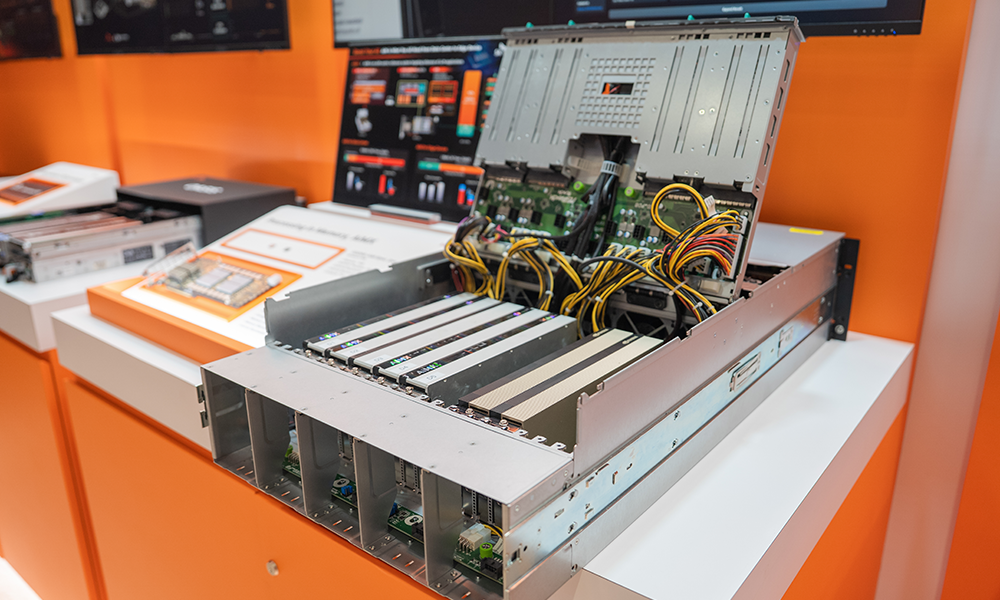

Another demonstration featured PIM⁹ products such as GDDR6-AiM. This product adds computational functions to GDDR6, which offers a data processing speed of 16 gigabits per second (Gbps). When paired with a CPU or GPU, GDDR6-AiM can perform computations up to 16 times faster under specific conditions. SK hynix also demonstrated AiMX¹⁰, a high-performance accelerator card that efficiently handles large-scale data with low power consumption.

⁹Processing-In-Memory (PIM): A next-generation memory technology that integrates computational capabilities into memory, addressing data movement bottlenecks in AI and big data processing.

¹⁰AiM-based Accelerator (AiMX): An advanced accelerator card from SK hynix, powered by GDDR6-AiM chips and purpose-built for large language models (LLMs), AI systems such as ChatGPT that are trained on vast text datasets.

Finally, the memory-centric AI machine demonstration featured a distributed LLM inference system connecting multiple servers and GPUs using pooled memory¹¹. The demonstration showed how data communication over CXL pooled memory can significantly improve the performance of LLM inference systems compared with systems that rely on network communication.

¹¹Pooled memory: A technology that groups multiple CXL memory units into a shared pool, allowing multiple hosts to efficiently allocate and share memory capacity. This approach significantly improves overall memory utilization.

Keynote Speech: Highlighting Leadership in Next-Gen AI Infrastructure

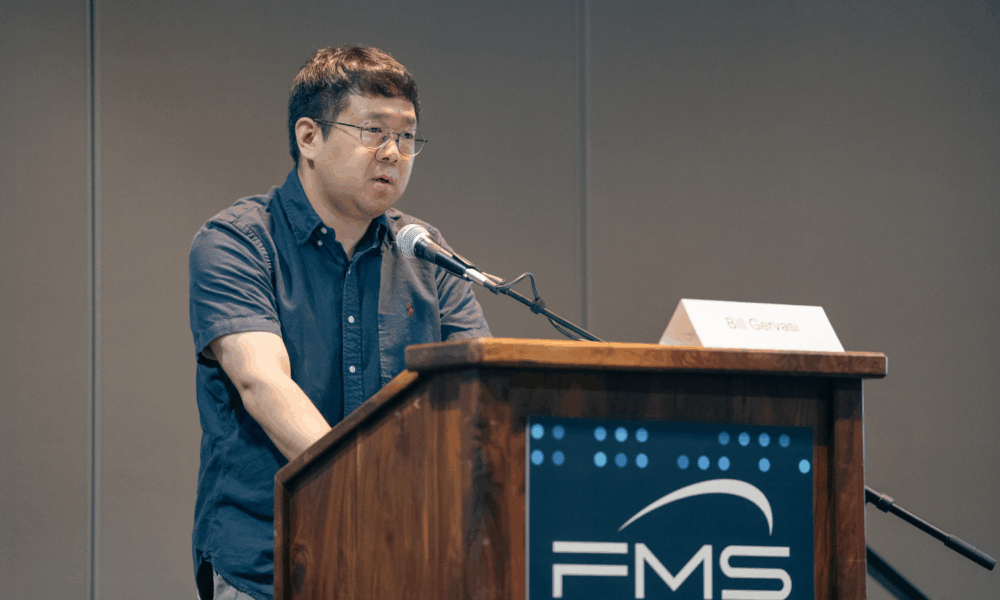

SK hynix employees were key speakers at FMS 2025, delivering a keynote speech, joining an executive AI panel hosted by NVIDIA, and giving a total of 13 presentations. By sharing its industry knowledge through these sessions, the company demonstrated its position as a global leader driving the next-generation AI memory market.

Vice President Chunsung Kim of eSSD Product Development and Vice President Joonyong Choi of HBM Business Planning jointly delivered the keynote address "Where AI Begins: Full Stack Memory Redefining the Future." During the presentation, Kim emphasized how important it is for memory technology to evolve as the AI industry shifts from training to inference. He added that SK hynix's storage solutions are designed to provide fast, reliable data access for data-intensive workloads in AI inference scenarios.

Meanwhile, Choi noted that HBM can meet diverse customer needs because it offers the structural advantages of high bandwidth with low power consumption. He added that SK hynix will deliver a comprehensive memory portfolio optimized for various AI environments, addressing performance and power efficiency, the two critical factors shaping the scalability and TCO¹² of AI systems.

¹²Total cost of ownership (TCO): The complete cost of acquiring, operating, and maintaining an asset, including purchase, energy, and maintenance expenses.

During the three-day event, SK hynix representatives delivered insightful presentations on key topics driving the next-generation AI market. Topics included memory technology and scalable infrastructure; non-volatile and high-efficiency memory advancements; AI infrastructure and LLM training optimization; data analytics and governance; and AI-driven talent management.

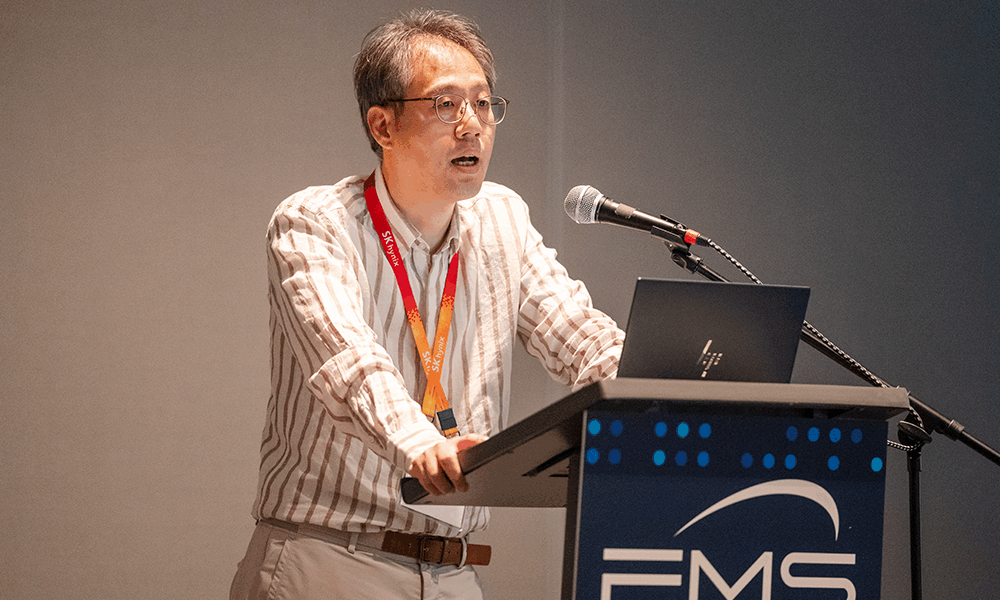

Sunguk Kang, head of SK hynix America’s DRAM Technology, participating in the NVIDIA executive AI panel

Vice President Sunguk Kang, head of SK hynix America's DRAM Technology, joined the executive AI panel discussion "Memory and Storage Scaling for AI Inferencing," hosted and moderated by NVIDIA. During the panel, he provided in-depth commentary on AI technology trends and strategies for cross-industry collaboration. He noted that while raw bandwidth is critical for AI training, AI inferencing requires intelligent memory and storage solutions that go beyond bandwidth alone. Kang also emphasized that ultra-high-performance networking and smart memory technologies can significantly increase AI inferencing throughput.

At FMS 2025, SK hynix underlined its exceptional technological expertise in memory and storage through a diverse array of innovative products. To address the evolving needs of today’s AI-driven society, the company is committed to ongoing investment in research and development, further strengthening its AI memory leadership across both DRAM and NAND technologies.