When Watson, a computer developed by IBM, beat its human competitors on the quiz show Jeopardy! in 2011, many saw it as the beginning of the end for the reign of human intelligence. Watson brought discussions of AI into the mainstream. Its ability to apply machine learning to gather and analyze massive amounts of data in a flash was something most people had thought exclusive to sci-fi.

Quintillions of bytes of data are now generated each day, and the amount of data generated by 2025 is predicted to reach 181 zettabytes. While this volume far exceeds what humans can consume, cloud computing, faster processing, faster networks, and faster chips mean it can be processed and applied efficiently. AI isn’t a pipe dream; it’s a reality.

From Synapses to Circuits

Semiconductors supporting AI functions must make the most of limited space and provide a means of parallel processing for complex tasks. Enter Processing in Memory (PIM) chips. A PIM chip integrates a processor with Random Access Memory (RAM) on a single memory module. This structure removes the boundary between memory and system semiconductors, allowing data storage and data processing to happen in the same place.

By eliminating the need for data to traverse modules, response times are greatly improved, allowing for real-time data processing. More traditional computer architectures, which manage processing and storage in separate modules, often fall prey to latency issues, commonly referred to as the von Neumann bottleneck. Adding processing functions to memory semiconductors presents a unique solution to overcome this long-standing problem.
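To see why keeping computation next to the data helps, consider a toy model of a matrix-vector multiply, a common operation in AI workloads. The sketch below counts how many bytes cross the memory bus when a processor fetches every weight, compared with when each memory bank computes on its own weights and returns only the results. The `MemoryBank` class, the 16-bit value size, and the bank layout are illustrative assumptions, not a description of any real PIM product.

```python
from dataclasses import dataclass

BYTES_PER_VALUE = 2  # assumption: every value is 16 bits wide


@dataclass
class MemoryBank:
    """Hypothetical memory bank that stores weight rows and can compute on them."""
    rows: list  # each entry is one row of weights (a list of numbers)

    def multiply_accumulate(self, vector):
        # Runs "inside" the bank, so the weights never cross the bus.
        return [sum(w * x for w, x in zip(row, vector)) for row in self.rows]


def conventional_matvec(banks, vector):
    """Separate processor: every weight is fetched across the bus, then used."""
    bus_bytes = 0
    result = []
    for bank in banks:
        for row in bank.rows:
            bus_bytes += len(row) * BYTES_PER_VALUE  # weights travel to the processor
            result.append(sum(w * x for w, x in zip(row, vector)))
    return result, bus_bytes


def pim_matvec(banks, vector):
    """PIM-style: send the input vector to each bank, get back only the results."""
    bus_bytes = 0
    result = []
    for bank in banks:
        bus_bytes += len(vector) * BYTES_PER_VALUE   # input vector sent to the bank
        partial = bank.multiply_accumulate(vector)
        bus_bytes += len(partial) * BYTES_PER_VALUE  # partial results sent back
        result.extend(partial)
    return result, bus_bytes


# Example: 4 banks, each holding 64 rows of 256 weights.
banks = [MemoryBank([[1.0] * 256 for _ in range(64)]) for _ in range(4)]
vector = [1.0] * 256

conventional_result, conventional_bytes = conventional_matvec(banks, vector)
pim_result, pim_bytes = pim_matvec(banks, vector)

print("same answer:", conventional_result == pim_result)
print("bus bytes, conventional:", conventional_bytes)
print("bus bytes, PIM-style:", pim_bytes)
```

Both paths produce the same answer; only the traffic differs. The model ignores real-world factors such as caches, command overhead, and timing, so the ratio it prints is illustrative only.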

SK hynix unveiled its next-generation PIM in February 2022 at ISSCC in San Francisco. The GDDR6-AiM (Accelerator in Memory) adds computational functions to GDDR6 memory chips, allowing for data to be processed at speeds of up to 16 Gbps.

GDDR6-AiM is also more energy efficient, reducing power consumption by 80% by removing data movement to the CPU and GPU. Advancing technology in a manner that supports a greener and more equitable world is an integral part of SK hynix’s future vision. GDDR6-AiM can help reduce carbon emissions and shrink the carbon footprint of any technology it’s applied to, advancing SK hynix’s ESG-related goals and expanding its positive impact across its clients’ industries.

While particularly effective in managing the needs of AI-based systems, PIM can be applied to a broad spectrum of technologies. Databases, query engines, data grids, and more all require some version of data storage and processing coupled with custom applications leveraging a variety of inputs.

The next generation of smart memory

Machine Learning vs. Deep Learning

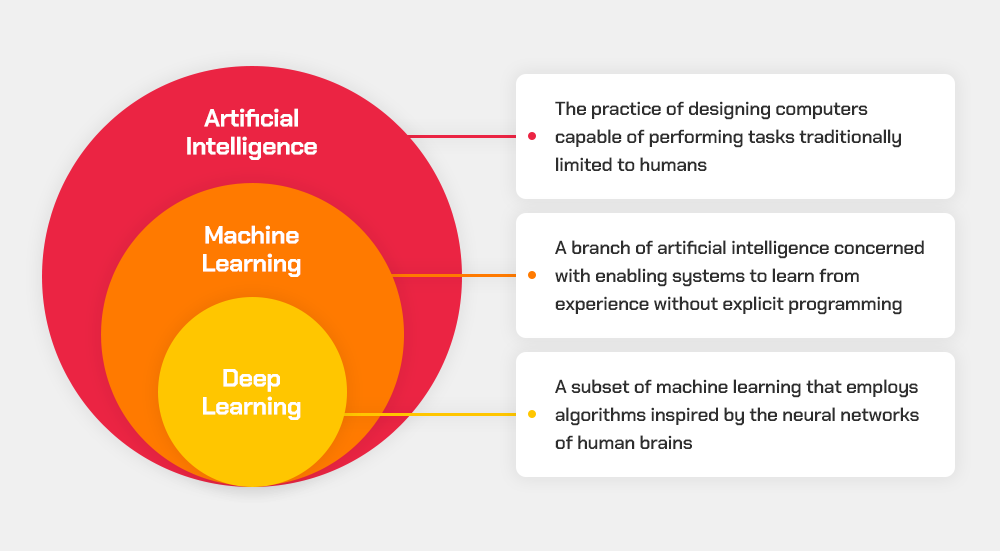

Artificial intelligence is a broad term for the science of creating machines that think like humans. Machine learning refers to techniques that enable computers to perform tasks without explicit programming, and it includes deep learning, a subset that relies on artificial neural networks.

Deep learning can be seen as the most independent form of AI, as it handles both feature extraction and classification. These systems also require vast amounts of data and rely on parallel processing, as their algorithms are largely self-directed once trained.
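To make "without explicit programming" concrete, here is a minimal sketch of a perceptron, a single artificial neuron of the kind that neural networks stack into layers. Nobody writes the rule for logical AND into the code; the neuron adjusts its weights from four labeled examples until its predictions match. The function names, the zero starting weights, and the 20-epoch limit are illustrative choices, and a real deep learning model is far larger and trained quite differently.

```python
def predict(weights, bias, inputs):
    """Fire (1) if the weighted sum of the inputs plus the bias is positive."""
    activation = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(examples, epochs=20):
    """Classic perceptron rule: nudge the weights whenever a prediction is wrong."""
    weights = [0, 0]
    bias = 0
    for _ in range(epochs):
        for inputs, target in examples:
            error = target - predict(weights, bias, inputs)  # -1, 0, or +1
            weights = [w + error * x for w, x in zip(weights, inputs)]
            bias += error
    return weights, bias


# Labeled examples of logical AND: the only "programming" the neuron receives.
AND_EXAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_perceptron(AND_EXAMPLES)
predictions = [predict(weights, bias, inputs) for inputs, _ in AND_EXAMPLES]
print("learned weights:", weights, "bias:", bias)
print("predictions:", predictions)
```

The inner loop is a multiply-and-add over weights and inputs, the kind of arithmetic that deep learning repeats at very large scale.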

AI machines, including deep learning models, are already a part of our lives. There are countless real-world AI applications, and their number is only set to grow. Everything from mobile devices to autonomous vehicles uses AI models for tasks like location-based recommendation, auto-braking, camera-based object classification, and navigation through complex environments.

The art of computationally mimicking human intelligence takes many forms

Overcoming the Challenges

The road to PIM development was not without detours, roadblocks, and congestion. As the technology continues to advance, there are still obstacles to surmount across design, manufacturing, cost, and more.

Designing PIM requires novel approaches to chip structure. Traditional semiconductors do not need to accommodate near-memory queues or perform parallel functions in the way PIM chips do. At the manufacturing stage, space and distance considerations become paramount. It is crucial to reduce how far signals must travel without increasing cost or the risk of thermal issues.

Furthermore, integrated chips such as PIM have an increased dependency on memory – a unique feature that is both a blessing and a curse. Any damage to the memory components could result in compromised data.

With the AI market expected to reach $190 billion by 2025, the time is ripe for investment in AI. According to a Boston Consulting Group and MIT Sloan Management Review study, 83% of businesses say AI is a strategic priority. SK hynix will continue to advance its expertise in the area and aims to lead this growing sector in the years to come.