SK hynix is showcasing its advanced memory solutions for AI and high-performance computing (HPC) at Supercomputing 2024 (SC24), held in Atlanta, U.S., from November 17–22. This annual event, organized by the Association for Computing Machinery and the IEEE Computer Society since 1988, features the latest developments in HPC, networking, storage, and data analysis.

SK hynix’s booth at SC24

Returning for its second year, SK hynix is underlining its AI memory leadership through a display of innovative memory products and insightful presentations on AI and HPC technologies. In line with the conference’s “HPC Creates” theme, which underscores the impact of supercomputing across various industries, the company is showing how its memory solutions drive progress in diverse fields.

Showcasing Advanced Memory Solutions for AI & HPC

At the booth, SK hynix is demonstrating and displaying a range of groundbreaking products tailored for AI and HPC. The products being demonstrated include its CMM (CXL®[1] Memory Module)-DDR5[2], AiMX[3] accelerator card, and Niagara 2.0, among others.

[1] Compute Express Link® (CXL®): A PCIe-based next-generation interconnect protocol on which high-performance computing systems are based.

[2] CXL Memory Module-DDR5 (CMM-DDR5): A next-generation DDR5 memory module utilizing CXL technology to boost bandwidth and performance for AI, cloud, and high-performance computing.

[3] Accelerator-in-Memory Based Accelerator (AiMX): SK hynix’s specialized accelerator card tailored for large language model processing using GDDR6-AiM chips.

Live demonstrations of CMM-DDR5 and AiMX at the booth

The live demonstration of CMM-DDR5 with a server platform featuring Intel® Xeon® 6 processors shows how CXL® memory technology accelerates AI workloads under various usage models. Moreover, visitors to the booth can learn about the latest CMM-DDR5 product with EDSFF[4] which offers improvements in TCO[5] and performance. Another live demonstration features AiMX integrated in an ASRock Rack Server to run Meta’s Llama 3 70B, a large language model (LLM) with 70 billion parameters. This demonstration highlights AiMX’s efficiency in processing large datasets while achieving high performance and low power consumption, addressing the computational load challenges posed by attention layers[6] in LLMs.

[4] Enterprise and Data Center Standard Form Factor (EDSFF): A collection of SSD form factors specifically used for data center servers.

[5] Total cost of ownership (TCO): The complete cost of acquiring, operating, and maintaining an asset, including purchase, energy, and maintenance expenses.

[6] Attention layer: A mechanism that enables a model to assess the relevance of input data, prioritizing more important information for processing.
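
To make the attention-layer note above concrete, here is a minimal sketch of generic scaled dot-product attention for a single query, written in plain Python. It is an illustration only and does not represent how AiMX or Llama 3 implements attention; the function names `softmax` and `attention` and the sample vectors are assumptions chosen for the example.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for one query vector (illustrative only)."""
    dim = len(query)
    # Relevance score of the query against every key.
    scores = [
        sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
        for key in keys
    ]
    weights = softmax(scores)
    # Weighted sum of the value vectors, one output element per column.
    output = [
        sum(w * value[j] for w, value in zip(weights, values))
        for j in range(len(values[0]))
    ]
    return weights, output


# Hypothetical sample data: the query matches the first key more closely.
weights, output = attention(
    query=[1.0, 0.0],
    keys=[[1.0, 0.0], [0.0, 1.0]],
    values=[[1.0, 2.0], [3.0, 4.0]],
)
print(weights, output)
```

Each query has to read every key and every value, so the amount of data touched grows with the length of the input sequence. That memory traffic is the kind of load the demonstration describes.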

SK hynix’s Niagara 2.0, customized HBM, OCS, and SSD products

Among the other technologies being demonstrated is Niagara 2.0. The CXL pooled memory solution enables data sharing to minimize GPU memory shortages during AI inference[7], making it ideal for LLMs. The company is also demonstrating an HBM with near-memory processing (NMP)[8] which accelerates indirect memory access[9], a frequent occurrence in HPC. Developed with Los Alamos National Laboratory (LANL), the solution highlights the potential of NMP-enabled HBM to advance next-generation technologies.

Another demonstration is showcasing SK hynix’s updated OCS[10] solution, which offers significant improvements in analytical performance for real-world HPC workloads compared to the iteration displayed at SC23. Co-developed with LANL, OCS addresses performance issues in traditional HPC systems by enabling storage to independently analyze data, reducing unnecessary data movement and improving resource efficiency. Additionally, the company is demonstrating a checkpoint offloading SSD[11] prototype that improves LLM training resource utilization by enhancing performance and scalability.

[7] AI inference: The process of using a trained AI model to analyze live data for predictions or task completions.

[8] Near-memory processing (NMP): A technique that performs computations near data storage, reducing latency and boosting performance in high-bandwidth tasks like AI and HPC.

[9] Indirect memory access: An addressing method in which an instruction provides the address of a memory location that, in turn, contains the actual address of the desired data or instruction.

[10] Object-based computational storage (OCS): A storage architecture that integrates computation within the storage system, enabling local data processing and minimizing movement to enhance analytical efficiency.

[11] Checkpoint offloading SSD: A storage solution that stores intermediate data during AI training, improving efficiency and reducing training time.
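
Indirect memory access, as defined in the notes above, is easiest to see in a small gather loop. The sketch below is a generic Python illustration, not the LANL or SK hynix workload; the function name `gather_sum` and the sample data are assumptions made for the example.

```python
def gather_sum(values, indices):
    """Sum values[indices[i]] for every i using indirect accesses."""
    total = 0.0
    for index in indices:       # first access: read the stored index
        total += values[index]  # second access: read the data it points to
    return total


# Hypothetical sample data: the indices jump around the values list.
values = [10.0, 20.0, 30.0, 40.0]
indices = [3, 0, 3, 1]
print(gather_sum(values, indices))  # 40 + 10 + 40 + 20 = 110.0
```

Because the location of each value is only known after its index has been read, the accesses are dependent and often scattered across memory. This is the pattern that processing placed near the memory is meant to speed up.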

SK hynix presented various data center solutions, including HBM3E, DDR5, and eSSD products

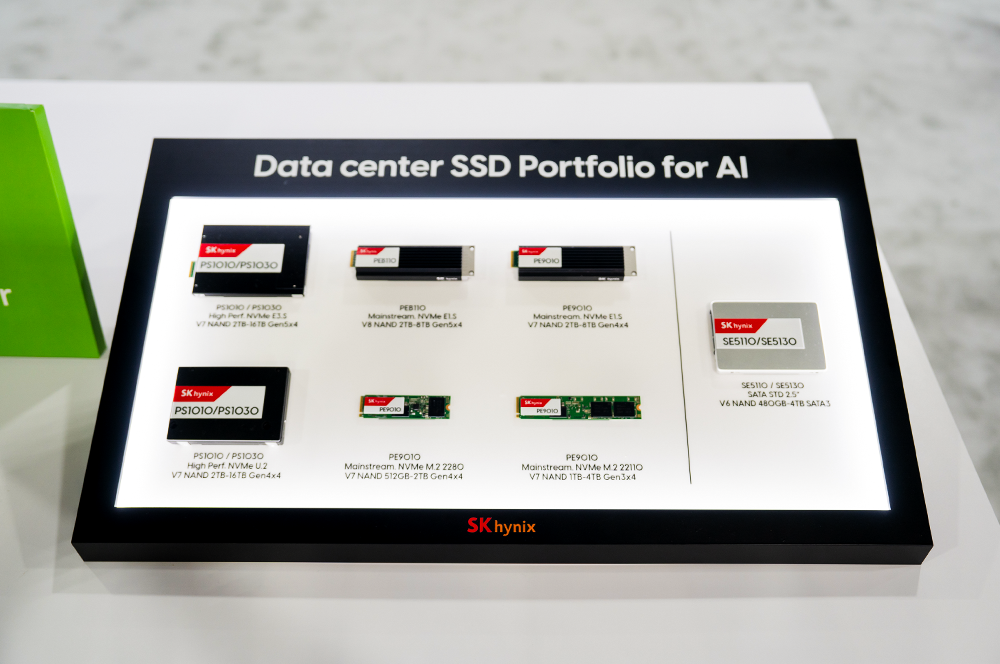

In addition to running product demonstrations, SK hynix is also displaying a robust lineup of data center solutions, including its industry-leading HBM3E[12]. The fifth-generation HBM provides high-speed data processing, optimal heat dissipation, and high capacity, making it essential for AI applications. Alongside HBM3E are the company’s rapid DDR5 RDIMM and MCR DIMM products, which are tailored for AI computing in high-performance servers. Enterprise SSDs (eSSDs) including the Gen 5 PS1010 and PEB110 are also on display. Offering ultra-fast read/write speeds, these SSD solutions are vital for accelerating AI training and inference in large-scale environments.

[12] HBM3E: The fifth-generation High Bandwidth Memory (HBM), a high-value, high-performance product that revolutionizes data processing speeds by connecting multiple DRAM chips with through-silicon via (TSV).

Highlighting the Potential of Memory Through Expert Presentations

During the conference, Jongryool Kim, research director of AI System Infra, presented on “Memory & Storage: The Power of HPC/AI,” highlighting the memory needs for HPC and AI systems. He focused on two key advancements: near-data processing technology using CXL, HBM, and SSDs to improve performance, and CXL pooled memory for better data sharing across systems.

(From first image) Research Director Jongryool Kim presenting on advancements in memory and storage for HPC and AI systems; Technical Leader Jeoungahn Park delivering a presentation on OCS

Technical Leader Jeoungahn Park of the Sustainable Computing team also took to the stage for his talk on “Leveraging Open Standardized OCS to Boost HPC Data Analytics.” Park explained how OCS enables storage to automatically recognize and analyze data, thereby accelerating data analysis in HPC. He also described how OCS enhances resource efficiency and integrates seamlessly with existing analytics systems, and how its analysis performance has been verified in real-world HPC applications.

At SC24, SK hynix is solidifying its status as a pioneer in memory solutions that are driving innovations in AI and HPC technologies. Looking ahead, the company will continue to push technological boundaries with support from its partners to shape the future of AI and HPC.