SK hynix shared its next-generation AI memory strategy and roadmap at the SK AI Summit 2025, held in Seoul on November 3–4. At the summit, held under the theme “AI Now & Next,” the company elevated its vision from becoming a full-stack AI memory provider to embracing a broader role as a full-stack AI memory “creator.”

Hosted annually by SK Group, the event was formerly known as the SK Tech Summit and has been held as the SK AI Summit since 2024, reflecting its greater focus on AI. This year’s event brought together global AI experts and industry professionals to discuss the present and future of AI technologies, as well as their applications across industries.

Among those in attendance were SK Group Chairman Chey Tae-won, SK hynix CEO Kwak Noh-Jung, and SK Telecom CEO Jung Jaihun. They were joined by influential tech leaders including OpenAI CEO Sam Altman and Amazon CEO Andy Jassy, who delivered keynote speeches via video. Furthermore, global tech giants including NVIDIA, Amazon Web Services (AWS), Google, TSMC, and Meta, together with numerous domestic members of the K-AI Alliance¹, participated to discuss collaboration opportunities across the AI ecosystem.

¹ K-AI Alliance: Established by SK Telecom, the K-AI Alliance consists of Korean tech companies that aim to lead the development and global expansion of the Korean AI industry.

The event featured keynote speeches, presentation sessions, and exhibitions, serving as a forum to explore the present and future of AI technology from multiple perspectives. As a leading innovator in AI infrastructure, SK hynix highlighted its technological leadership by presenting a memory technology roadmap for the AI expansion era and outlining its vision for collaboration across the broader industry.

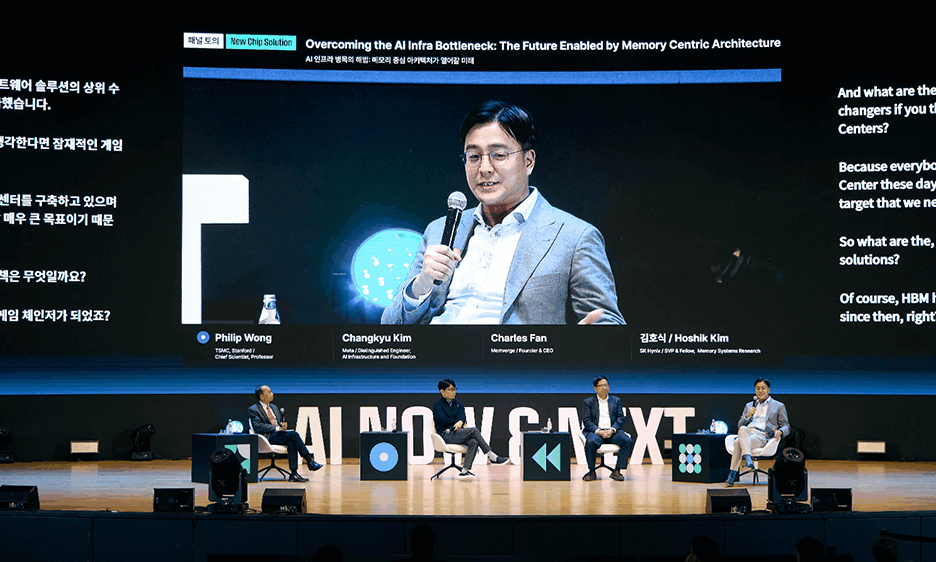

SK hynix CEO Kwak drew significant attention with a keynote speech on the evolving role and development path of the memory industry amid accelerating AI adoption. In addition, Senior Vice President Hoshik Kim of Memory System Research joined a keynote panel session to discuss solutions to AI infrastructure bottlenecks and the importance of memory-centric architectures. In sessions organized by SK hynix, Vice President Kyoung Park of Biz. Insight, Vice President Youngpyo Joo of System Architecture, and Professor Kyusang Lee of the University of Virginia shared insights on next-generation AI memory technologies, reinforcing the company’s technological leadership.

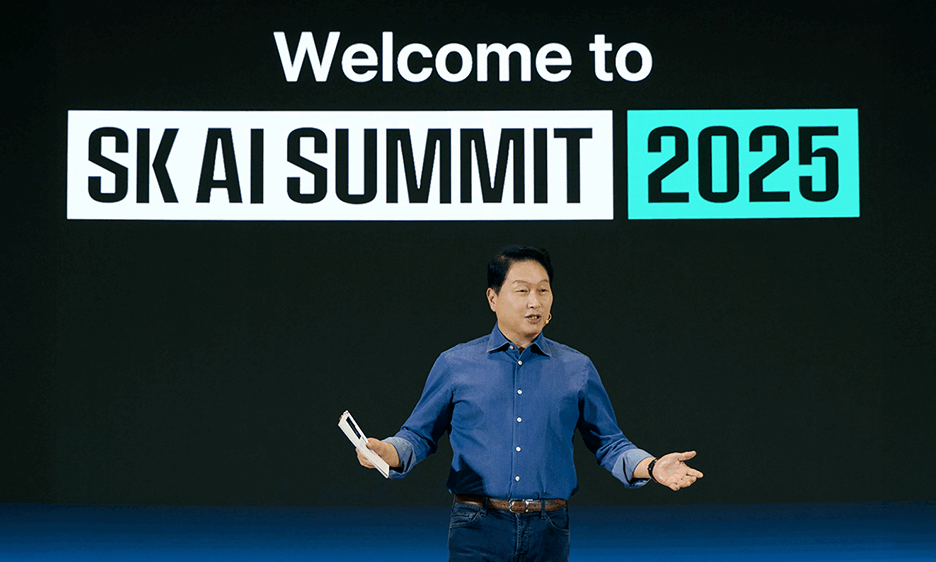

SK Group Chairman Chey Tae-won presents the opening keynote at the SK AI Summit 2025

SK Group Chairman Chey delivered the opening keynote under the theme “AI Now & Next,” setting the discussion around the next stage of the AI era. “The AI industry is now shifting from a race for scale to a race for efficiency,” he said, adding that SK’s role in responding to surging AI demand is to improve the efficiency of AI solutions. Chey emphasized: “The core of SK’s AI strategy is working together with partners — Big Tech, governments, startups — to co-design and deliver the most efficient AI solutions. SK does not compete with its partners but creates AI business opportunities with them to find the most efficient AI solutions.”

(From first image) OpenAI CEO Sam Altman and Amazon CEO Andy Jassy delivering keynote addresses via video

CEO Kwak Presents Memory Vision for Expanding the AI Ecosystem

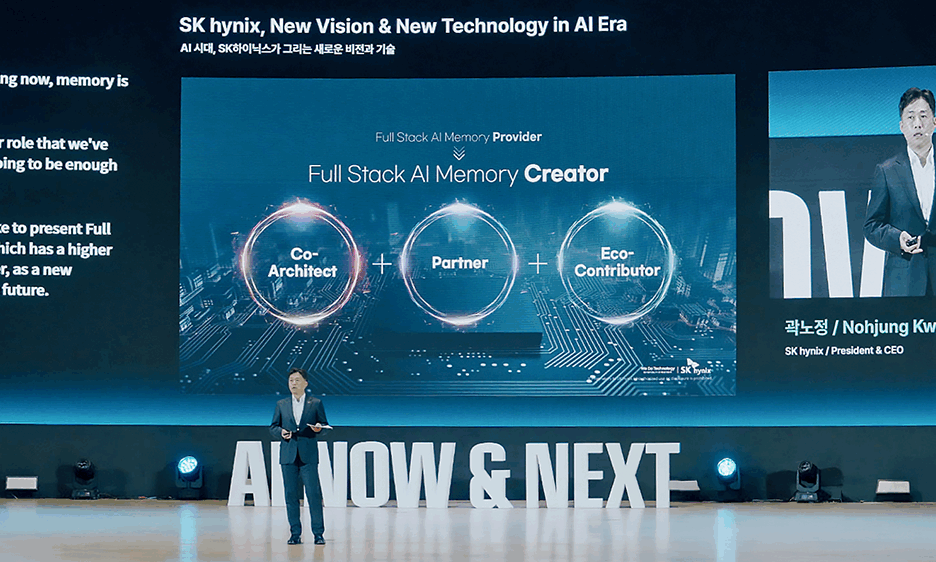

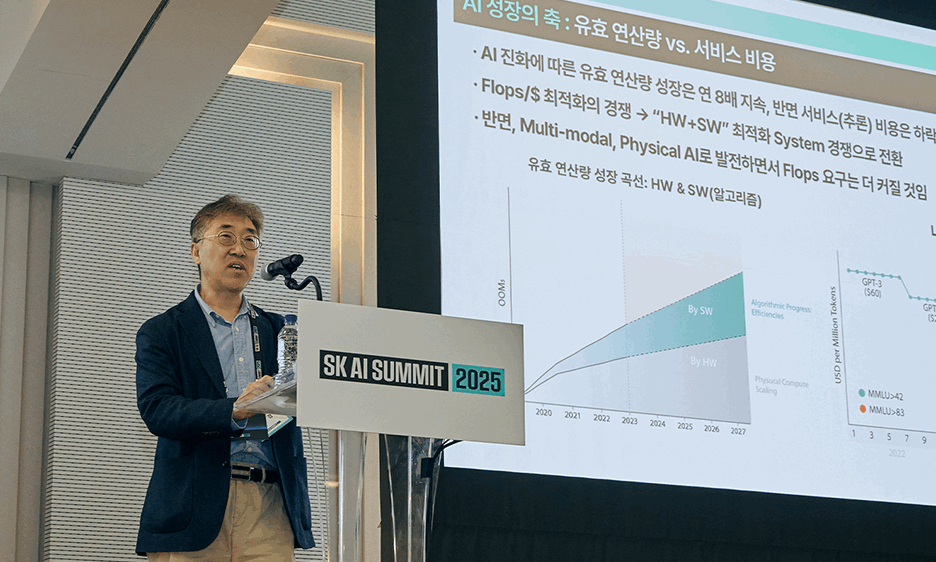

On the first day of the summit, SK hynix CEO Kwak delivered a keynote speech titled “SK hynix, New Vision & New Technology in the AI Era.” Building on the vision presented last year, he refined it to meet the demands of the expanding AI era, providing more details on the directions for technological innovation and ecosystem collaboration.

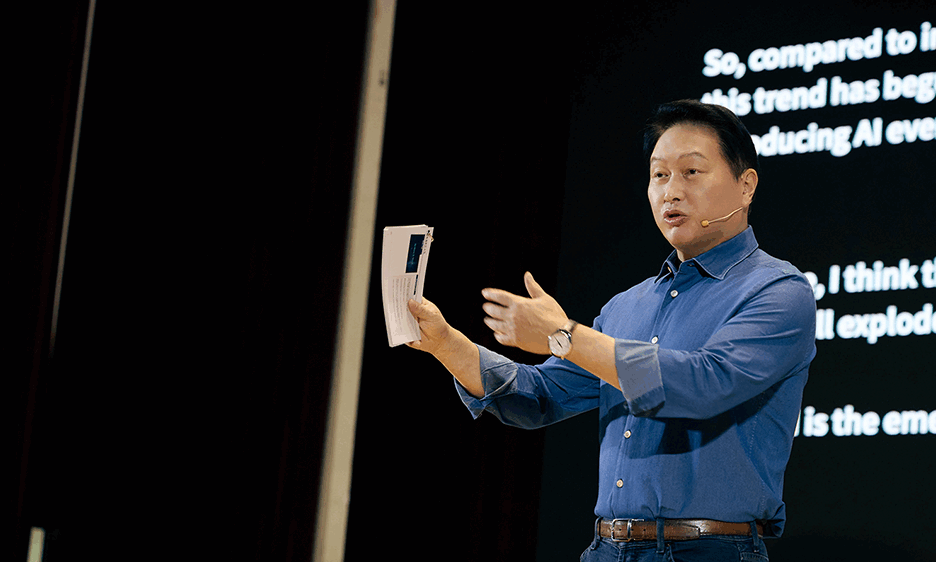

SK hynix CEO Kwak Noh-Jung delivering a keynote speech at the summit

“As the expansion of AI drives greater data movement, it has become crucial to respond by enhancing the performance of hardware,” Kwak stated. “However, the memory wall² has become a major obstacle to technological progress, as memory speeds fail to keep pace with advances in processors. Memory is no longer a simple component; it is becoming a key value product at the core of the AI industry. The issue is that the market’s demands can no longer be met by the same level of performance improvements as before.”

² Memory wall: A bottleneck that occurs when memory access speed lags behind data processor speed.
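The memory wall can be illustrated with a back-of-envelope roofline estimate, in which attainable throughput is capped either by peak compute or by how fast memory can feed the processor. The sketch below is illustrative only: the function names and the hardware figures are hypothetical placeholders, not specifications of any SK hynix or partner product.

```python
def attainable_flops(peak_flops, bandwidth_bytes_per_s, flops_per_byte):
    """Roofline estimate: throughput is capped by compute or by memory traffic."""
    memory_ceiling = bandwidth_bytes_per_s * flops_per_byte
    return min(peak_flops, memory_ceiling)


def is_memory_bound(peak_flops, bandwidth_bytes_per_s, flops_per_byte):
    """True when the memory ceiling sits below the compute ceiling."""
    return bandwidth_bytes_per_s * flops_per_byte < peak_flops


# Hypothetical accelerator (assumed values for illustration only).
PEAK_FLOPS = 1000e12   # 1000 TFLOP/s of peak compute
BANDWIDTH = 4e12       # 4 TB/s of memory bandwidth

# A workload performing 2 FLOPs per byte moved is limited by memory,
# so most of the peak compute sits idle waiting for data.
print(attainable_flops(PEAK_FLOPS, BANDWIDTH, 2.0))
print(is_memory_bound(PEAK_FLOPS, BANDWIDTH, 2.0))
```

In this toy model, raising memory bandwidth lifts the attainable throughput of low-intensity workloads directly, whereas adding compute alone does not.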

Kwak then outlined the company’s redefined vision. “Until now, SK hynix has been on the path to become a full-stack AI memory provider that supplies the products our customers need at the right time,” he said. “Going forward, we aim to evolve into a full-stack AI memory ‘creator’ that solves customers’ problems and collaborates with the ecosystem to create greater value together. As a co-architect, partner and contributor to the ecosystem, SK hynix will design the future of AI infrastructure.”

He then unveiled SK hynix’s full-stack AI memory strategy featuring three pillars: custom HBM³, AI DRAM (AI-D) and AI NAND (AI-N). First, custom HBM is designed to maximize performance by transferring certain compute functions, previously handled by GPUs and ASICs⁴, to the HBM’s base die. This approach addresses evolving AI market demands, which are shifting from general-purpose performance to inference efficiency and total cost of ownership (TCO) optimization. Overall, custom HBM will enhance total system efficiency by improving GPU and ASIC compute efficiency and reducing power consumption for data transfers.

³ High Bandwidth Memory (HBM): A high-value, high-performance memory product which vertically interconnects multiple DRAM chips and dramatically increases data processing speed in comparison to conventional DRAM products. There are six generations of HBM, starting with the original HBM followed by HBM2, HBM2E, HBM3, HBM3E, and HBM4.

⁴ Application-specific Integrated Circuit (ASIC): Also known as a custom semiconductor, an ASIC is an integrated circuit designed for a specific purpose.

Meanwhile, AI-D is a segmented DRAM product lineup optimized to meet the demands of the AI era beyond conventional general-purpose DRAM. SK hynix is developing:

- AI-D O (Optimization): Low-power, high-performance DRAM that supports TCO reduction and operational efficiency for data centers. Example products include MRDIMM, SOCAMM2, and LPDDR5R.

- AI-D B (Breakthrough): Ultra-high-capacity, highly flexible memory solutions designed to overcome the memory wall, such as CXL Memory Module (CMM) and Processing-in-Memory (PIM).

- AI-D E (Expansion): DRAM solutions such as HBM that have applications beyond data centers to fields including robotics, mobility and industrial automation.

Finally, AI-N is a next-generation storage solution lineup that combines high performance, high bandwidth and ultra-high capacity for AI systems. SK hynix is advancing its related NAND technology in three strategic directions, significantly enhancing its competitiveness in AI-targeted storage solutions:

- AI-N P (Performance): Ultra-high-performance SSDs that significantly boost input/output operations per second (IOPS) in large-scale AI inference environments through smaller chunk sizes. They can minimize bottlenecks between AI compute and storage, enhancing processing speed and energy efficiency. SK hynix is redesigning NAND and controllers under a new architecture and plans to release samples by the end of 2026.

- AI-N B (Bandwidth): High-capacity and cost-effective solutions that widen bandwidth by applying HBM-like stacked structures to NAND.

- AI-N D (Density): High-capacity solutions focused on storing large datasets with low power and minimal cost, suitable for AI data storage. These QLC⁵-based solutions aim to achieve petabyte capacity, combining the speed of SSDs with the economy of HDDs.

⁵ Quad-level cell (QLC): A type of memory cell used in NAND flash that stores four bits of data in a single cell. NAND flash is categorized as single-level cell (SLC), multi-level cell (MLC), triple-level cell (TLC), QLC, and penta-level cell (PLC) depending on how many data bits can be stored in one cell.
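The density trade-off behind these cell types follows from simple arithmetic: storing n bits in one cell requires the cell to hold 2^n distinguishable states. The sketch below works through that relationship. The helper names are hypothetical and chosen only for illustration.

```python
# Bits stored per cell for each NAND cell type named in the footnote.
CELL_TYPES = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4, "PLC": 5}


def states_per_cell(bits_per_cell):
    """A cell storing n bits must distinguish 2**n charge states."""
    return 2 ** bits_per_cell


def capacity_ratio(cell_type, baseline="TLC"):
    """Relative capacity for the same number of cells versus a baseline type."""
    return CELL_TYPES[cell_type] / CELL_TYPES[baseline]


for name, bits in CELL_TYPES.items():
    print(name, bits, "bits/cell,", states_per_cell(bits), "states")
```

For the same number of cells, QLC holds 4/3 the data of TLC, at the cost of distinguishing 16 states per cell instead of 8.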

Wrapping up his keynote, Kwak added: “SK hynix will continue to expand its role as a leading AI memory company by forming ‘one-team’ collaborations with global players across the AI industry to drive the next generation of AI.”

Discussing the Future of AI Infrastructure: Sharing Memory-Centric Tech Insights

(From first image) Hoshik Kim of Memory System Research; Kyoung Park of Biz. Insight; Youngpyo Joo of System Architecture; Professor Kyusang Lee of the University of Virginia

Hoshik Kim, Senior Vice President of Memory System Research at SK hynix, participated in a keynote panel session titled “Overcoming the AI Infra Bottleneck: The Future Enabled by Memory-Centric Architecture.” He was joined by David A. Patterson, professor emeritus at the University of California, Berkeley; Philip Wong, professor at Stanford University; Changkyu Kim, distinguished engineer at Meta; and Charles Fan, co-founder and CEO of MemVerge. Senior Vice President Kim discussed the need for memory-centric architectures to scale AI compute performance and explored ways to establish a cooperative ecosystem across the industry.

Vice President Kyoung Park of Biz. Insight at SK hynix delivered a session titled “The Evolution of AI Service Infrastructure and the Role of Memory.” Park noted that AI services are rapidly diversifying, ranging from cloud-based inference on massive models to lightweight models running on edge servers and devices. He emphasized that the role of memory is evolving beyond its traditional role as storage, emerging as a key resource that determines the performance of AI systems. Park also stated that memory technologies will become a critical driver of innovation in AI infrastructure.

Vice President Youngpyo Joo of System Architecture at SK hynix presented on “The Need and Direction of Collaboration With System-Level Partners From the Memory Solution Provider’s Point of View.” He stressed that close collaboration with ASIC and system vendors is essential to implement memory structures optimized for each workload’s requirements. He also proposed an integrated approach that considers structural design, interfaces, power, and thermal characteristics.

Finally, Kyusang Lee, Professor at the University of Virginia, gave a talk titled “Evolution Into Next-Generation CPO-based Connectivity Technology for Implementing Massive Computational Networks.” He explained that current electrical interconnects face structural limitations in bandwidth and energy efficiency in large-scale AI computing networks and highlighted Co-Packaged Optics (CPO) as a key technology to overcome these limitations. He also pointed to micro-LED–based light sources as a potential alternative to address heat and wavelength variation issues associated with laser light sources.

Showcasing AI Memory Aligned With Key Presentations

At the SK Group joint booth operated under the concept of “The Next Depth,” SK hynix showcased a wide range of innovative technologies designed to maximize AI infrastructure performance and efficiency.

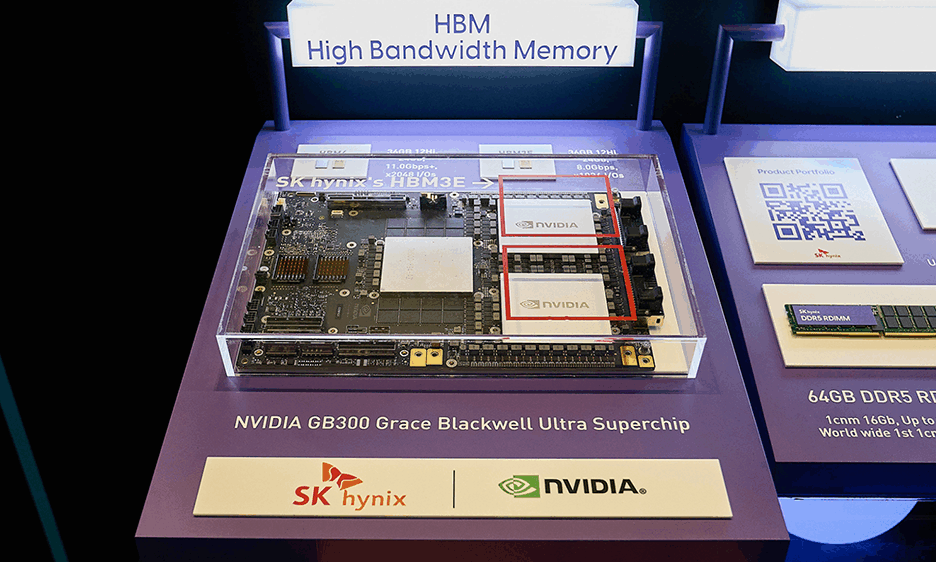

One of the highlights of the company’s display was its 12-layer HBM4, which offers the high bandwidth and power efficiency required for ultra-high-performance AI computing. The company also displayed NVIDIA’s next-generation GB300 Grace™ Blackwell Superchip equipped with SK hynix’s 36 GB HBM3E, the highest-capacity HBM on the market.

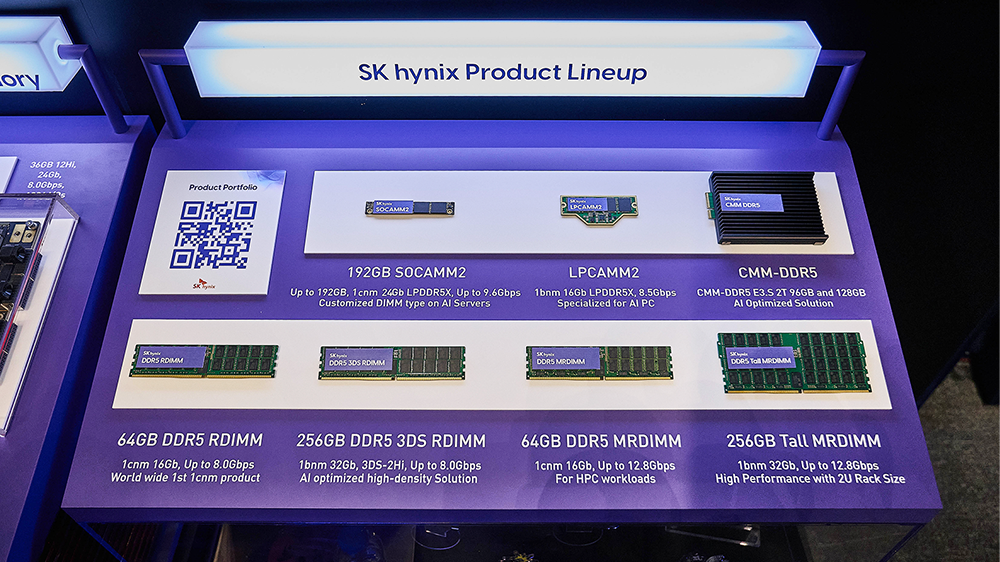

In the DRAM module section, SK hynix presented various next-generation products for AI including RDIMM⁶ and MRDIMM⁷ solutions which leverage the 1c⁸ node. The display also featured its 256 GB 3DS⁹ RDIMM, 256 GB Tall MRDIMM, SOCAMM2¹⁰, LPCAMM2¹¹, and CXL¹² Memory Module (CMM)–DDR5 solutions optimized for platforms ranging from PCs to AI servers.

⁶ Registered Dual In-line Memory Module (RDIMM): A server memory module product in which multiple DRAM chips are mounted on a substrate.

⁷ Multiplexed Rank Dual In-line Memory Module (MRDIMM): A server memory module product with enhanced speed by simultaneously operating two ranks, the basic operating units of the module.

⁸ 1c: The 10 nm DRAM process technology has progressed through six generations: 1x, 1y, 1z, 1a, 1b, and 1c.

⁹ 3D Stacked Memory (3DS): A high-performance memory solution in which two or more DRAM chips are packaged together and interconnected using TSV technology.

¹⁰ Small Outline Compression Attached Memory Module (SOCAMM): A low-power DRAM-based memory module specialized for AI servers.

¹¹ Low Power Compression Attached Memory Module 2 (LPCAMM2): An LPDDR5X-based module solution that offers power efficiency and high performance as well as space savings. It delivers performance levels equivalent to two DDR5 SODIMMs.

¹² Compute Express Link (CXL): A next-generation solution designed to integrate CPU, GPU, memory and other key computing system components, enabling unparalleled efficiency for large-scale and ultra-high-speed computations. Applying CXL to existing memory modules can expand capacity by more than 10 times.

The memory-centric AI machine using the CXL pooled memory (Niagara 2.0) solution

SK hynix also introduced a distributed Large Language Model (LLM) inference system connecting multiple servers and GPUs using pooled memory¹³, highlighting a shift to memory-centric architectures. The demonstration showed how the performance of LLM inference systems could be enhanced by using CXL pooled memory-based data communication rather than traditional network methods.

¹³ Pooled Memory: A technology that groups multiple CXL memory units into a shared pool, allowing multiple hosts to efficiently allocate and share memory capacity. This approach significantly improves overall memory utilization.
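The utilization benefit of pooling can be seen in a toy model in which several hosts draw from one shared capacity instead of each owning a fixed slice. The sketch below is a simplified illustration, not a CXL API or a description of the Niagara 2.0 design. The `MemoryPool` class, the host names, and the capacities are all hypothetical.

```python
class MemoryPool:
    """Toy shared pool: hosts allocate from one common capacity."""

    def __init__(self, capacity_gb):
        self.capacity_gb = capacity_gb
        self.allocations = {}  # host name -> GB currently held

    @property
    def free_gb(self):
        return self.capacity_gb - sum(self.allocations.values())

    def allocate(self, host, size_gb):
        """Grant the request only if the shared pool has room."""
        if size_gb > self.free_gb:
            return False
        self.allocations[host] = self.allocations.get(host, 0) + size_gb
        return True

    def release(self, host):
        """Return everything a host holds to the pool."""
        self.allocations.pop(host, None)


# Two hosts share 512 GB. With a fixed 256 GB per host, the 300 GB
# request from host-a could not be served even while host-b sits idle.
pool = MemoryPool(capacity_gb=512)
print(pool.allocate("host-a", 300))
print(pool.allocate("host-b", 100))
print(pool.free_gb)
```

A real pooled-memory system also has to handle coherence, latency, and fault isolation, which this capacity-only model leaves out.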

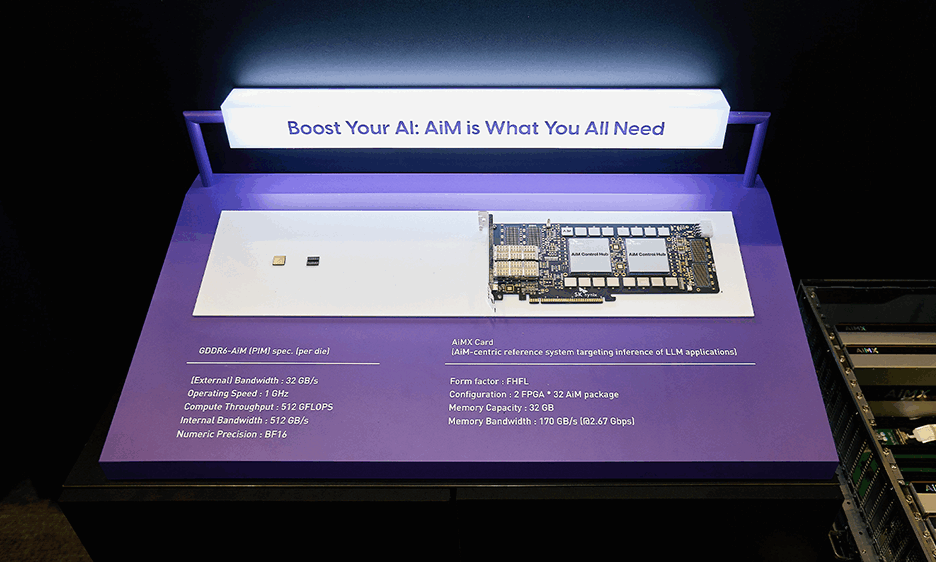

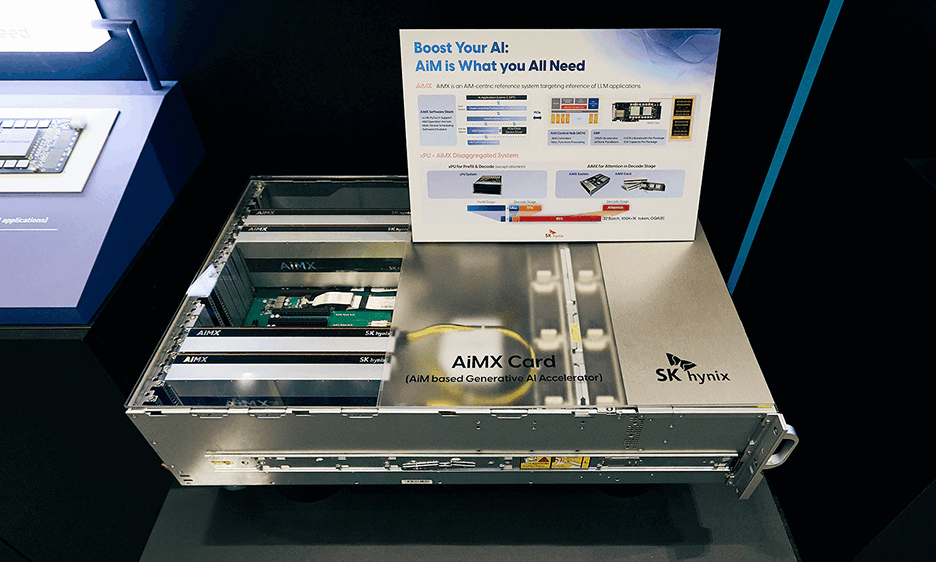

GDDR6-AiM and AiMX showcased by SK hynix

A separate demonstration highlighted how AiMX¹⁴ is optimized for attention¹⁵ operations, the core computation in LLMs. In a server equipped with AiMX cards and NVIDIA H100 GPUs, AiMX significantly enhanced the speed and efficiency of memory-bound¹⁶ workloads. AiMX also improves the efficiency of KV-cache¹⁷ utilization during long question-and-answer sequences, alleviating memory bottlenecks.

¹⁴ AiM-based Accelerator (AiMX): SK hynix’s accelerator card featuring GDDR6-AiM chips and specialized for large language models (LLMs), AI systems such as ChatGPT trained on massive text datasets.

¹⁵ Attention: An algorithmic technique that dynamically determines which parts of input data to focus on.

¹⁶ Memory-bound: A situation in which system performance is limited not by the processor’s computational power but by memory access speed, causing delays while waiting for data access.

¹⁷ Key-Value (KV) cache: A technology that stores and reuses previously computed Key and Value vectors, preserving context from earlier inputs to reduce redundant calculations and respond more efficiently.
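The relationship between attention and the KV cache can be sketched in a few lines. The example below is a minimal single-head illustration in pure Python, assuming the query, key, and value vectors have already been computed for each token. It does not represent how AiMX or any production LLM runtime implements the operation, and the `KVCache` class and `attend` function are hypothetical names.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Scaled dot-product attention for one query over all given positions."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]


class KVCache:
    """Keeps earlier Key and Value vectors so they are not recomputed."""

    def __init__(self):
        self.keys = []
        self.values = []

    def step(self, query, key, value):
        # Only the new token's key and value are appended; older ones are reused.
        self.keys.append(key)
        self.values.append(value)
        return attend(query, self.keys, self.values)


cache = KVCache()
first = cache.step([1.0, 0.0], [1.0, 0.0], [1.0, 2.0])
second = cache.step([0.0, 1.0], [0.0, 1.0], [3.0, 4.0])
print(first, second)
```

Each step reads every cached key and value but performs little arithmetic per byte, which is why long sequences tend to make this stage memory-bound.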

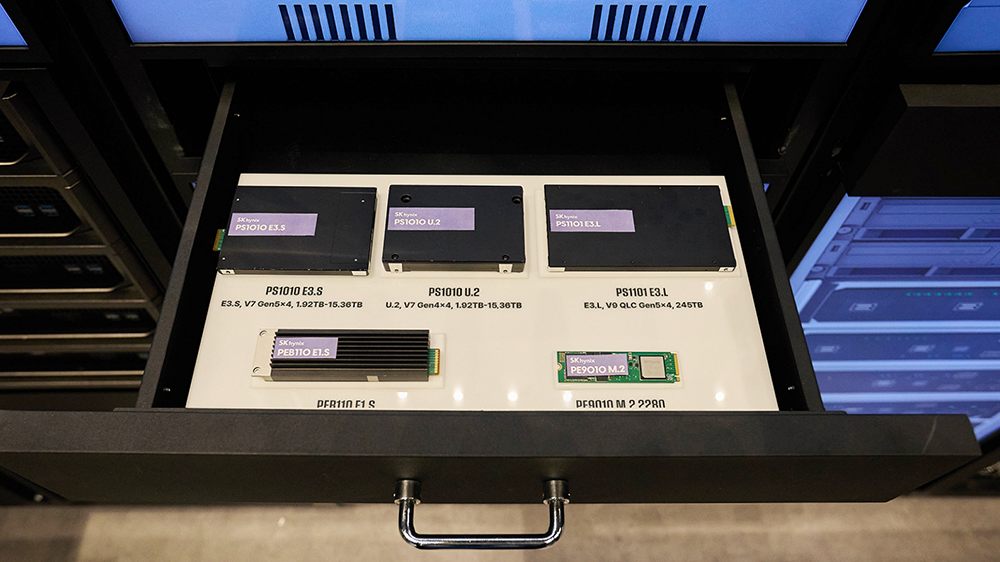

SK hynix also introduced its high-performance eSSD¹⁸ lineup including PS1010 E3.S, PEB110 E1.S, and PS1101 E3.L. Optimized for computing and storage servers, the eSSD portfolio enhances data processing efficiency in AI service infrastructures and storage utilization in large-scale computing environments.

¹⁸ Enterprise Solid-State Drive (eSSD): An enterprise-grade SSD used in servers and data centers.

In the AIX zone, visitors could check out the wafer notch angle detection system. This system applies the YOLOv8-OBB deep learning model inside semiconductor chambers¹⁹ to detect wafer notches in real time with high precision. It drew attention for its ability to overcome the limitations of conventional sensor-based methods, prevent process defects in advance, and reduce equipment maintenance costs.

¹⁹ Semiconductor chamber: A sealed environment within semiconductor manufacturing equipment where specific processes are performed. For example, in the etching process, the chamber serves as the space where semiconductor wafers are processed. Semiconductor manufacturing equipment typically consists of multiple chambers that perform various tasks throughout the production process.

At the SK AI Summit 2025, SK hynix unveiled a bold new vision built on collaboration and innovation which will take its AI memory to new heights. “SK hynix will pioneer the future of AI memory together with our customers, partners and the broader ecosystem,” said SK hynix CEO Kwak. “We place customer satisfaction as our highest priority and will generate greater synergy with our global partners to lead the next era of AI.”