SK hynix showcased advanced memory technologies for the era of AI and high-performance computing (HPC) at Supercomputing 2025 (SC25), held in St. Louis, U.S., from November 16 to 21.

Held annually since 1988, SC is the world’s largest HPC conference. It brings together experts from industry, academia, and research institutions to share the latest trends, create opportunities for collaboration, and discuss the future direction of technological advancement. At this year’s event, the convergence of AI and HPC emerged as a central topic.

Under the theme “Memory, Powering AI and Tomorrow,” SK hynix presented its innovative memory lineup set to lead the AI and HPC markets and shared a new technological vision to accelerate data analysis in computing systems.

Visitors exploring the SK hynix booth

Innovative Products and Tech Driving AI and HPC Performance

At the event, SK hynix presented core products including HBM¹, DRAM, and eSSD² solutions. Visitors to the booth could also see demonstrations of the products in AI and HPC environments, highlighting the company’s technological competitiveness.

¹ High Bandwidth Memory (HBM): A high-value, high-performance memory product that vertically interconnects multiple DRAM chips to increase capacity and dramatically boost data processing speeds. There are six generations of HBM, starting with the original HBM followed by HBM2, HBM2E, HBM3, HBM3E, and HBM4.

² Enterprise Solid-State Drive (eSSD): An enterprise-grade SSD used in servers and data centers.

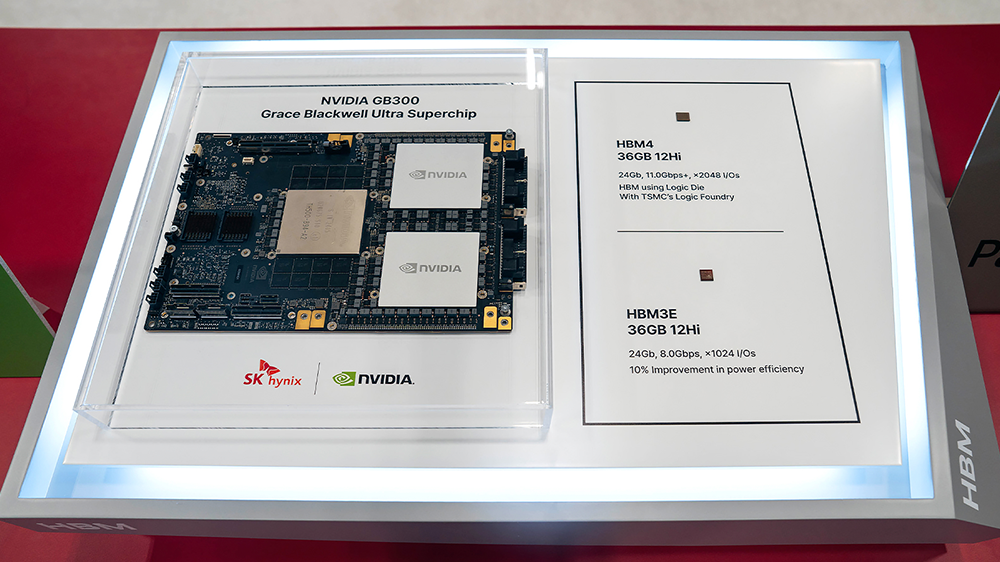

At the front of the booth, SK hynix displayed its latest HBM products, including the 12-layer HBM4, which it developed as a world first in September 2025. HBM4 features 2,048 input/output (I/O) channels, twice as many as the previous generation, delivering a significant increase in bandwidth. With a power efficiency improvement of more than 40% as well, HBM4 is the most suitable solution for ultra-high-performance AI computing systems.
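The link between I/O count and bandwidth can be illustrated with a short calculation. This is only a sketch: it assumes each I/O carries one bit per transfer, and the per-pin data rate is a placeholder chosen for illustration, not a figure from the article. The previous-generation I/O count of 1,024 follows from the "twice" statement above.

```python
def peak_bandwidth_gb_per_s(io_count: int, pin_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s = I/O count x per-pin rate (Gb/s) / 8 bits per byte."""
    return io_count * pin_rate_gbps / 8


# ASSUMPTION: illustrative per-pin data rate, not stated in the article.
ASSUMED_PIN_RATE_GBPS = 8.0

prev_gen = peak_bandwidth_gb_per_s(1024, ASSUMED_PIN_RATE_GBPS)  # half of HBM4's I/O count
hbm4 = peak_bandwidth_gb_per_s(2048, ASSUMED_PIN_RATE_GBPS)

print(f"1,024 I/Os: {prev_gen:.0f} GB/s")
print(f"2,048 I/Os: {hbm4:.0f} GB/s")
print(f"Ratio at the same pin rate: {hbm4 / prev_gen:.1f}x")
```

At any fixed per-pin rate, doubling the I/O count doubles the peak figure. Real products may also change the per-pin rate between generations, which this sketch deliberately holds constant.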

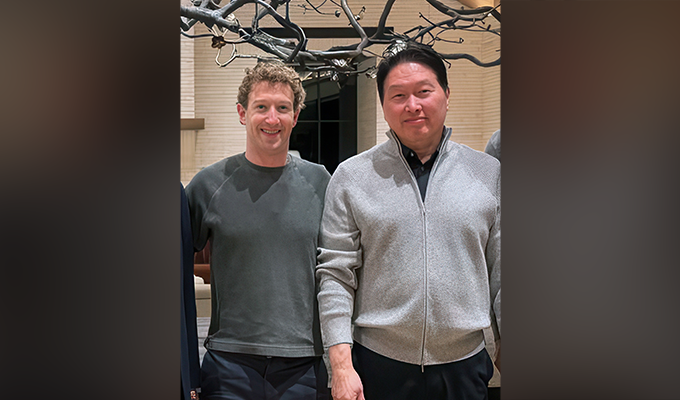

In addition, the 12-layer HBM3E, the highest-performing HBM currently in commercial production, was presented with NVIDIA’s next-generation GB300 Grace™ Blackwell GPU.

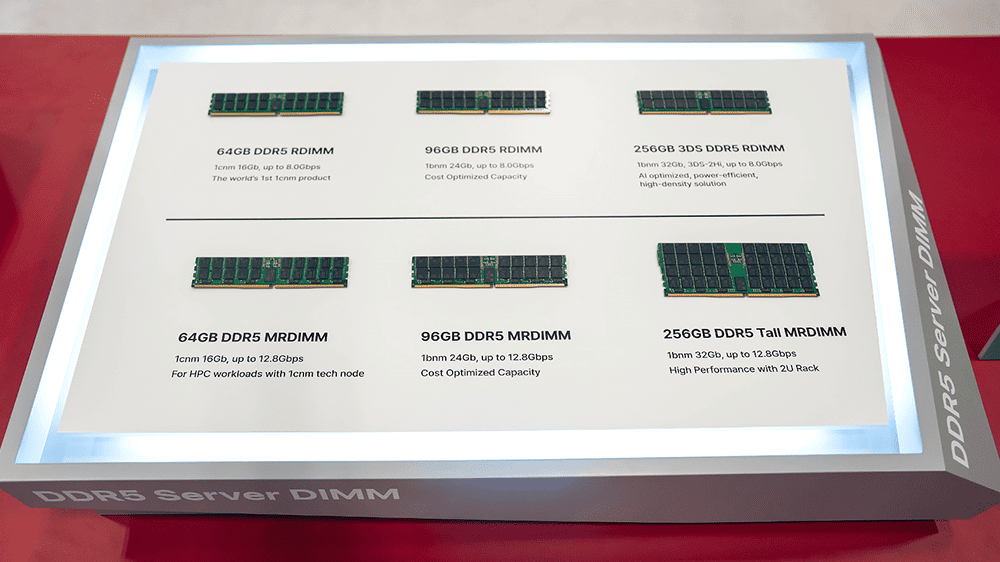

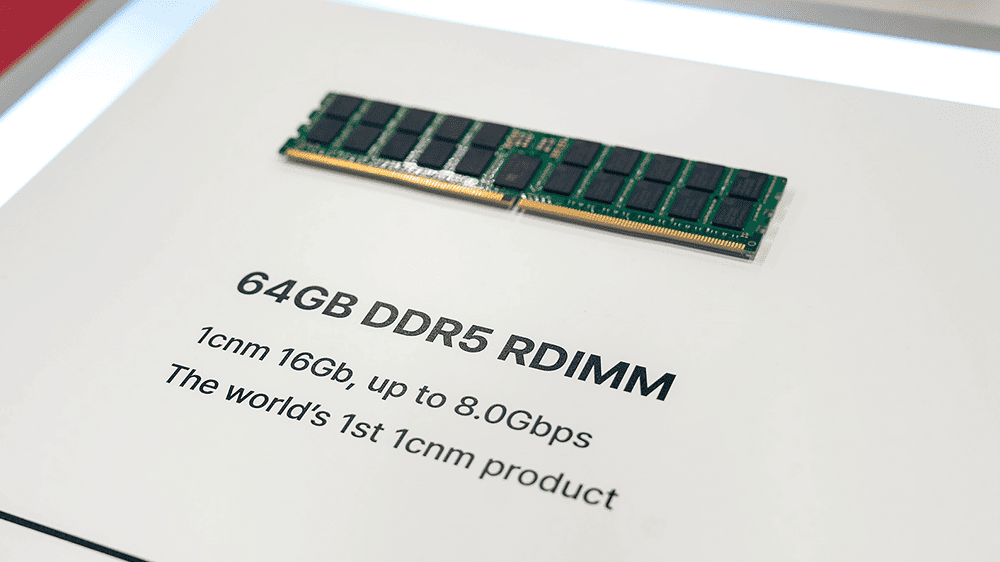

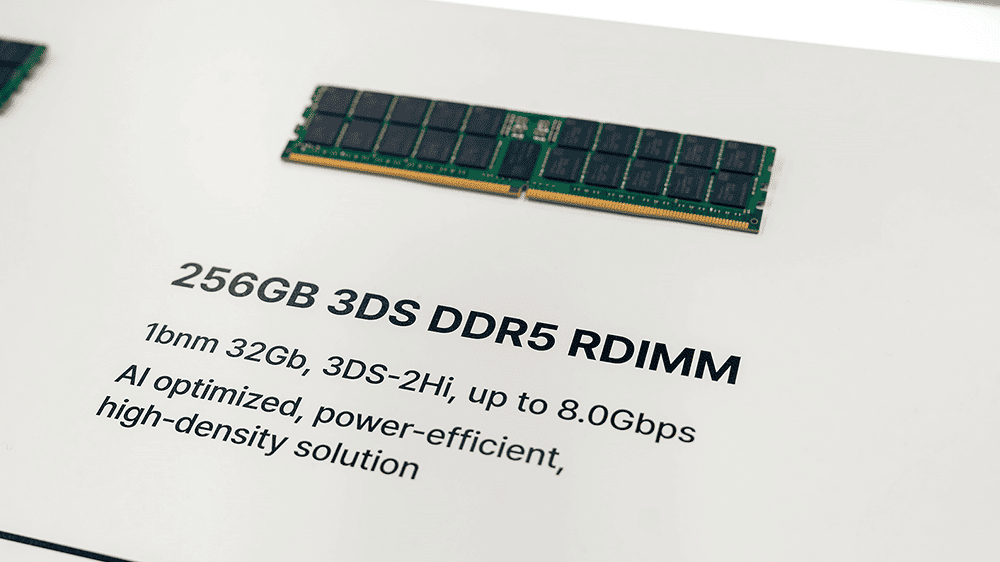

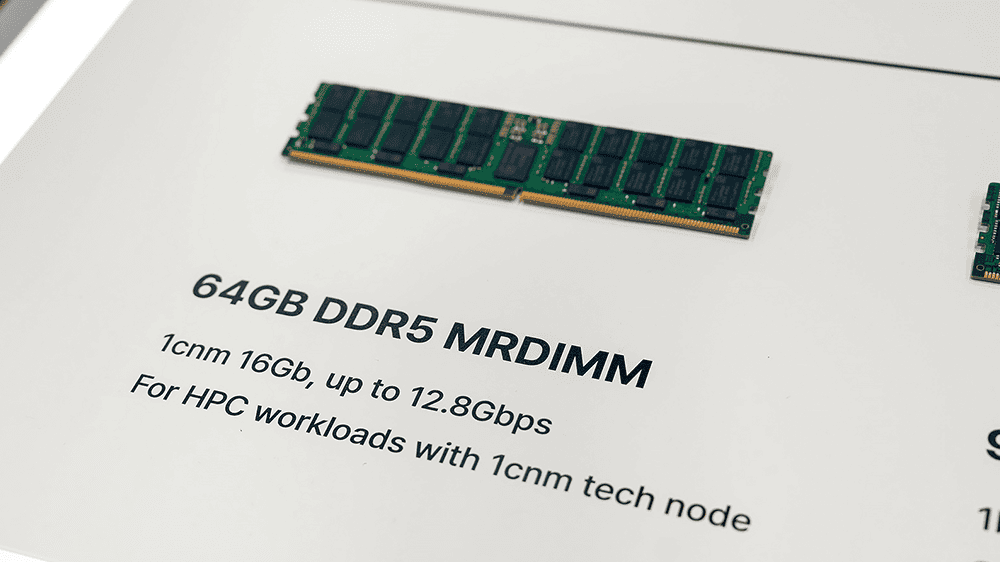

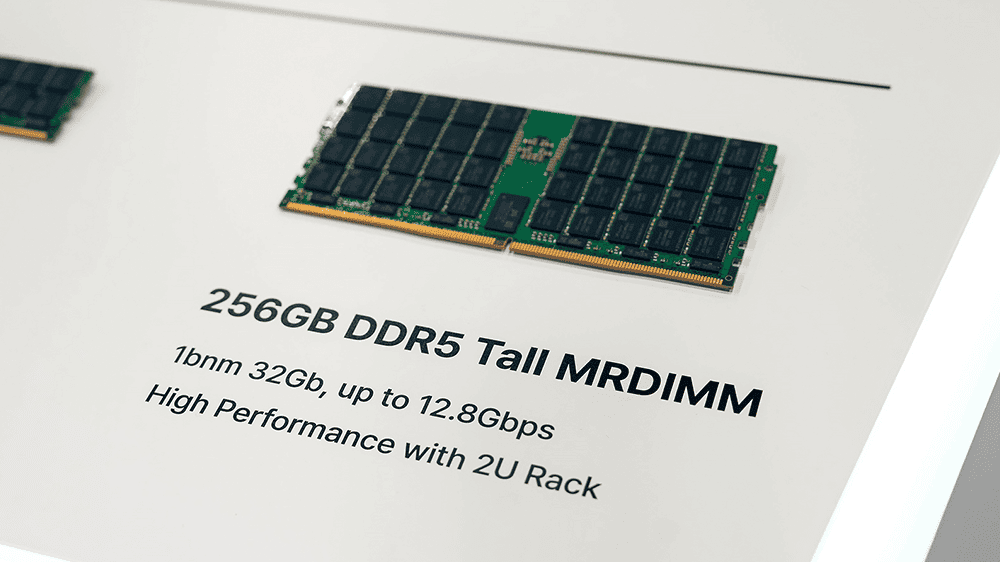

A range of advanced DDR5-based server DRAM modules

The DRAM section featured DDR5-based modules for the next-generation server market. These included RDIMM³ and MRDIMM⁴ products that leverage the 1c⁵ node, the sixth generation of the 10 nm process technology, as well as 256 GB 3DS⁶ DDR5 RDIMM and 256 GB DDR5 Tall MRDIMM. These solutions deliver faster speeds and greater power efficiency in high-performance system environments, supporting stable operation of servers and data centers.

³ Registered Dual In-line Memory Module (RDIMM): A server memory module product in which multiple DRAM chips are mounted.

⁴ Multiplexed Rank Dual In-line Memory Module (MRDIMM): A product with enhanced speed achieved by simultaneously operating two ranks, the basic operating units of the module.

⁵ 1c: The 10 nm process technology has progressed through six generations: 1x, 1y, 1z, 1a, 1b, and 1c.

⁶ 3D Stacked Memory (3DS): A high-performance memory in which two or more DRAM chips are interconnected using TSV technology.

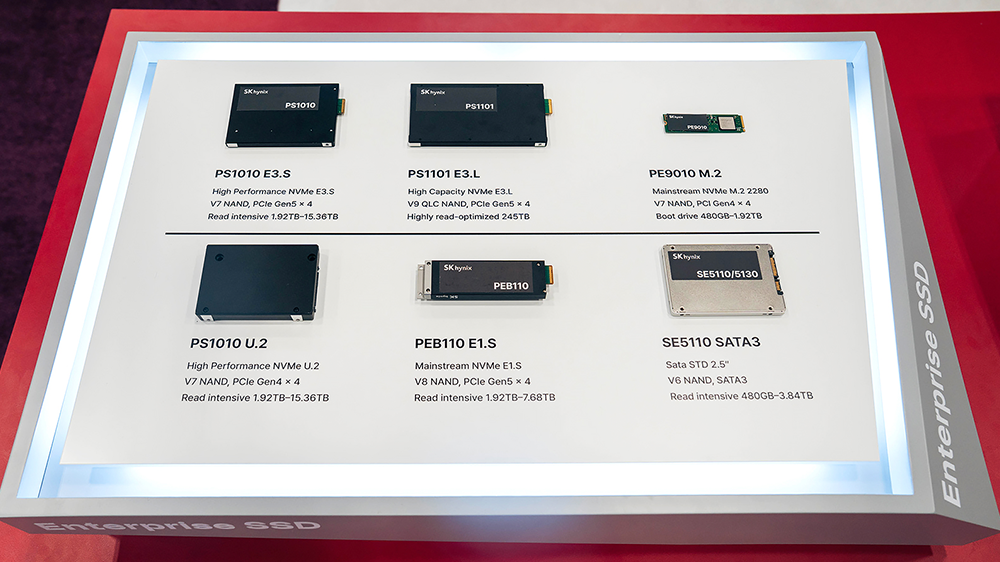

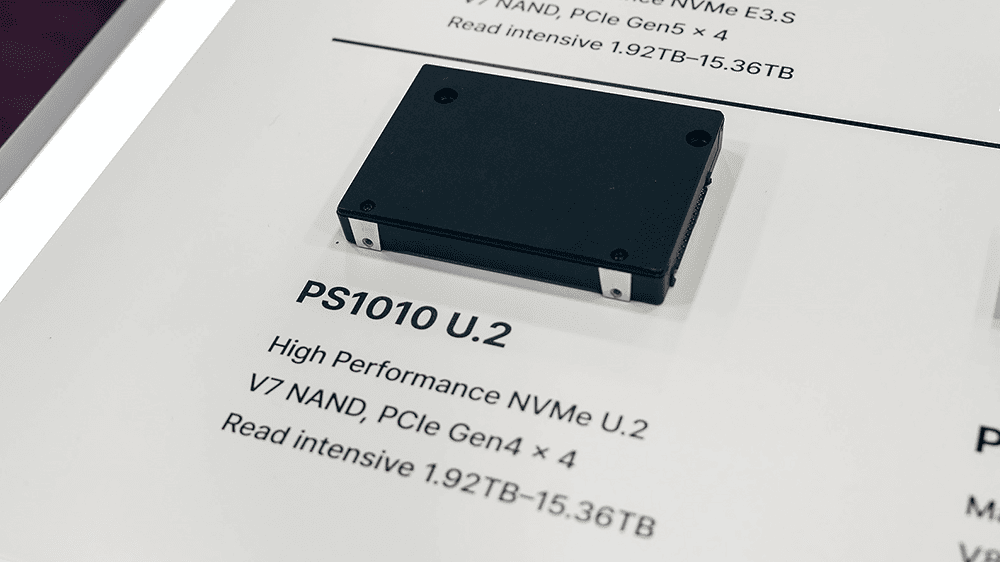

High-performance eSSD products offering large capacity

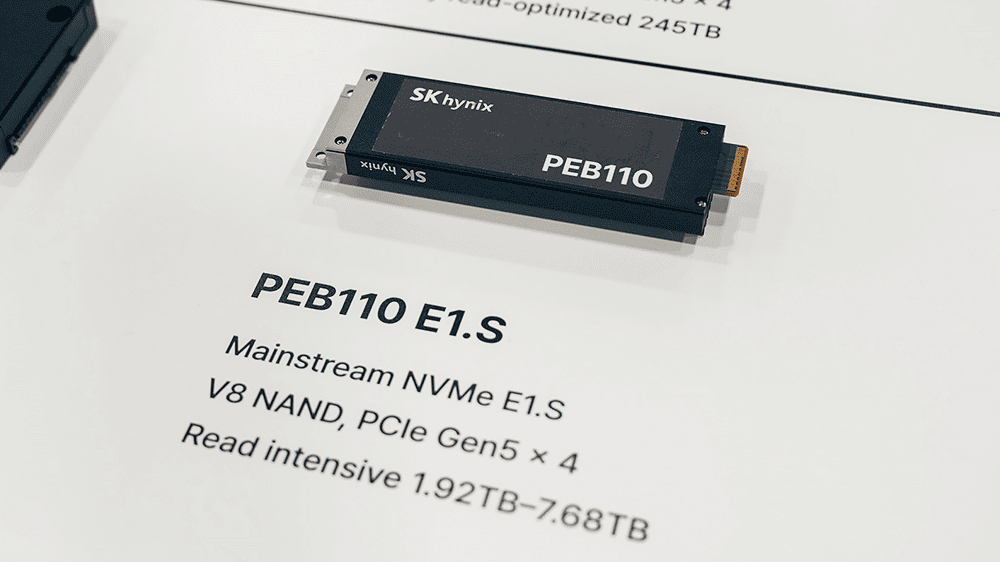

SK hynix also presented a range of high-capacity and high-performance eSSDs. Among the solutions on display were PS1010 E3.S and PE9010 M.2 based on 176-layer 4D NAND, along with PEB110 E1.S built on 238-layer NAND. The section also featured PS1012 U.2 based on QLC⁷ NAND, as well as the 245 TB PS1101 E3.L built on the industry’s highest 321-layer QLC NAND.

⁷ Quad-level cell (QLC): NAND flash is classified based on how many data bits can be stored in one cell, the smallest unit of storage. NAND flash is categorized as single-level cell (SLC), multi-level cell (MLC), triple-level cell (TLC), QLC, and penta-level cell (PLC).
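The cell classification above maps directly to a bit count per cell, and each extra bit doubles the number of charge states a cell must distinguish. A minimal sketch of that relationship follows; the dictionary and function names are illustrative only.

```python
# Bits stored per cell for each NAND cell type named in the footnote.
CELL_TYPES = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4, "PLC": 5}


def charge_states(bits_per_cell: int) -> int:
    """A cell storing n bits must distinguish 2**n charge states."""
    return 2 ** bits_per_cell


for name, bits in CELL_TYPES.items():
    print(f"{name}: {bits} bit(s) per cell, {charge_states(bits)} states")
```

This is why QLC packs more capacity into the same number of cells than TLC: four bits per cell instead of three, at the cost of telling 16 states apart rather than 8.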

These products not only provide large capacity but also support rapid data processing through the high-speed I/O interfaces PCIe 4.0 and 5.0. In addition, SK hynix presented its storage portfolio supporting a wide range of server environments, including SE5110 which uses the SATA3 interface commonly applied in entry-level servers and PCs.

Alongside product exhibits, SK hynix also conducted demonstrations of next-generation solutions, highlighting their applications in future technologies. First, a heterogeneous memory-based system composed of CXL⁸ Memory Module-DDR5 (CMM-DDR5) and MRDIMM was demonstrated in collaboration with semiconductor design specialist Montage Technology. This system highlighted the scalability of memory capacity as well as the improvements in overall system performance.

⁸ Compute Express Link (CXL): An interface technology that efficiently connects memory, processors, and other components in a system, extending the limits of bandwidth and capacity.

Another demonstration featured the CXL Memory Module Accelerator (CMM-Ax), which integrates compute capabilities into memory, showing its potential for integration with Meta’s vector search engine, Faiss⁹. The successful application of CMM-Ax in SK Telecom’s Petasus AI Cloud further underscored its prospective use in future AI infrastructure.

⁹ Facebook AI Similarity Search (Faiss): A vector search engine developed by Meta. Unlike traditional keyword-based search, which requires exact terms, it captures the meaning and context of queries using vectors (numerical representations of data such as text or images) and returns highly relevant results.
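The idea of vector search can be shown with a brute-force nearest-neighbor lookup. The sketch below is not Faiss and does not use its API; it is plain Python with made-up three-dimensional vectors standing in for real embeddings, and all names in it are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; values near 1.0 mean similar direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def search(query, index, k=2):
    """Return the labels of the k stored vectors most similar to the query."""
    scored = [(cosine_similarity(query, vec), label) for label, vec in index.items()]
    scored.sort(reverse=True)
    return [label for _, label in scored[:k]]


# Hypothetical toy embeddings; real systems use hundreds of dimensions.
INDEX = {
    "cat photo": [0.9, 0.1, 0.0],
    "dog photo": [0.8, 0.3, 0.1],
    "tax form": [0.0, 0.2, 0.9],
}

results = search([1.0, 0.2, 0.0], INDEX, k=2)
print(results)
```

The query shares no keywords with the stored items, yet the two animal photos rank first because their vectors point in a similar direction. Comparing a query against every stored vector is memory-bound work, which is the kind of load that compute placed near memory is meant to help with.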

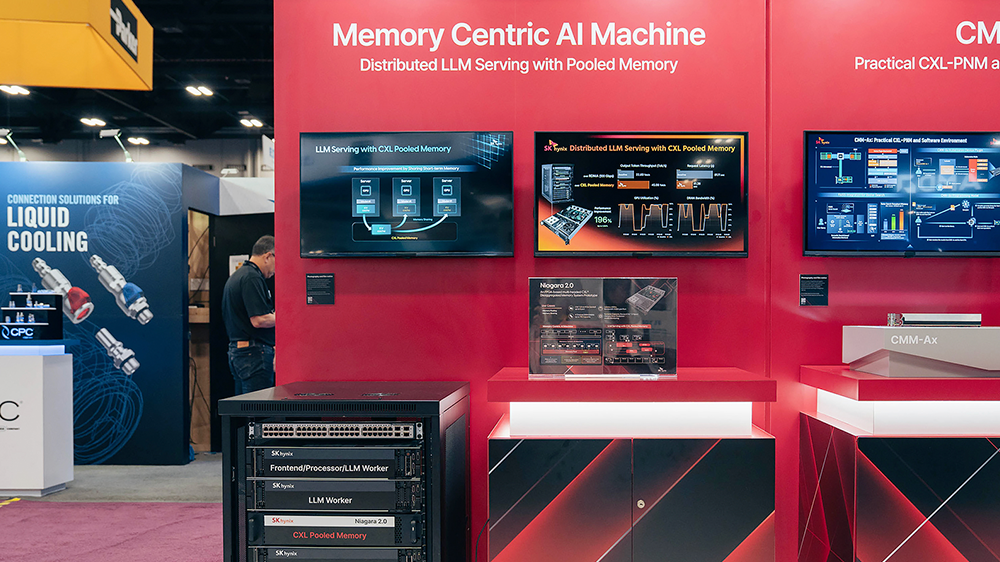

In addition, a memory-centric AI machine based on CXL pooled memory¹⁰ was demonstrated, connecting multiple servers and GPUs without a network and supporting distributed inference tasks for large language models (LLMs).

¹⁰ CXL Pooled Memory: A technology that allows multiple hosts (CPUs and GPUs) to share memory capacity and data, improving efficiency.

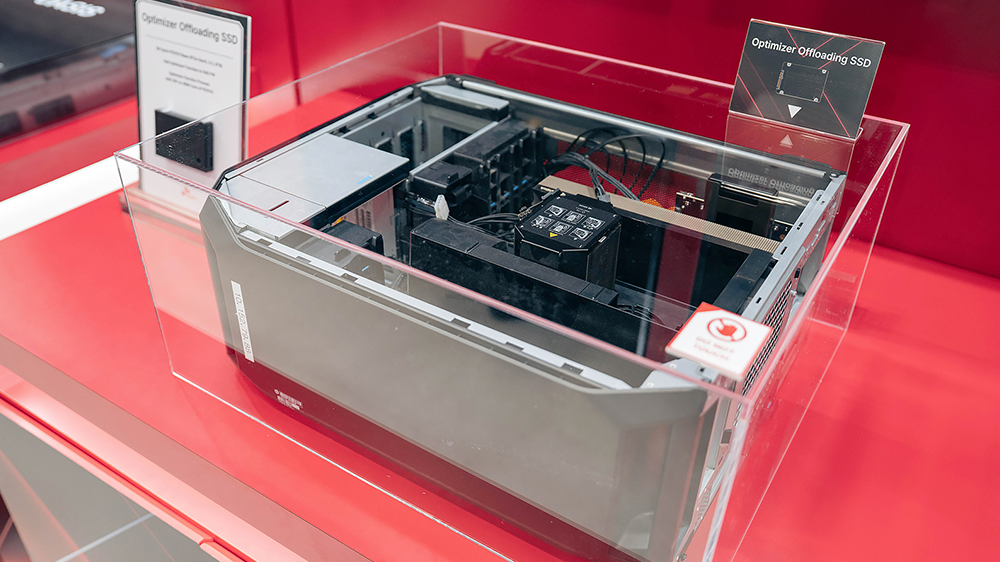

SK hynix also demonstrated OASIS (Object-based Analytics Storage for Intelligent SQL Query Offloading), a next-generation storage system based on data-aware CSD¹¹. The system was applied to HPC applications developed by the Los Alamos National Laboratory¹², resulting in a significant improvement in data analysis performance. Furthermore, the Optimizer Offloading SSD drew attention for maximizing GPU efficiency by performing optimizer¹³ computations directly within the storage during AI training.

¹¹ Data-Aware Computational Storage Drive (Data-Aware CSD): A storage device that can recognize, analyze, and process data on its own.

¹² Los Alamos National Laboratory (LANL): A national research and development center under the U.S. Department of Energy, conducting research in various areas such as national security, nuclear fusion, and space exploration. It is especially known for conducting scientific research during World War II for the Manhattan Project, an Allied effort that developed the world’s first atomic weapons.

¹³ Optimizer: One of the stages in AI model training that improves performance by adjusting the model’s parameters to minimize the loss function, which indicates how far the results deviate from the target.
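The optimizer step described in the last footnote can be sketched with plain gradient descent on a one-parameter model. This is a generic textbook illustration, not the computation performed by the Optimizer Offloading SSD; the loss function, target, and learning rate are assumptions chosen for the example.

```python
TARGET = 3.0  # ASSUMPTION: illustrative target value


def loss(w: float) -> float:
    """Squared error: how far the parameter is from the target."""
    return (w - TARGET) ** 2


def gradient(w: float) -> float:
    """Derivative of the loss with respect to w."""
    return 2 * (w - TARGET)


def optimizer_step(w: float, grad: float, learning_rate: float = 0.1) -> float:
    """Move the parameter a small step against the gradient to reduce the loss."""
    return w - learning_rate * grad


w = 0.0
initial_loss = loss(w)
for _ in range(50):
    w = optimizer_step(w, gradient(w))

print(f"w after training: {w:.4f}")
print(f"loss went from {initial_loss:.2f} to {loss(w):.2e}")
```

In large models this update runs over billions of parameters every training step, so where it executes matters for how much GPU time and memory remain available for the rest of training.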

A Vision for Next-Gen Storage: Revolutionizing Data Analysis Efficiency

Technical Leader Soonyeal Yang of Solution SW delivering a presentation

In a presentation session, SK hynix outlined its direction for improving data analysis efficiency and advancing storage innovation in HPC environments. Technical Leader Soonyeal Yang of Solution SW gave a talk titled “Proposal for OASIS: An Interoperable and Standards-Based Computational Storage System to Accelerate Data Analytics in HPC.”

Yang stated that inefficiencies in the I/O channels during HPC-based data analysis cause overall system performance degradation and increased costs. He emphasized that OASIS will flexibly distribute such computational loads and greatly contribute to system optimization.
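The I/O inefficiency Yang described can be pictured with a toy comparison between filtering on the host and filtering inside the storage device. This is a conceptual sketch only: it does not reflect how OASIS is implemented, and the dataset, function names, and threshold are all hypothetical.

```python
# Hypothetical dataset: 1,000 sensor rows with temperatures from 20 to 69.
ROWS = [{"id": i, "temperature": 20 + (i % 50)} for i in range(1000)]


def host_side_filter(rows, threshold):
    """Baseline: every row crosses the I/O channel, then the host filters."""
    transferred = list(rows)
    matches = [r for r in transferred if r["temperature"] > threshold]
    return matches, len(transferred)


def storage_side_filter(rows, threshold):
    """Offloaded: the storage device filters first and ships only the matches."""
    matches = [r for r in rows if r["temperature"] > threshold]
    return matches, len(matches)


host_matches, host_moved = host_side_filter(ROWS, threshold=65)
dev_matches, dev_moved = storage_side_filter(ROWS, threshold=65)

print(f"Host-side filter moved {host_moved} rows")
print(f"Storage-side filter moved {dev_moved} rows")
print(f"Same result: {host_matches == dev_matches}")
```

Both paths return the same rows, but the offloaded path moves far fewer of them across the I/O channel. The more selective the query, the larger the saving.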

At SC25, SK hynix showcased both future-oriented solutions and its vision for the upcoming AI infrastructure era. Moving forward, the company will continue to act proactively in a rapidly changing environment and work closely with global customers to become a full-stack AI memory creator, leading the AI and HPC sectors.