SK hynix showcased its full-stack AI memory portfolio for enhancing AI and data center infrastructure at the 2025 OCP Global Summit, held in San Jose, California, from October 13–16.

The OCP Global Summit is a conference hosted by the Open Compute Project (OCP), the world’s largest open data center technology collaboration community. Held under the theme “Leading the Future of AI,” this year’s event brought together developers and experts from leading global companies to share the latest trends in data center and AI infrastructure and to discuss the development of industry solutions.

Visitors gather at SK hynix’s booth

Under the slogan “MEMORY, Powering AI and Tomorrow,” SK hynix presented a range of innovative technologies that improve the performance and efficiency of AI infrastructure. The company’s interactive booth was organized into four sections (HBM¹, AiM², DRAM, and eSSDs³) and featured product-based character designs, 3D models of key technologies, and live demonstrations.

¹ High Bandwidth Memory (HBM): A high-value, high-performance memory product that vertically interconnects multiple DRAM chips and dramatically increases data processing speed compared with conventional DRAM products. There are six generations of HBM: the original HBM, followed by HBM2, HBM2E, HBM3, HBM3E, and HBM4.

² Accelerator-in-Memory (AiM): A next-generation solution that integrates computational capabilities into memory.

³ Enterprise Solid State Drive (eSSD): An enterprise-grade SSD used in servers and data centers.

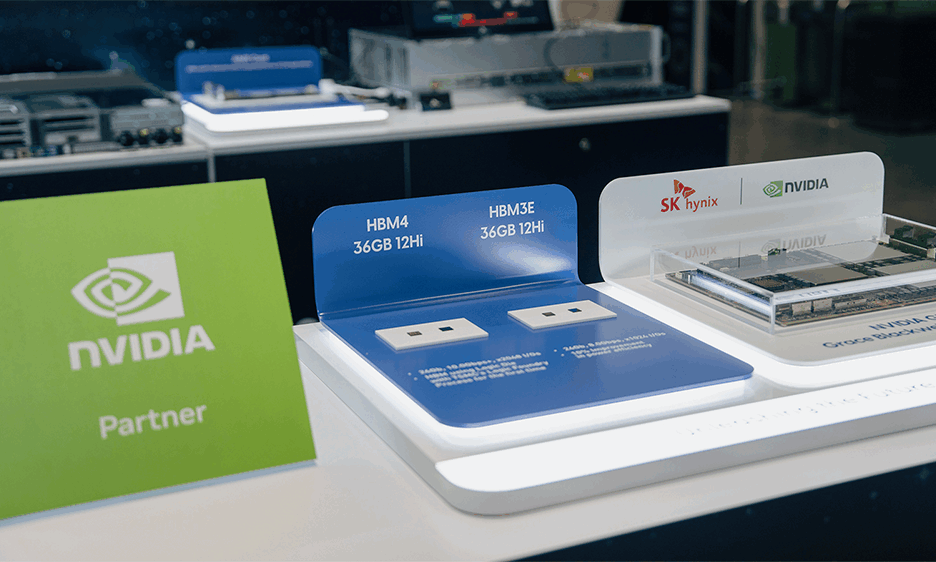

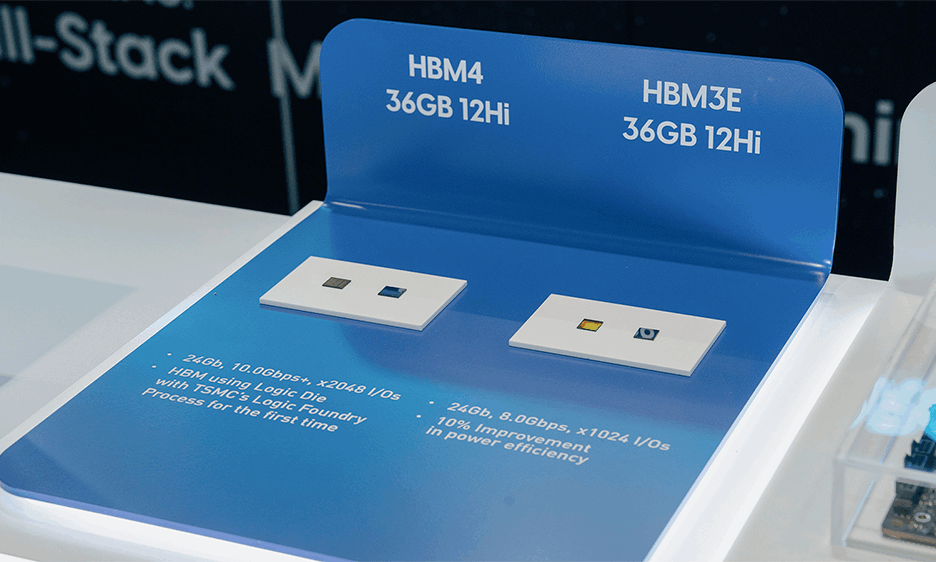

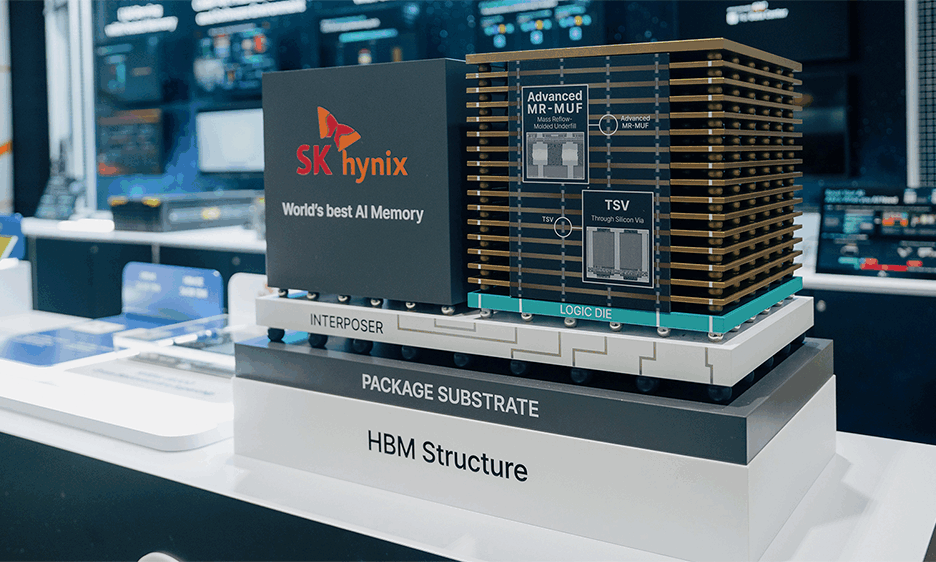

The centerpiece of SK hynix’s booth was its groundbreaking 12-layer HBM4. In September 2025, the company became the first in the world to complete development of HBM4 and establish the product’s mass production system. Featuring 2,048 input/output (I/O) channels, twice as many as the previous generation, HBM4 offers increased bandwidth and more than 40% greater power efficiency. These specifications make it well suited to ultra-high-performance AI computing systems.
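As a rough illustration of why the doubled I/O count matters, peak bandwidth per stack scales with the number of I/O channels multiplied by the data rate of each pin. The sketch below uses the 2,048 I/O figure stated above and the 1,024 it implies for the previous generation. The per-pin data rate is an assumed placeholder chosen only for illustration and is not a figure from SK hynix.

```python
def peak_bandwidth_gb_per_s(io_count: int, pin_rate_gbit_per_s: float) -> float:
    """Peak bandwidth in GB/s: pins x Gbit/s per pin, divided by 8 bits per byte."""
    return io_count * pin_rate_gbit_per_s / 8


# Hypothetical per-pin data rate, held equal for both generations so that
# only the effect of the I/O count is visible. Not a published specification.
ASSUMED_PIN_RATE_GBIT_PER_S = 8.0

hbm4 = peak_bandwidth_gb_per_s(2048, ASSUMED_PIN_RATE_GBIT_PER_S)
previous = peak_bandwidth_gb_per_s(1024, ASSUMED_PIN_RATE_GBIT_PER_S)

print(f"HBM4 (assumed pin rate): {hbm4:.0f} GB/s")
print(f"Previous generation (assumed pin rate): {previous:.0f} GB/s")
print(f"Ratio: {hbm4 / previous:.1f}x")
```

At an equal pin rate, doubling the I/O count doubles the peak bandwidth. Actual products may also differ in pin speed, so real-world ratios can vary.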

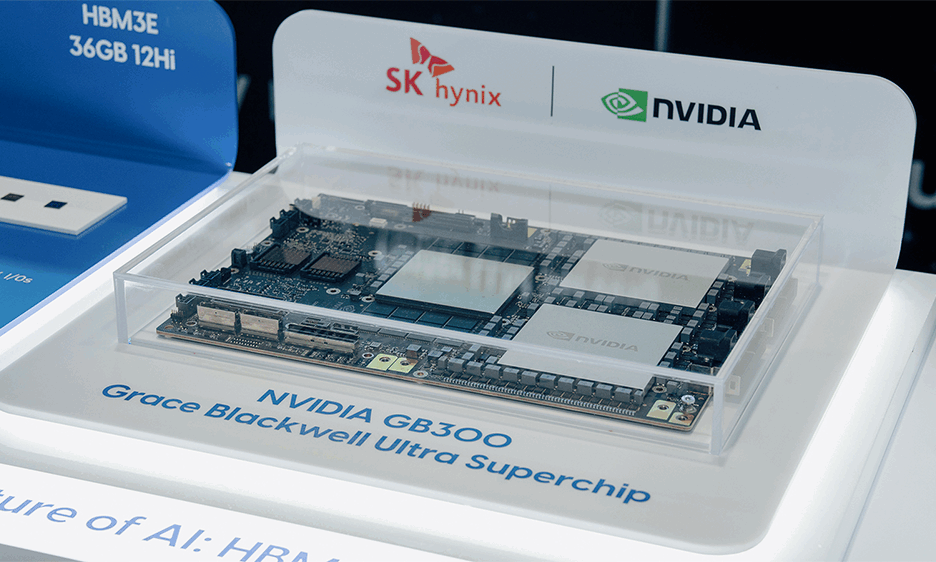

The company also displayed its 36 GB HBM3E, the industry’s highest-capacity HBM available on the market, applied to NVIDIA’s next-generation GB300 Grace™ Blackwell GPU. Next to the display, a 3D model helped visitors better understand the advanced packaging technologies used in HBM, such as TSV⁴ and Advanced MR-MUF⁵.

⁴ Through-silicon via (TSV): A technology that connects vertically stacked DRAM chips by drilling thousands of microscopic holes through the silicon and linking the layers with vertical electrodes.

⁵ Advanced Mass Reflow-Molded Underfill (MR-MUF): A next-generation MR-MUF technology that offers enhanced warpage control, enabling stacking of chips 40% thinner than previous generations. With new protective materials, it also provides improved heat dissipation.

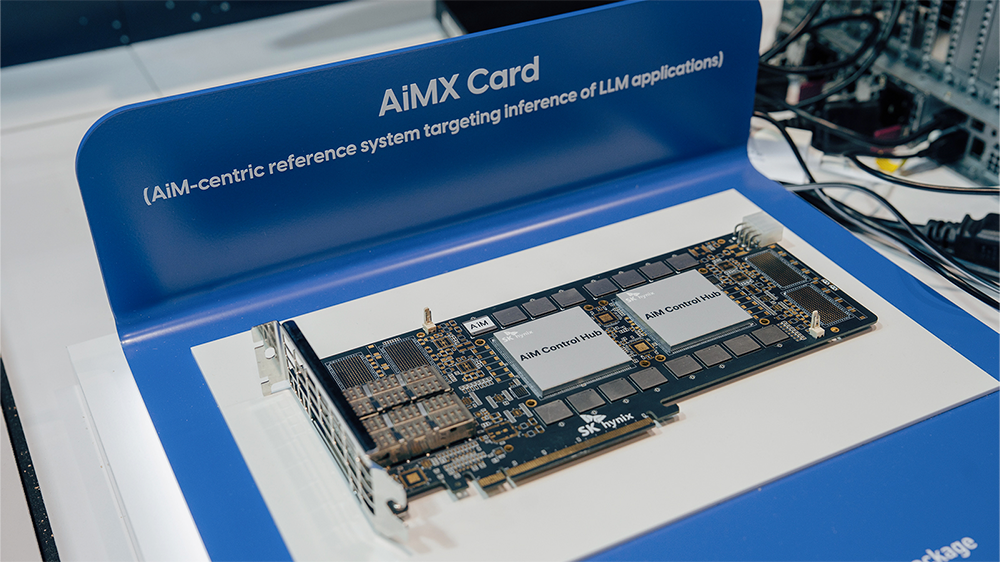

In the AiM section, SK hynix ran a live demonstration of its AiMX⁶ card, which includes multiple GDDR6-AiM chips. The demonstration featured a server equipped with four AiMX cards and two NVIDIA H100 GPUs, highlighting how AiMX is optimized for attention⁷ operations, the core computation in LLMs⁸, by enhancing the speed and efficiency of memory-bound⁹ workloads. AiMX also improves KV-cache¹⁰ utilization during long question-and-answer sequences, mitigating the memory wall¹¹ issue. During the demonstration, Meta’s Llama 3 LLM was run on vLLM¹², showing that multiple users can access a chatbot service simultaneously and seamlessly. Through this, visitors could witness first-hand the technical excellence of SK hynix’s AiM products.

⁶ AiM-based Accelerator (AiMX): SK hynix’s accelerator card featuring GDDR6-AiM chips, specialized for large language models (LLMs): AI systems such as ChatGPT that are trained on massive text datasets.

⁷ Attention: An algorithmic technique that dynamically determines which parts of input data to focus on.

⁸ Large language model (LLM): An AI model trained on massive datasets that understands and generates natural language.

⁹ Memory-bound: A situation in which system performance is limited not by the processor’s computational power but by memory access speed, causing delays while waiting for data access.

¹⁰ Key-Value (KV) cache: A technology that stores and reuses previously computed Key and Value vectors, preserving context from earlier inputs to reduce redundant calculations and respond more efficiently.

¹¹ Memory wall: A bottleneck that occurs when memory access speed lags behind data processor speed.

¹² Virtual large language model (vLLM): An AI framework designed for efficient and optimized LLM inference.
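The attention and KV cache definitions above can be made concrete with a toy example. The sketch below is a minimal single-head illustration in plain Python, not SK hynix’s or vLLM’s implementation: the `KVCache` class and `attend` function are hypothetical names, and each token vector is used directly as its own query, key, and value (real models apply learned projections first).

```python
import math


def attend(query, keys, values):
    """Scaled dot-product attention for one query over all given positions."""
    scale = 1.0 / math.sqrt(len(query))
    scores = [scale * sum(q * k for q, k in zip(query, key)) for key in keys]
    peak = max(scores)  # subtract the maximum for numerical stability
    weights = [math.exp(score - peak) for score in scores]
    total = sum(weights)
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) / total
            for i in range(dim)]


class KVCache:
    """Hypothetical cache that keeps the keys and values of earlier tokens."""

    def __init__(self):
        self.keys = []
        self.values = []

    def step(self, query, key, value):
        # Only the new token's key and value are added; earlier ones are reused.
        self.keys.append(key)
        self.values.append(value)
        return attend(query, self.keys, self.values)


# Simplifying assumption: each token vector serves as its own query, key, and value.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
cache = KVCache()
outputs = [cache.step(t, t, t) for t in tokens]
print(outputs[-1])
```

Each generation step computes nothing new for earlier tokens, but it still reads every cached key and value. As the conversation grows, that read traffic grows with it, which is one way to see why such workloads tend to be limited by memory access rather than by arithmetic.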

CXL-based solutions enable flexible expansion of system memory capacity and bandwidth

SK hynix’s booth also featured various CXL¹³-based solutions that allow flexible expansion of system memory capacity and bandwidth. These included a distributed LLM inference system that connects multiple servers and GPUs through CXL pooled memory rather than a network. Pooled memory allows multiple processors, or hosts, to flexibly share memory. The company also showcased the performance of its CXL Memory Module–Accelerator (CMM-Ax), which adds computing capability to CXL memory, by applying it to SK Telecom’s Petasus AI Cloud.

¹³ Compute Express Link (CXL): A next-generation interconnect designed to link CPUs, GPUs, memory, and other key computing system components, enabling high efficiency for large-scale, ultra-high-speed computation.

In addition, SK hynix verified the performance benefits of a tiering solution that helps utilize various types of memory in an optimal configuration. The solution was enabled by CHMU¹⁴, which was first introduced in the CXL 3.2 standard. A separate demonstration drew significant attention for highlighting the enhanced prompt caching¹⁵ capabilities of LLM service systems based on CMM-DDR5, which shorten response times to user requests.

¹⁴ CXL Hot-range Monitoring Unit (CHMU): A technology that monitors the usage frequency of multiple memory devices to support efficient system operation and device optimization.

¹⁵ Prompt caching: A technology that stores previously processed prompts in AI models to shorten processing time and reduce costs during repeated use.
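The idea behind usage-based memory tiering can be sketched in a few lines. The example below is only an illustration of the general technique, assuming a monitor reports which address ranges are accessed. It does not reflect the actual CHMU interface or SK hynix’s tiering software, and the `plan_tiers` function, the range IDs, and the access log are all hypothetical.

```python
from collections import Counter


def plan_tiers(access_log, fast_capacity):
    """Place the most frequently accessed ranges in the fast tier.

    access_log: iterable of range IDs, one entry per observed access.
    fast_capacity: number of ranges the fast tier can hold.
    Returns (fast, slow) as sets of range IDs.
    """
    counts = Counter(access_log)
    # most_common() sorts by descending access count.
    ranked = [range_id for range_id, _ in counts.most_common()]
    fast = set(ranked[:fast_capacity])
    slow = set(ranked[fast_capacity:])
    return fast, slow


# Hypothetical access trace: r0 is hottest, then r1, then r2 and r3.
access_log = ["r0", "r1", "r0", "r2", "r0", "r1", "r3"]
fast, slow = plan_tiers(access_log, fast_capacity=2)

print("fast tier:", sorted(fast))
print("slow tier:", sorted(slow))
```

A production tiering system would also need to weigh migration cost and changing access patterns over time. This sketch shows only the placement decision.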

In the DRAM section, SK hynix presented its DDR5-based module lineup targeting the next-generation server market. In particular, the display drew attention for featuring a diverse product portfolio with a range of capacities and form factors. These included RDIMM¹⁶ and MRDIMM¹⁷ products that leverage the 1c¹⁸ node, the sixth generation of the 10 nm process technology, as well as 3DS¹⁹ DDR5 RDIMM and DDR5 Tall MRDIMM.

¹⁶ Registered Dual In-line Memory Module (RDIMM): A server memory module product in which multiple DRAM chips are mounted on a substrate.

¹⁷ Multiplexed Rank Dual In-line Memory Module (MRDIMM): A server memory module product with enhanced speed achieved by simultaneously operating two ranks, the basic operating units of the module.

¹⁸ 1c: The 10 nm DRAM process technology has progressed through six generations: 1x, 1y, 1z, 1a, 1b, and 1c.

¹⁹ 3D Stacked Memory (3DS): A high-performance memory solution in which two or more DRAM chips are packaged together and interconnected using TSV technology.

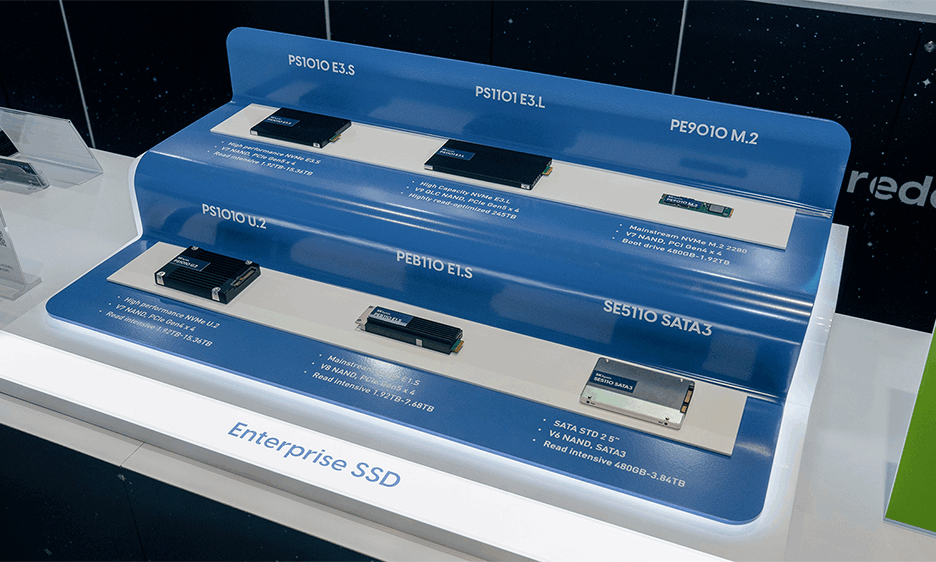

Various eSSD products displayed in the eSSD section

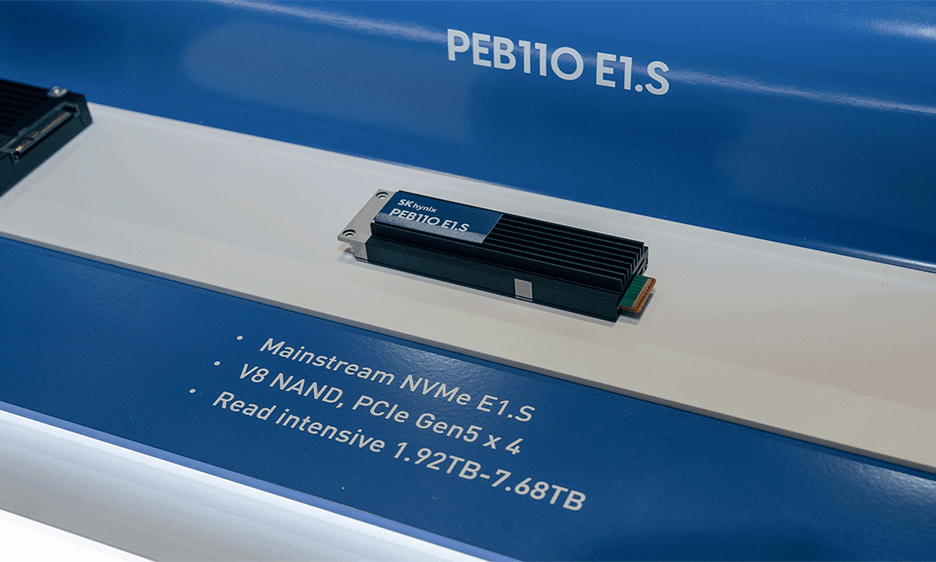

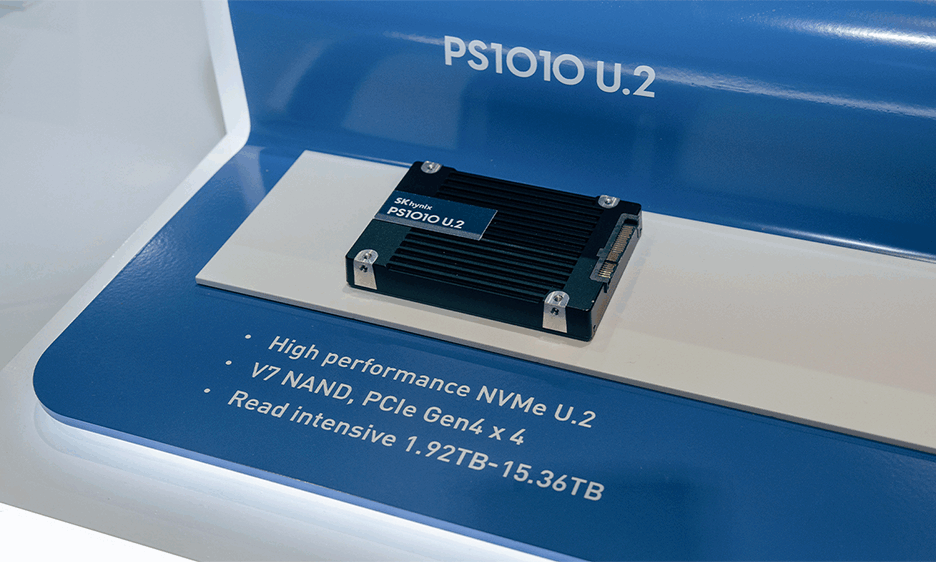

The eSSD section featured various storage solutions optimized for servers and data centers. These included the PS1010 E3.S and U.2 models based on 176-layer 4D NAND and the PEB110 E1.S built on 238-layer 4D NAND. In addition, the company showcased its 245 TB PS1101 E3.L, an ultra-high-capacity model built on 321-layer QLC²⁰ NAND, the industry’s highest layer count. The eSSD lineup is designed to meet diverse server environments and performance requirements, ranging from high-performance PCIe Gen 5 NVMe²¹ to SATA²² interfaces for compact servers.

²⁰ Quad-level cell (QLC): A type of memory cell used in NAND flash that stores four bits of data in a single cell. NAND flash is categorized as single-level cell (SLC), multi-level cell (MLC), triple-level cell (TLC), QLC, and penta-level cell (PLC) depending on how many data bits can be stored in one cell.

²¹ Non-Volatile Memory Express (NVMe): A data transfer protocol that maximizes SSD performance by utilizing PCIe, a high-speed serial communication interface.

²² Serial ATA (SATA): A traditional interface widely used in hard drives and SSDs. Although it offers lower speeds compared to NVMe, it provides high compatibility and stability, making it suitable for small-scale servers and entry-level PCs.
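The cell categories in the QLC note follow a simple rule: a cell that stores n bits must distinguish 2^n charge states. The short sketch below works through that arithmetic for each cell type. The bit counts come from the definition above, and the variable and function names are illustrative only.

```python
# Bits stored per cell for each NAND cell type named in the QLC note.
CELL_TYPES = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4, "PLC": 5}


def charge_states(bits_per_cell: int) -> int:
    """Number of distinct states a cell must hold to encode the given bits."""
    return 2 ** bits_per_cell


states = {name: charge_states(bits) for name, bits in CELL_TYPES.items()}

for name, count in states.items():
    print(f"{name}: {CELL_TYPES[name]} bit(s) per cell, {count} states")
```

Each additional bit doubles the number of states that must be told apart within the same cell. This is the general trade-off behind higher-density cell types such as QLC.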

As well as displaying its groundbreaking products, SK hynix shared its next-generation storage strategy in presentation sessions. Vice President Chunsung Kim, head of eSSD Product Development, gave a presentation titled “Beyond SSD: SK hynix AIN Family Redefining Storage as the Core Enabler of AI at Scale.” During the talk, Kim introduced high-capacity, high-performance solutions for the AI era and outlined strategies to strengthen product competitiveness. Meanwhile, “Thomas” Wonha Choi of Next-Gen Memory & Storage presented a talk titled “Conceptualizing Next Generation Memory & Storage Optimized for AI Inference.” His session proposed directions to meet performance and power needs in line with new market conditions and customer demand.

At the OCP Global Summit 2025, SK hynix took another step forward in reinforcing global collaboration and advancing its technological strategy. Building on this momentum, the company will continue to deliver innovative solutions for the rapidly changing AI infrastructure landscape as it evolves into a full-stack AI memory provider.