SK hynix showcased its next-generation memory semiconductor technologies and solutions at the OCP Global Summit 2023 held in San Jose, California from October 17–19.

The OCP Global Summit is an annual event hosted by the world’s largest data center technology community, the Open Compute Project (OCP), where industry experts gather to share various technologies and visions. This year, SK hynix and its subsidiary Solidigm showcased advanced semiconductor memory products that will lead the AI era under the slogan “United Through Technology”.

▲ Figure 1. SK hynix’s exhibition booth at the OCP Global Summit 2023

At the Booth: Leading Global AI Memory Technologies in the Spotlight

▲ Figure 2. HBM (HBM3/HBM3E), MCR DIMM, DDR5 RDIMM, and LPDDR CAMM products on display at SK hynix’s booth

SK hynix presented a broad range of its solutions at the summit, including its leading HBM¹ (HBM3/3E), CXL², and AiM³ products for generative AI. The company also unveiled some of the latest additions to its product portfolio, including its DDR5 RDIMM, MCR DIMM, enterprise SSD (eSSD), and LPDDR CAMM⁴ devices.

¹High Bandwidth Memory (HBM): A high-value, high-performance product that possesses much higher data processing speeds compared to existing DRAMs by vertically connecting multiple DRAMs with through-silicon via (TSV).

²Compute Express Link (CXL): A next-generation memory solution that increases the memory bandwidth of server systems with traditional DRAM products to improve performance and easily expand memory capacity.

³Accelerator in Memory (AiM): SK hynix’s PIM semiconductor product name, which includes GDDR6-AiM.

⁴Low Power Double Data Rate Compression Attached Memory Module (LPDDR CAMM): A solution developed based on the LPDDR package in line with the next-generation memory standard (CAMM) for laptops and mobile devices. Compared to conventional SO-DIMM modules, LPDDR CAMM has a single-sided configuration that is half as thick and offers improved capacity and power efficiency.

Visitors to the HBM exhibit could see HBM3, which is utilized in NVIDIA’s H100, a high-performance GPU for AI, and also check out the next-generation HBM3E. Due to their low power consumption and ultra-high performance, these HBM solutions are more eco-friendly and are particularly suitable for power-hungry AI server systems.

▲ Figure 3. CXL-based CMS 2.0, pooled memory, and memory expander solutions being demonstrated at the event

Three of SK hynix’s key CXL products were demonstrated at the event, including its CXL-based computational memory solution (CMS)⁵ 2.0. A next-generation memory solution that integrates computational functions into CXL memory, CMS 2.0 uses NMP⁶ to minimize data movement between the CPU and memory. CMS 2.0 was applied to SK Telecom’s spatial data analysis and visualization solution based on Lightning DB, which analyzes foot traffic in real time, illustrating that CMS 2.0 offers data processing power equivalent to that of a server CPU. Moreover, the demonstration highlighted that CMS 2.0 can improve data processing performance and energy efficiency.

⁵Computational Memory Solution (CMS): A memory solution that offers the functions of machine learning and data filtering, which are frequently performed by big data analytics applications. Just like CXL, CMS’s memory capacity is highly scalable.

⁶Near-Memory Processing (NMP): A memory architecture which moves the compute capability next to the main memory to address CPU memory bottlenecks and improve processing performance.

The company also demonstrated its CXL-based pooled memory solution which can significantly reduce idle memory usage and shorten the overhead time of data movement in distributed processing environments such as AI and big data. The demonstration, which featured a technological collaboration with software provider MemVerge, showcased how CXL-based pooled memory solutions can improve system performance in AI and big data distributed processing systems.
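To illustrate why pooling can cut idle memory, the following is a minimal sketch in Python. It is not SK hynix's or MemVerge's implementation; the function names and the demand figures are hypothetical and chosen only to show the arithmetic. It compares provisioning each server for its own peak demand with provisioning one shared pool for the highest combined demand.

```python
# Illustrative only: all names and numbers here are hypothetical,
# not measurements from the demonstration.

def dedicated_capacity(peak_demands_gb):
    """Each server is sized for its own peak, so total capacity is the sum of peaks."""
    return sum(peak_demands_gb)


def pooled_capacity(demand_timeline_gb):
    """A shared pool only has to cover the highest combined demand at any one time."""
    return max(sum(step) for step in demand_timeline_gb)


# Hypothetical memory demand (GB) of three servers at four points in time.
timeline = [
    (100, 20, 30),
    (30, 110, 20),
    (20, 30, 120),
    (60, 60, 60),
]

# Per-server peak across the timeline.
peaks = [max(server) for server in zip(*timeline)]

dedicated = dedicated_capacity(peaks)   # capacity without pooling
pooled = pooled_capacity(timeline)      # capacity with one shared pool
idle_saved = dedicated - pooled         # capacity that no longer sits idle

print(f"dedicated={dedicated} GB, pooled={pooled} GB, saved={idle_saved} GB")
```

Because the three hypothetical servers peak at different times, the shared pool needs less total capacity than the sum of the individual peaks. When all servers peak together, the two figures converge and pooling saves nothing.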

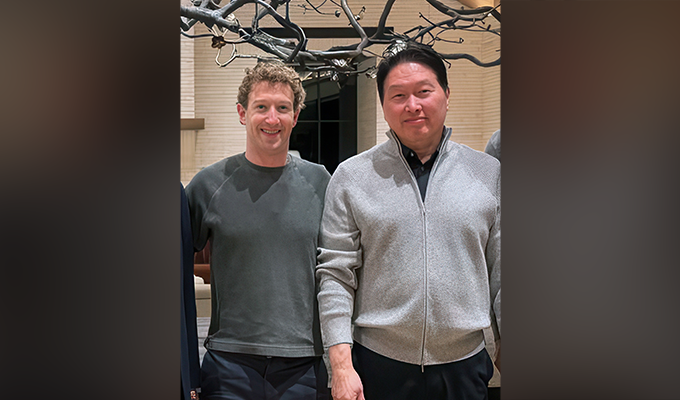

Additionally, SK hynix demonstrated its CXL-based memory expander applied to Meta’s software caching engine, CacheLib, which showed how the CXL-based memory solution optimizes software for improved performance and cost savings.

▲ Figure 4. PIM and AiMX on display at the summit

AiM, a game-changing technology that redefines memory’s role in AI inference, was also presented at the booth. A subset of SK hynix’s PIM⁷ portfolio, AiM promises to revolutionize machine learning, high-performance computing, and big data applications while reducing operating costs. The company demonstrated its GDDR6-AiM, a solution which incorporates computational capabilities into the memory and improves the performance and efficiency of data-intensive generative AI inference systems. Additionally, a demonstration was held for the prototype of AiMX⁸, an innovative generative AI accelerator card based on GDDR6-AiM.

⁷Processing-In-Memory (PIM): An advanced technology that combines computational capabilities and memory on a single die to deliver high computing performance.

⁸Accelerator-in-Memory based Accelerator (AiMX): SK hynix’s accelerator card featuring a GDDR6-AiM chip which is specialized for large language models.

▲ Figure 5. PS1010 E3.S, a PCIe Gen5 eSSD product, showcased at the booth

SK hynix also showcased the PS1010 E3.S, a PCIe (Peripheral Component Interconnect Express) Gen5-based eSSD. Designed for data-intensive applications, cloud computing, and AI workloads, the PS1010 E3.S promises improved performance and reliability with superior speed and lower carbon emissions compared to previous generations.

At the Sessions: Sharing Industry Insights & Key Technologies

▲ Figure 6. SK hynix’s Hoshik Kim, fellow of the System Architecture Group in Memory Forest x&D, holds a session on CXL technology

Through thought-provoking talks and sessions at the summit, SK hynix also outlined its vision for the future development of next-generation memory solutions.

In a panel session titled “Data Central Computing, Present and Future”, Hoshik Kim, fellow of the System Architecture Group in Memory Forest x&D, joined other industry experts to discuss computing and programming models. Kim also held an executive session in which he revealed SK hynix’s progress in developing CXL technology and shared the company’s vision under the theme of “CXL: A Prelude to a Memory-Centric Computing”.

▲ Figure 7. SK hynix’s Youngpyo Joo, Donguk Moon and Jungmin Choi of Memory Forest x&D giving talks on CXL-based solutions

The potential of CXL was also the focal point of three other sessions led by employees from the company’s Memory Forest x&D department. Youngpyo Joo, head of the Software Solutions Group, explored how CXL-based computational memory solution architecture can offer increased usability and flexibility.

Meanwhile, Donguk Moon, technical leader of the Platform Software team, explained how emerging CXL devices can enhance memory capacity and bandwidth at the caching layer for technologies such as AI and web services. In addition, Jungmin Choi, technical leader of the Composable Memory team, covered how the latest CXL technology will further support the disaggregation of memory. Choi also emphasized that this technology can not only solve problems currently faced by data centers, such as idle memory, but also improve system performance.

▲ Figure 8. Euicheol Lim, head of SK hynix’s Memory Solution Product Design Group, discusses PIM-based AiM and PIM technologies

In another engaging session, Euicheol Lim, head of the Memory Solution Product Design Group, explored the potential for PIM technology to meet the high memory and computational demands of AI. Lim highlighted key technologies based on PIM, including SK hynix’s PIM-based AiM accelerator.

Advanced Solutions to Unlock Next-Generation Technologies

As the OCP Global Summit 2023 drew to a close, SK hynix reinforced its commitment to developing groundbreaking solutions which can help realize advanced technologies such as AI. Going forward, the company will continue to tackle industry challenges and make technological breakthroughs for the AI era as the global No. 1 AI memory solution provider.