FMS 2024 features a broader focus on different memory types compared to previous years

SK hynix has returned to Santa Clara, California to present its full array of groundbreaking AI memory technologies at FMS: the Future of Memory and Storage (FMS) 2024 from August 6–8. Previously known as Flash Memory Summit, the conference changed its name to reflect its broader focus on all types of memory and storage products amid growing interest in AI. Bringing together industry leaders, customers, and IT professionals, FMS 2024 covers the latest trends and innovations shaping the memory industry.

Participating in the event under the slogan “Memory, The Power of AI,” SK hynix is showcasing its outstanding memory capabilities through a keynote presentation, multiple technology sessions, and product exhibits.

Keynote Presentation: Envisioning the Future of AI With Leading Memory & Storage Solutions

Keynote presentations have always been a highlight at FMS. The talks act as an important forum for attendees to learn about emerging memory and storage technologies from industry leaders. Due to its leadership in the AI memory field, SK hynix was selected to give a keynote presentation titled “AI Memory and Storage Solution Leadership and Vision for the AI Era” at the newly expanded event.

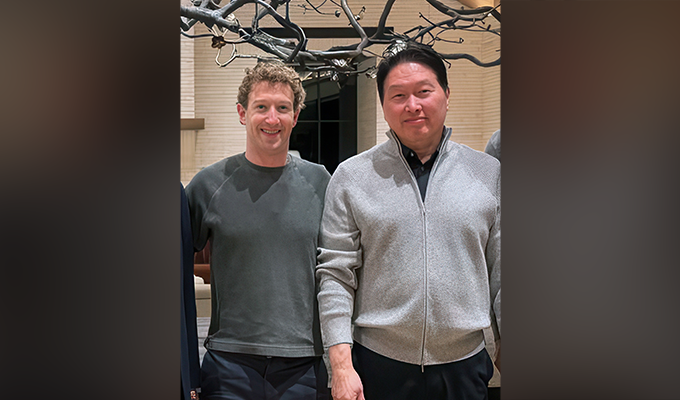

Held on the first day of FMS 2024, the keynote was delivered by Vice President Unoh Kwon, head of HBM Process Integration (PI), and Vice President Chunsung Kim, head of SSD Program Management Office (PMO). The pair provided insights into the company’s DRAM and NAND flash solutions, which aim to solve the pain points of generative AI and promote the continued development of AI. These pain points include maximizing the efficiency of AI training and inference¹ while minimizing the floor space and power needed to store data.

¹ AI inference: The process of running live data through a trained AI model to make a prediction or solve a task.

Kwon and Kim delivering their keynote on SK hynix’s leading AI memory products

Each speaker covered a particular type of memory. Kwon touched on the company’s DRAM products that are optimized for AI systems, such as HBM², CXL®³, and LPDDR5T⁴. Meanwhile, Kim introduced the company’s best-in-class NAND flash storage devices, including its SSD and UFS⁵ solutions, which will continue to be crucial for AI applications in the future.

² High Bandwidth Memory (HBM): A high-value, high-performance product that revolutionizes data processing speeds by connecting multiple DRAM chips with through-silicon via (TSV).

³ Compute Express Link® (CXL®): A PCIe-based next-generation interconnect protocol on which high-performance computing systems are based.

⁴ Low Power Double Data Rate 5 Turbo (LPDDR5T): Low-power DRAM for mobile devices aimed at minimizing power consumption and featuring low voltage operation. LPDDR5T is an upgraded version of LPDDR5X and will be succeeded by LPDDR6.

⁵ Universal Flash Storage (UFS): A high-performance interface for computing and mobile systems which require low power consumption.

In addition to its keynote, SK hynix also held five sessions that took a deeper look into its next-generation products set to solidify the company’s AI technology leadership. These sessions covered a variety of topics, including the company’s DRAM, SSD, and CXL solutions.

Product Booth: Presenting the Industry’s Best AI Memory

SK hynix’s booth at FMS 2024 consists of four sections showcasing many of the products featured in the company’s keynote and session talks. Among the booth’s highlights are samples of the 12-layer HBM3E, the next-generation AI memory solution that is expected to enter mass production in the third quarter of 2024. The company is also holding demonstrations of select products running on its partners’ systems, highlighting its strong collaboration with various major tech companies.

The AI Memory and Storage section includes industry-leading solutions such as SK hynix’s HBM3E and eSSD portfolio

- AI Memory and Storage: Features SK hynix’s flagship AI memory products such as samples of its 12-layer HBM3E, which is set to be the same height as the previous 8-layer version under JEDEC⁶ standards. The section also includes GDDR6-AiM⁷, a product suitable for machine learning due to its computational capabilities and rapid processing speeds, and the ultra-low power LPDDR5T optimized for on-device AI. Storage solutions on display include the PCIe Gen5-based eSSD PS1010, which is ideal for AI, big data, and machine learning due to its rapid sequential read speed.

⁶ Joint Electron Device Engineering Council (JEDEC): A U.S.-based standardization body that is the global leader in developing open standards and publications for the microelectronics industry.

⁷ Accelerator in Memory (AiM): Special-purpose hardware made using processing and computation chips.

At the NAND Tech, Mobile, and Automotive section, attendees can see products such as the company’s 321-layer NAND and ZUFS 4.0

- NAND Tech, Mobile, and Automotive: This section includes solutions such as the world’s highest 321-layer wafer technology as well as triple-level cell (TLC) and quad-level cell (QLC) NAND. Mobile technologies are also showcased, including ZUFS⁸ 4.0, an industry-best NAND product optimized for on-device AI⁹ which boosts a smartphone’s operating system speed compared to standard UFS.

⁸ Zoned Universal Flash Storage (ZUFS): A NAND flash product that improves efficiency of data management. It optimizes data transfer between an operating system and storage devices by storing data with similar characteristics in the same zone of the UFS.

⁹ On-device AI: A technology that implements AI functions on the device itself, instead of going through computation by a physically separated server.

The AI PC and CMS 2.0 section features a demonstration of the company’s industry-leading SSD, PCB01

- AI PC and CMS 2.0: Attendees can see a system demonstration of the industry-best SSD PCB01, which is able to efficiently process large AI computing tasks when applied to on-device AI PCs. In addition, CMS¹⁰ 2.0, a next-generation memory solution that boasts data processing capabilities equivalent to those of a CPU, is applied to a vector database¹¹.

¹⁰ Computational Memory Solution (CMS): A product that integrates computational functions into CXL memory.

¹¹ Vector Database: A collection of data stored as mathematical representations, or vectors. As similar vectors are grouped together, vector databases can make low-latency queries, making them ideal for AI.

The OCS, Niagara, and CMM section displays innovative solutions such as Niagara 2.0 and HMSDK

- OCS, Niagara, and CMM: This section features demonstrations of OCS¹² technology, which enhances data analysis, and the pooled memory solution Niagara 2.0¹³. It also includes a demonstration of CMM¹⁴-DDR5¹⁵, which expands system bandwidth by 50% and capacity by up to 100% compared to systems only equipped with DDR5.

¹² Object-based Computational Storage (OCS): A new computational storage platform for data analytics in high-performance computing. OCS has not only high scalability but also data-aware characteristics that enable it to perform analytics independently without help from compute nodes.

¹³ Niagara 2.0: A solution that connects multiple CXL memories together to allow numerous hosts such as CPUs and GPUs to optimally share their capacity. This eliminates idle memory usage while reducing power consumption.

¹⁴ CXL Memory Module (CMM): A new standardized interface that has an advantage in scalability and helps increase the efficiency of CPUs, GPUs, accelerators, and memory.

¹⁵ Double Data Rate 5 (DDR5): A server DRAM that effectively handles the increasing demands of larger and more complex data workloads by offering enhanced bandwidth and power efficiency compared to the previous generation, DDR4.

Super Women Conference: Cherishing Diversity in the Memory & Storage Industry

Haesoon Oh delivers the keynote at the FMS Super Women Conference

SK hynix also co-sponsored the FMS Super Women Conference, an event held on the sidelines of FMS 2024 that celebrates the achievements of female leaders and promotes diversity in the memory industry. Head of NAND Advanced PI Haesoon Oh, the company’s first female executive-level research fellow, delivered a keynote address on the company’s next-generation innovations and the importance of understanding diversity.

Paving the Way Forward in the AI Era

At FMS 2024, SK hynix underlined its commitment to lead the industry by providing integrated AI memory solutions and expanding its expertise in the sector. Collaborating with other leading partners, the company will strive to provide customers with the best possible solutions that match their rapidly changing needs.