Positioning technology in our world

Navigation has become an essential part of our daily lives. Smartphones double as car navigation devices, smartwatches double as hiking trail guides, and so on. But how does a device really know where we are? The most common technology is GPS, the Global Positioning System.

About 20,000 kilometers above our heads, GPS satellites orbit the Earth and broadcast electromagnetic (EM) signals. By detecting the minute differences in the arrival times of those EM signals, a GPS device can pinpoint where someone is standing on the planet. And while the technology is essentially free and requires no subscription, it does require a device that can receive GPS signals.
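To make the arrival-time idea concrete, here is a deliberately simplified sketch. It assumes a flat 2-D world, three transmitters at known, fixed positions, and a receiver clock perfectly synchronized with them, so each travel time converts directly into a distance. Real GPS works in 3-D with moving satellites and an unsynchronized receiver clock, which is why it needs at least four satellites and works with differences in arrival time. The function names are illustrative only.

```python
import math

C = 299_792_458.0  # speed of light in m/s

def ranges_from_travel_times(travel_times_s):
    """Convert one-way signal travel times (s) into distances (m)."""
    return [C * t for t in travel_times_s]

def trilaterate_2d(anchors, ranges):
    """Locate a receiver from its distances to three known, non-collinear anchors.

    Each anchor i gives a circle (x - xi)^2 + (y - yi)^2 = ri^2. Subtracting the
    first circle from the other two cancels x^2 and y^2, leaving a 2x2 linear
    system that is solved here with Cramer's rule.
    """
    (x1, y1), (x2, y2), (x3, y3) = anchors
    r1, r2, r3 = ranges
    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    b1 = r1**2 - r2**2 + x2**2 - x1**2 + y2**2 - y1**2
    b2 = r1**2 - r3**2 + x3**2 - x1**2 + y3**2 - y1**2
    det = a11 * a22 - a12 * a21
    if det == 0:
        raise ValueError("anchors are collinear; the position is ambiguous")
    return (b1 * a22 - a12 * b2) / det, (a11 * b2 - a21 * b1) / det

# Usage: simulate travel times to a receiver at (300 m, 400 m), then recover it.
anchors = [(0.0, 0.0), (1000.0, 0.0), (0.0, 1000.0)]
true_position = (300.0, 400.0)
travel_times = [math.dist(true_position, a) / C for a in anchors]
estimate = trilaterate_2d(anchors, ranges_from_travel_times(travel_times))
print(estimate)  # approximately (300.0, 400.0)
```

The timing really is minute: at the speed of light, an error of just one microsecond corresponds to roughly 300 m of range error.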

The core of this technology is the satellites themselves, something external that we cannot control. Without the satellites, GPS technology is of little value. Line of sight to the satellites (even though we cannot see them with our eyes) is critical to the technology’s functionality, which is why GPS navigation frequently fails in tunnels, parking garages, densely forested mountain regions, or crowded cities with skyscrapers. GPS signals can also be jammed or otherwise attacked by a third party. When it works, however, GPS provides a relatively accurate result. Overlaying the current ‘position’ estimated by GPS on top of a map creates the basis of a navigator. The remaining job is to perform the ‘positioning’ frequently and update the display.

But does ‘positioning’ generally need continuous external aid such as GPS satellites? For humans, the answer is no: we don’t rely on external EM waves to go for a morning jog around the neighborhood. Even in a previously unvisited area, if we are armed with a static map, whether in our heads from previous experience or on paper, we can position ourselves correctly on that map and navigate to, for example, a friend’s new home. Our eyes can perceive how fast we are moving, how far we are from reference points, or how close we are to a decision point such as a turn, landmark or destination. Our body’s ‘positioning’ system is completely integrated and self-sufficient and doesn’t require continuous aid from an external source.

Newer electronic devices, such as cars and robot vacuum cleaners, have adopted this vision-based approach and even use sensing beyond what human eyes can see, such as infrared light, lasers and radio-frequency (RF) waves, for a better ‘visualization’ of the environment. The downside of a vision system, however, is that the data from its sensors must be collected and interpreted. Inferring the direction and speed of motion from vision data is not a trivial task: it imposes a huge computational load that requires powerful processors as well as large data storage and memory, and it consumes considerable power and energy. All of this adds up to a more expensive system.

Simple approach to location tracking

Is there a simpler approach to positioning that avoids an extreme amount of computation? In theory, we can use one of the most ubiquitous sensors around us: an accelerometer. In principle, determining position from an accelerometer, a motion-based sensor, requires an almost negligible computational load compared with a vision-based approach. At the same time, accelerometers are extremely inexpensive, and the theoretical operating principle behind them is intuitive and straightforward.

By definition, acceleration is the change in velocity over a time duration (a=Δv/Δt), and velocity is the change in position over a time duration (v=Δs/Δt). Merging these two relationships and generalizing them to non-uniform motion (letting Δt shrink toward zero), we can relate acceleration to position:

$$ a(t) = \frac{dv}{dt} = \frac{d^2 s}{dt^2} $$

This simple relationship tells us that the second time derivative of position is the acceleration. If we record positional data over time, we can differentiate it twice and determine the acceleration throughout the trip, obtaining ‘a’ from ‘s.’
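A minimal numerical check of this relationship, assuming evenly spaced position samples: the second time derivative is approximated by a central second difference. The function name and the sample trip are illustrative.

```python
def second_difference(positions, dt):
    """Estimate acceleration from evenly spaced position samples.

    Uses the central second difference (s[k+1] - 2*s[k] + s[k-1]) / dt**2,
    which is defined only for interior samples.
    """
    return [
        (positions[k + 1] - 2 * positions[k] + positions[k - 1]) / dt**2
        for k in range(1, len(positions) - 1)
    ]

# Usage: a trip with constant acceleration of 2 m/s^2, sampled every 0.1 s.
dt = 0.1
a_true = 2.0
positions = [0.5 * a_true * (k * dt) ** 2 for k in range(11)]  # s(t) = a t^2 / 2
accelerations = second_difference(positions, dt)
print(accelerations)  # nine values, each very close to 2.0
```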

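Since this is a mathematical relationship, we can also determine ‘s’ from ‘a’ by reversing the calculation. In this case, we need a double integration:

$$ s(t) = s_0 + v_0 t + \int_0^{t} \int_0^{\tau} a(\sigma)\, d\sigma\, d\tau $$

where $s_0$ and $v_0$, the initial position and velocity, are the constants generated by the two integrations.
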
In theory, then, if we record acceleration data over time, such as the samples reported by an accelerometer, we can double-integrate it to obtain position. This, however, is where the immense challenge of ‘self-sufficient’ inertial navigation arises. To see why, let’s revisit a lesson from early college calculus. Differentiation lowers the order of a polynomial and eliminates constants, while integration raises the order and introduces a constant. ‘Double’ differentiation therefore eliminates constant and linear terms, whereas double integration makes whatever it integrates grow tremendously faster (a constant becomes a quadratic in time).
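Reversing the process, here is a minimal sketch of numerical double integration (trapezoidal rule, evenly spaced samples assumed). Note how the integration constants, the initial position s0 and initial velocity v0, must come from outside the acceleration data. The function name is illustrative.

```python
def double_integrate(accels, dt, s0=0.0, v0=0.0):
    """Turn evenly spaced acceleration samples into position samples.

    s0 and v0 are the two integration constants (initial position and
    velocity). They cannot be recovered from acceleration data and must be
    supplied. Both integrations use the trapezoidal rule.
    """
    velocities = [v0]
    for k in range(1, len(accels)):
        velocities.append(velocities[-1] + 0.5 * (accels[k - 1] + accels[k]) * dt)
    positions = [s0]
    for k in range(1, len(velocities)):
        positions.append(positions[-1] + 0.5 * (velocities[k - 1] + velocities[k]) * dt)
    return positions

# Usage: 1 s of constant 2 m/s^2, starting at s0 = 5 m with v0 = 1 m/s.
dt = 0.1
positions = double_integrate([2.0] * 11, dt, s0=5.0, v0=1.0)
print(positions[-1])  # close to 5 + 1*1 + 0.5*2*1**2 = 7.0
```

With the default s0 = v0 = 0, the same data would give a final position of about 1 m: identical accelerations, different trajectories.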

The challenge in this technology comes from this double integration combined with the unavoidable tiny errors in the acceleration samples. Consider a hypothetical small error in position measurement: differentiation removes a constant offset, and double differentiation (i.e., from s to a) also removes an error that drifts linearly with time. On the other hand, a small error in acceleration measurement grows with integration, and grows even bigger and faster with double integration (i.e., from a to s). Error sources such as quantization error, mechanical bias in an accelerometer, miscalibration, and even undetectable defects within manufacturing tolerances are always present in captured acceleration data.
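The asymmetry can be seen with two hypothetical constant errors applied to a stationary object: an offset in a position sensor, which the second difference removes completely, and a bias b in an accelerometer, which double integration turns into steadily growing false motion (analytically b·t²/2). The error magnitudes and the sampling rate are assumptions chosen for illustration.

```python
dt = 0.01      # assumed sampling interval: 100 Hz
n = 1001       # 10 s worth of samples
offset = 0.5   # hypothetical constant error of a position sensor, m
bias = 0.01    # hypothetical constant error of an accelerometer, m/s^2

# s -> a: a stationary object seen by a position sensor with a fixed offset.
positions = [offset] * n
accel_from_positions = [
    (positions[k + 1] - 2 * positions[k] + positions[k - 1]) / dt**2
    for k in range(1, n - 1)
]

# a -> s: the same stationary object seen by an accelerometer with a fixed bias.
velocity, position = 0.0, 0.0
for a in [bias] * (n - 1):
    velocity += a * dt         # first integration (simple Euler step)
    position += velocity * dt  # second integration

print(max(abs(x) for x in accel_from_positions))  # 0.0: the offset vanished
print(position)  # about 0.5 m of false motion after 10 s
```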

If this acceleration data is double-integrated for positioning, all of these tiny errors are double-integrated as well, without bound. With this approach, a stationary object on your desk will appear to move as soon as the double integration starts. The longer we watch, that is, the longer the integration runs, the more the object will continuously accelerate away from you, in three dimensions. Left running long enough, the double integration will eventually report that the object has arrived at the Moon. This ‘drift,’ caused by error integrated over time, is a nightmarish problem for self-sufficient inertial navigation, known as ‘Dead-Reckoning.’
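Because a constant bias b integrates twice into b·t²/2, the false displacement grows quadratically with time. A back-of-the-envelope sketch, assuming a hypothetical residual bias of 0.01 m/s² (about 1 milli-g); the function names are illustrative.

```python
import math

def drift_after(bias, t):
    """False displacement (m) after t seconds of double-integrating a constant bias (m/s^2)."""
    return 0.5 * bias * t**2

def time_to_drift(bias, distance):
    """Seconds until a constant bias has been double-integrated into `distance` meters."""
    return math.sqrt(2.0 * distance / bias)

bias = 0.01  # hypothetical residual bias in m/s^2, roughly 1 milli-g
for t in (1, 10, 60, 3600):
    print(f"after {t:>4} s: {drift_after(bias, t):,.3f} m")

moon = 384_400e3  # mean Earth-Moon distance in meters
print(f"'arrives' at the Moon after {time_to_drift(bias, moon) / 86400:.1f} days")
```

Under this assumed bias, the object appears to move about 18 m in a minute and about 65 km in an hour, and it reaches the Moon in a little over three days; a larger bias shortens all of these times.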

How to reduce errors in inertial navigation

There have been efforts to limit how much error each sample can contribute, such as object-dependent physical limits (e.g., a human cannot cover more than a certain distance per step) and constraints on possible ranges of motion (e.g., multiple inertial measurement units (IMUs), which include accelerometers, gyroscopes, and magnetometers, can be placed at several locations on the moving object to detect and rule out impossible motions). These measures are effective to a certain degree: because the error is bounded by the set ‘rules,’ it no longer accelerates away from you at an astronomical speed. Yet the problem of snowballing integration error remains and fundamentally impedes ‘accurate’ positioning, even of a still object.
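A minimal 1-D sketch of one such ‘rule’, assuming a hypothetical speed limit v_max applied to a stationary object with a biased accelerometer. Clamping the integrated velocity turns the quadratic drift into linear drift: the error no longer runs away at astronomical speed, but it still grows without bound. The function name, bias and limit are assumptions, not values from any real system.

```python
def integrate_with_speed_limit(accels, dt, v_max=None):
    """Double-integrate 1-D acceleration, optionally clamping speed to +/- v_max.

    The clamp stands in for an object-dependent rule such as "a pedestrian
    cannot move faster than v_max". It caps how fast the error can grow,
    but it does not stop the error from growing.
    """
    v, s = 0.0, 0.0
    for a in accels:
        v += a * dt
        if v_max is not None:
            v = max(-v_max, min(v_max, v))
        s += v * dt
    return s

dt = 0.01                  # assumed 100 Hz sampling
bias = 0.05                # hypothetical accelerometer bias, m/s^2
accels = [bias] * 60_000   # a stationary object observed for 10 minutes
unbounded = integrate_with_speed_limit(accels, dt)
clamped = integrate_with_speed_limit(accels, dt, v_max=2.0)  # hypothetical limit, m/s
print(f"no rule:    {unbounded:,.0f} m")  # about 9 km
print(f"speed rule: {clamped:,.0f} m")    # about 1.2 km, and still growing
```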

To suppress the error completely, the traditional approach has been to invest in more hardware, especially non-motion-based sensors such as vision and laser sensors. However, once other error-bounding sensors are involved, the benefits of inertial navigation based solely on motion sensors (low computational complexity, cheaper construction, low power consumption and so on) dissipate. This has limited inertial navigation systems mostly to spacecraft and aircraft applications that can afford such requirements and that use them over periods short enough to keep the inaccuracy of the positional estimate under acceptable levels. An inertial navigation system was used, for example, in the Apollo spacecraft and has also been used to supplement flight automation and navigation systems in Boeing 747s and US military aircraft.

In practical applications, the acceleration data is routinely integrated inside a Kalman filter, where additional sensor outputs, such as those of a gyroscope or magnetometer, can further improve the positional estimate. When the system model is nonlinear, as it is in inertial navigation once the gyroscope-derived orientation is used to rotate the measured acceleration into a fixed reference frame before the double integration, an Extended Kalman filter (EKF) is used. The “error” or “noise” characteristics are built into the EKF as noise covariances and treated as a natural input to the system. In principle, these noise characteristics are modeled precisely enough to eliminate (or accurately account for) their effects during the double integration. However, the aforementioned ‘tiny’ errors in the measurements, such as quantization error, mechanical bias in an accelerometer, miscalibration, and even undetectable defects within manufacturing tolerances, can change dynamically during and between sensor operations, making precise modeling of these error sources a nearly impossible task.
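A minimal 1-D linear Kalman filter sketch, assuming a state of [position, velocity], the measured acceleration as the input, and an occasional external position fix standing in for the extra sensor information. It is a plain linear filter, not an EKF, and not the method of any specific product. The noise variances q (accelerometer) and r (position fix) are hand-picked assumptions, which is exactly the kind of noise modeling described above; the accelerometer bias is deliberately left unmodeled.

```python
def kf_predict(x, P, a_meas, dt, q):
    """Prediction step for the state [position, velocity] driven by a measured acceleration.

    q is the assumed variance of the accelerometer noise, (m/s^2)^2.
    """
    s, v = x
    b0, b1 = 0.5 * dt * dt, dt  # how acceleration enters position and velocity
    x = [s + v * dt + b0 * a_meas, v + b1 * a_meas]
    p00, p01, p10, p11 = P[0][0], P[0][1], P[1][0], P[1][1]
    # F P F^T with F = [[1, dt], [0, 1]], plus Q = q * B B^T with B = [b0, b1]
    P = [
        [p00 + dt * (p01 + p10) + dt * dt * p11 + q * b0 * b0, p01 + dt * p11 + q * b0 * b1],
        [p10 + dt * p11 + q * b1 * b0, p11 + q * b1 * b1],
    ]
    return x, P

def kf_update_position(x, P, z, r):
    """Update step with a position measurement z of variance r (H = [1, 0])."""
    s, v = x
    S = P[0][0] + r                    # innovation variance
    k0, k1 = P[0][0] / S, P[1][0] / S  # Kalman gain
    innovation = z - s
    x = [s + k0 * innovation, v + k1 * innovation]
    P = [
        [(1 - k0) * P[0][0], (1 - k0) * P[0][1]],
        [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]],
    ]
    return x, P

# Usage: a stationary object, an accelerometer with an unmodeled bias,
# and a position fix (true value 0 m) once per second.
dt, steps, bias = 0.01, 6000, 0.05  # 60 s at 100 Hz; hypothetical bias in m/s^2
q, r = 0.1, 0.25                    # assumed noise variances
x_raw, P_raw = [0.0, 0.0], [[1.0, 0.0], [0.0, 1.0]]
x_kf, P_kf = [0.0, 0.0], [[1.0, 0.0], [0.0, 1.0]]
for k in range(steps):
    x_raw, P_raw = kf_predict(x_raw, P_raw, bias, dt, q)  # pure double integration
    x_kf, P_kf = kf_predict(x_kf, P_kf, bias, dt, q)
    if (k + 1) % 100 == 0:
        x_kf, P_kf = kf_update_position(x_kf, P_kf, 0.0, r)
print(f"raw double integration: {x_raw[0]:.1f} m")  # about 90 m
print(f"Kalman filter:          {x_kf[0]:.2f} m")
```

The position fixes keep the estimate bounded even though the filter knows nothing about the bias; without them, the filter’s estimate drifts just like the raw double integration. Choosing q and r is the hard part: a much smaller q, for example, makes the filter trust its drifting prediction more and leaves a larger residual error.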

AI-assisted Dead-Reckoning

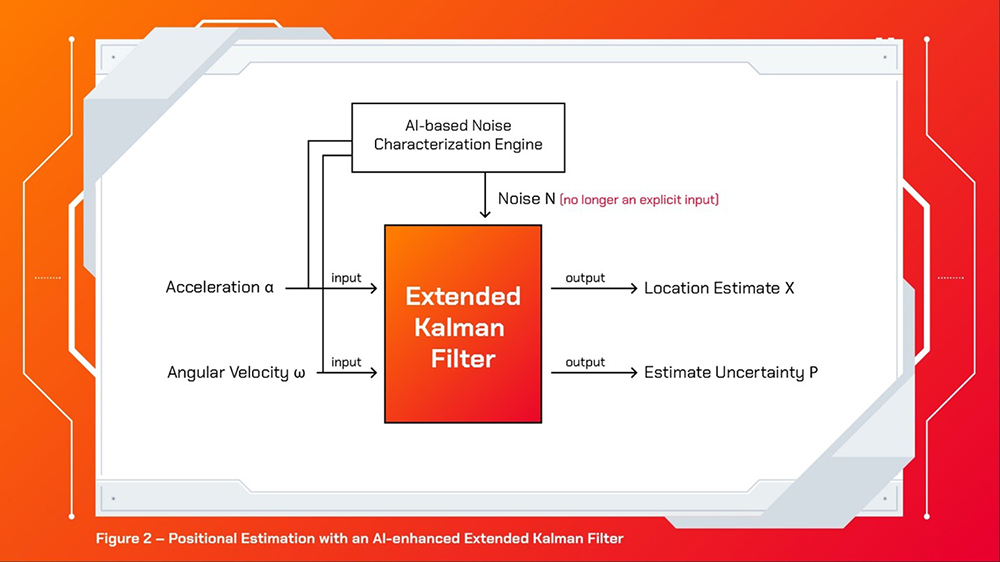

With the recent, strong emergence of artificial intelligence (AI) technology and deep neural networks, a great opportunity has surfaced to learn the ‘noise parameters’ automatically, along with related customizations that benefit IMU-based, self-sufficient inertial navigation. Figure 1 shows the traditional approach with IMU measurements, noise modeling, and the EKF, whereas Figure 2 illustrates the emerging approach without explicit noise modeling, which instead relies on a machine learning-based engine to characterize the noise automatically. Figure 3, excerpted from the “AI-IMU Dead-Reckoning” report [1], shows a promising result for automotive applications.
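A minimal sketch of where adaptive noise parameters plug into a filter, assuming a 1-D state [position, velocity] and a ‘velocity is zero’ pseudo-measurement. The function `noise_model` is a hypothetical, hand-written stand-in for the AI engine (it is not a neural network), and the pseudo-measurement is an illustrative assumption; the approach in Figure 2 uses a machine learning-based engine with a full EKF, which this does not reproduce.

```python
import statistics

def noise_model(accel_window):
    """HYPOTHETICAL stand-in for a learned noise model (not a neural network).

    Maps a window of recent acceleration samples to the variance r of a
    'velocity is zero' pseudo-measurement: a quiet window suggests the object
    is stationary, so the pseudo-measurement is trusted strongly.
    """
    quiet = statistics.pstdev(accel_window) < 0.05  # arbitrary threshold, m/s^2
    return 1e-4 if quiet else 1e2

def update_zero_velocity(x, P, r):
    """Kalman update of [position, velocity] with pseudo-measurement v = 0 (H = [0, 1])."""
    s, v = x
    S = P[1][1] + r                    # innovation variance
    k0, k1 = P[0][1] / S, P[1][1] / S  # Kalman gain
    innovation = 0.0 - v
    x = [s + k0 * innovation, v + k1 * innovation]
    P = [
        [P[0][0] - k0 * P[1][0], P[0][1] - k0 * P[1][1]],
        [(1 - k1) * P[1][0], (1 - k1) * P[1][1]],
    ]
    return x, P

# Usage: the same filter state, corrected with noise parameters chosen per window.
x = [10.0, 0.3]  # position 10 m; velocity has drifted to 0.3 m/s
P = [[1.0, 0.0], [0.0, 0.1]]
quiet_window = [0.010, 0.012, 0.011, 0.009]  # only a small bias: looks stationary
busy_window = [0.8, -0.5, 1.2, -0.9]         # vigorous motion
x_quiet, _ = update_zero_velocity(x, P, noise_model(quiet_window))
x_busy, _ = update_zero_velocity(x, P, noise_model(busy_window))
print(x_quiet)  # velocity pulled almost to 0
print(x_busy)   # velocity left almost unchanged
```

The filter equations stay the same; only r changes from step to step. A single hand-tuned, fixed r cannot be right both when the object is stationary and when it is moving, which is the gap an adaptive noise engine is meant to fill.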

For verification, the solid black curve, labeled “GPS,” is provided as the ground truth. The blue curve, labeled “IMU,” is the result of directly double-integrating the acceleration. As expected, it suffers from diverging integration error and veers off from the ground truth early on. The dashed green curve, labeled “AI Engine,” is the result of the EKF aided by the AI engine, which produces adaptive noise parameters. The AI approach is surprisingly effective and accurate compared with the GPS ground truth. Interestingly, the GPS itself failed during this trip, as marked in the figure by “GPS outage.” The “ground truth,” in fact, was not the real truth, since it could not report an accurate position during the outage. Meanwhile, the AI-enhanced, IMU-only dead reckoning continued to report a precise location throughout the GPS outage. In fact, this AI-based dead reckoning is even comparable in performance to LiDAR and powerful vision-based approaches, whose physical size, power consumption, and cost are far higher than those of the AI-fueled dead-reckoning method.

Note that Figure 3 shows only a 2-D result for travel that could be fully 3-axis, obtained at vehicle scale with the corresponding units of km, km/h, and meters (resolution). Human-scale navigation would impose different requirements on the AI engine design and sensor capabilities, especially regarding the minimum resolution of the positional estimate. These variations are being actively investigated by academic researchers.

Impact of positioning technology

The impact and applicability of this technology are immense. Compared with existing navigation technologies, it will enable navigation that is self-sufficient, extremely low-power, tremendously economical and independent of environmental factors such as weather, electromagnetic interference, trees, buildings, line-of-sight and so on. Autonomous moving objects, such as vehicles, robots or bikes, can feature accurate, self-standing navigation on top of other navigation aids at negligible added cost. Indoor navigation for humans, pets, carts, and other objects will also open up countless use cases. The technology can also be retrofitted easily into existing devices, since the location estimation engine is implemented purely in software. We already have accelerometers and gyroscopes all around us in abundance, in our smartphones. There might even be a cool (or fun) app soon that utilizes this incredible advancement in technology.

AI software technologies are currently conquering some of the most challenging engineering problems in unexpected ways. Recent advances in semiconductor technology have essentially enabled such innovations by providing explosively increasing computational power and memory capacity at lower cost. Hardware manufacturers, including SK hynix, will stay extremely busy keeping up with the never-ending appetite of AI software for critical hardware: large data storage for the massive amounts of training data for deep neural networks, such as NAND flash-based SSDs; high-speed, high-capacity memory such as DRAM (DDR4, DDR5, HBM2E, GDDR6, etc.); and fast processors.

[1] M. Brossard, A. Barrau and S. Bonnabel, “AI-IMU Dead-Reckoning,” in IEEE Transactions on Intelligent Vehicles, vol. 5, no. 4, pp. 585-595, Dec. 2020, doi: 10.1109/TIV.2020.2980758.

By Jinyeong Moon, Ph.D.

Assistant Professor

Electrical & Computer Engineering

FAMU-FSU College of Engineering